How did Skinner use the Skinner box?

Skinner showed how positive reinforcement worked by placing a hungry rat in his Skinner box. The box contained a lever on the side, and as the rat moved about the box, it would accidentally knock the lever.

What is a Skinner box and how is it used in learning?

The Skinner box is a chamber, often small, used to conduct operant conditioning research with animals. It usually contains a lever or key that the animal can operate to obtain food or water as a reinforcer.

How did Skinner's Box contribute to psychology?

Skinner's theory of operant conditioning played a key role in helping psychologists understand how behavior is learned. It explains why reinforcement can be used so effectively in the learning process, and how schedules of reinforcement can affect the outcome of conditioning.

What methods did Skinner use?

Skinner conducted research on shaping behavior through positive and negative reinforcement and demonstrated operant conditioning, a behavior modification technique that he developed in contrast with classical conditioning. His approach to behavior modification was to put the subject on a step-by-step program.

Is the Skinner box still used today?

The Skinner box is still used today to test pharmaceuticals and in various other types of experimentation with small animals. Behaviorist theories learned from the Skinner box are still applied in many areas of life today, such as in the classroom and on social media platforms.

Skinner box: key terms

- The Skinner box is a chamber with a highly controlled environment, used to study operant conditioning processes with laboratory animals.
- Animals press levers in response to stimuli in order to receive "rewards."
- Reinforcement: increases the likelihood that a behavior will recur.
- Punishment: decreases the likelihood that a behavior will recur.

How will you apply operant conditioning in the classroom?

Examples of operant conditioning in the classroom include giving stickers for good behavior, taking away playtime for bad behavior, and awarding good or poor grades on tests based on performance.

What did Skinner think about the concept of free will?

Skinner dismissed concepts like “free will” and “motivation” as illusions that disguise the real causes of human behavior. In Skinner's scheme of things, the person who commits a crime has no real choice.

How did Skinner's childhood experiences influence his later approach to studying behavior?

His view that humans operate like machines may be related to the fact that he spent a lot of time as a child building machines. His interest in animals also dates from his childhood.

What were the results of the Skinner box?

Skinner showed how negative reinforcement worked by placing a rat in his Skinner box and then subjecting it to an unpleasant electric current that caused it some discomfort. As the rat moved about the box, it would accidentally knock the lever. As soon as it did so, the electric current would be switched off.

What is operant conditioning in psychology?

Operant conditioning (also known as instrumental conditioning) is a process by which humans and animals learn to behave in such a way as to obtain rewards and avoid punishments. It is also the name for the paradigm in experimental psychology by which such learning and action selection processes are studied.

What was Skinner's famous experiment?

Skinner's most famous experiment used the Skinner box. To show how reinforcement works in a controlled environment, Skinner placed a hungry rat into a box that contained a lever. As the rat scurried around inside the box, it would accidentally press the lever, causing a food pellet to drop into the box.

How does a Skinner box work?

A Skinner box must include at least one lever, bar, or key that the animal can manipulate. When the lever is pressed, food, water, or some other type of reinforcement might be dispensed.

Why use Skinner box?

The Skinner box is usually enclosed, to keep the animal from experiencing other stimuli. Using the device, researchers can carefully study behavior in a very controlled environment. For example, researchers could use the Skinner box to determine which schedule of reinforcement led to the highest rate of response in the study subjects.

What was Skinner's motivation for creating his operant conditioning chamber?

Skinner was inspired to create his operant conditioning chamber as an extension of the puzzle boxes that Edward Thorndike famously used in his research on the law of effect. Skinner himself did not refer to this device as a Skinner box, instead preferring the term "lever box."

What happens when a lever is pressed?

When the lever is pressed, food, water, or some other type of reinforcement might be dispensed. Other stimuli can also be presented, including lights, sounds, and images. In some instances, the floor of the chamber may be electrified. The Skinner box is usually enclosed, to keep the animal from experiencing other stimuli.

Why is the Skinner box important?

The Skinner box is an important tool for studying learned behavior. It has contributed a great deal to our understanding of the effects of reinforcement and punishment.

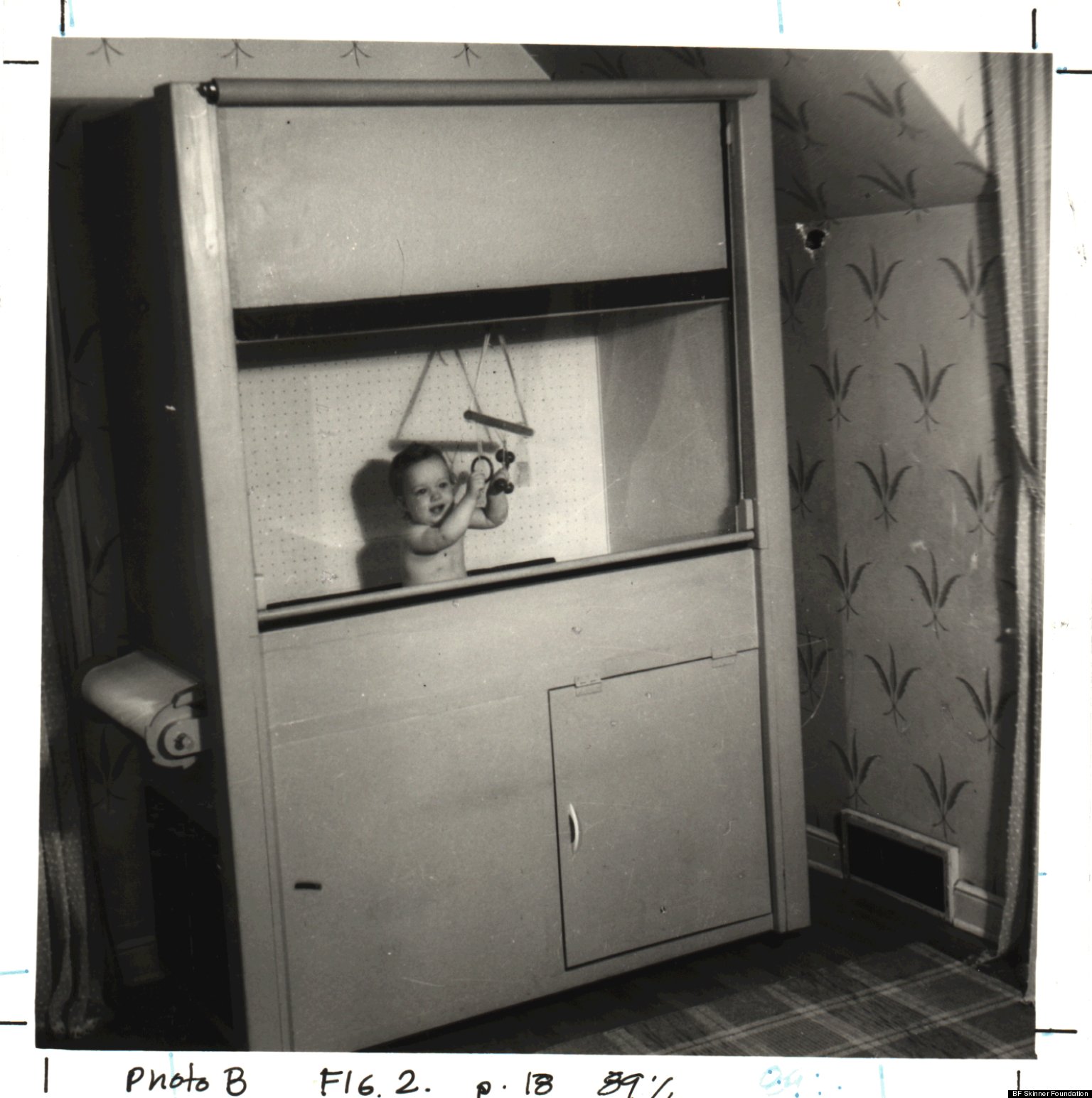

What is Skinner's crib called?

The Skinner box should not be confused with one of Skinner's other inventions, the baby tender (also known as the air crib). At his wife's request, Skinner created a heated crib with a plexiglass window that was designed to be safer than other cribs available at that time. Confusion over how the crib was used led some to believe that it was an experimental device, a variation of the Skinner box.

What is variable ratio schedule?

Variable-ratio schedule: Subjects receive reinforcement after an unpredictable number of responses that varies around an average.
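To make the schedule concrete, here is a minimal Python sketch that compares a fixed-ratio schedule with a variable-ratio one. The function names are hypothetical. Drawing each ratio requirement uniformly from 1 to 2*mean - 1 is our own assumption. Real laboratory programs use various distributions, and some instead reinforce each response with a fixed probability.

```python
import random
from itertools import islice


def fixed_ratio(n):
    """FR-n: reinforce every n-th response (hypothetical helper)."""
    count = 0
    while True:
        count += 1
        if count == n:
            count = 0
            yield True   # this response earns a reinforcer
        else:
            yield False


def variable_ratio(mean, seed=0):
    """VR-mean: reinforce after an unpredictable number of responses.

    Assumption: each requirement is drawn uniformly from 1..2*mean-1,
    so the requirements average `mean`.
    """
    rng = random.Random(seed)
    required = rng.randint(1, 2 * mean - 1)
    count = 0
    while True:
        count += 1
        if count >= required:
            count = 0
            required = rng.randint(1, 2 * mean - 1)  # next, unpredictable requirement
            yield True
        else:
            yield False


def reinforced_responses(schedule, n_responses):
    """Return the (1-based) response numbers that earned a reinforcer."""
    return [i + 1 for i, hit in enumerate(islice(schedule, n_responses)) if hit]


print(reinforced_responses(fixed_ratio(5), 20))     # [5, 10, 15, 20]
print(reinforced_responses(variable_ratio(5), 20))
```

Under this VR-5 sketch the animal still earns about one reinforcer per five responses on average. However, it cannot predict which particular response will pay off.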

B. F. Skinner and the Operant Conditioning Chamber

The Skinner box was originally developed to analyze the response of an organism operating in its environment when it encounters a reinforcer. Skinner used animals in his experiments to formulate and refine theories applicable to people.

Marketing

Operant conditioning applies readily to the marketing of products. For example, advertisers know that there are three types of operant conditioning: positive reinforcement, negative reinforcement, and outright punishment.

Gaming

Designers of modern video games use Skinner's principles when creating new games, and operant conditioning is present in the design of many of them.

What is the Skinner box?

B.F. Skinner proposed his theory of operant conditioning after conducting various experiments on animals. He used a special box known as the “Skinner box” for his experiments on rats. As the first step in his experiment, he placed a hungry rat inside the Skinner box. The rat was initially inactive inside ...

How did Skinner experiment negative reinforcement?

B.F. Skinner also conducted an experiment that demonstrated negative reinforcement. Skinner placed a rat in the chamber in a similar manner, but instead of keeping it hungry, he subjected the chamber to an unpleasant electric current. Having experienced the discomfort, the rat started to move desperately around the box and accidentally knocked the lever. Pressing the lever immediately stopped the flow of the unpleasant current. After a few repetitions, the rat had learned to go directly to the lever to escape the discomfort.

What is the action of pressing the lever?

Here, pressing the lever is the operant response (behavior), and the food released inside the chamber is the reward. The experiment is also described as instrumental conditioning, because the response is instrumental in getting food.

What was the first step in the Skinner experiment?

As the first step in his experiment, he placed a hungry rat inside the Skinner box. The rat was initially inactive inside the box, but as it gradually adapted to the environment of the box, it began to explore. Eventually, the rat discovered a lever that, when pressed, released food inside the box.

What is the result of escaping the electric current?

The electric current acted as the unpleasant stimulus, and escaping it (the negative reinforcement) ensured that the rat repeated the action again and again. Here too, pressing the lever is an operant response, and the complete stop of the electric current is its reward.

What is the importance of operant conditioning?

The key to any operant conditioning is recognizing the operant behavior and the consequence it produces in that particular environment. ...

What is Skinner's theory?

Skinner based his theory on the simple fact that observable behavior is much easier to study than internal mental events. Skinner’s work was far less extreme than that of Watson (1913), and it deemed classical conditioning too simplistic a theory to be a complete explanation of complex human behavior.

What is baby tender?

This “baby tender,” as Skinner called it, provided Deborah with a place to sleep and remain comfortably warm throughout the severe Minnesota winters without having to be wrapped in numerous layers of clothing and blankets (and developing the attendant rashes).

How old was Deborah when she slept in her crib?

Deborah slept in her novel crib until she was two and a half years old, and by all accounts grew up a happy, healthy, thriving child. The trouble began in October 1945, when the magazine Ladies’ Home Journal ran an article by Skinner about his baby tender. The article featured a picture of Deborah in a portable (and therefore smaller) ...

What is the Skinner box?

Skinner was a renowned behavioral psychologist who began his career in the 1930s and is best known for his development of the Skinner box, a laboratory apparatus used to conduct and record the results of operant conditioning experiments with animals. (These are typically experiments in which an animal must manipulate an object such as a lever in order to obtain a reward.)

How to keep a baby warm?

We tackled first the problem of warmth. The usual solution is to wrap the baby in half-a-dozen layers of cloth: shirt, nightdress, sheet, and blankets. This is never completely successful. The baby is likely to be found steaming in its own fluids or lying cold and uncovered. Schemes to prevent uncovering may be dangerous, and in fact they have sometimes even proved fatal. Clothing and bedding also interfere with normal exercise and growth and keep the baby from taking comfortable postures or changing posture during sleep. They also encourage rashes and sores. Nothing can be said for the system on the score of convenience, because frequent changes and launderings are necessary.

Who said these legends were nothing more than outrageous rumors?

Deborah Skinner Buzan affirmed that these legends were nothing more than outrageous rumors:

Who described her experience with the baby tender many years later?

As Deborah Skinner described her experience with the baby tender many years later:

Who wrote the book Opening Skinner's Box?

In 2004 author Lauren Slater touched off a brouhaha and accusations of shoddy research when she repeated many of the familiar “Skinner box” rumors in her book Opening Skinner’s Box: Great Psychological Experiments of the Twentieth Century. According to legend, she wrote, Skinner kept Deborah:

How did Skinner train pigeons?

During World War II, the military invested in Skinner’s project to train pigeons to guide missiles through the skies. The psychologist used a device that emitted a clicking noise to train pigeons to peck at a small, moving point underneath a glass screen. Skinner posited that the birds, situated in front of a screen inside a missile, would see enemy torpedoes as specks on the glass and rapidly begin pecking at them. Their movements would then be used to steer the missile toward the enemy: pecks at the center of the screen would direct the rocket to fly straight, while off-center pecks would cause it to tilt and change course. Skinner managed to teach one bird to peck at a spot more than 10,000 times in 45 minutes, but interest in pigeon-guided missiles, along with the project’s funding, eventually faded.

What is reinforcement in psychology?

Skinner’s approach introduced a new term into the literature: reinforcement. Behavior that is reinforced, like a mother excitedly drawing out the sounds of “mama” as a baby coos, tends to be repeated, and behavior that’s not reinforced tends to weaken and die out. “Positive” refers to encouraging a behavior by adding something after it, such as rewarding a dog with a treat, and “negative” refers to encouraging a behavior by taking something away. For example, when a driver absentmindedly keeps sitting at a green light, the driver waiting behind honks the horn. The first driver is reinforced for moving when the honking stops. Reinforcement extends beyond babies and pigeons: we’re rewarded for going to work each day with a paycheck every two weeks, and we likely wouldn’t keep showing up at the office if the paychecks stopped.

What is the verbal summator?

The verbal summator, an auditory version of the Rorschach inkblot test, allowed participants to project subconscious thoughts through sound. Skinner quickly abandoned this endeavor because personality assessment didn’t interest him, but the technology spawned several other types of auditory perception tests.

How to teach a pigeon to turn in a circle?

If you want to teach a pigeon to turn in a circle to the left, you give it a reward for any small movement it makes in that direction. Soon, the pigeon catches onto this and makes larger movements to the left, which garner more rewards, until the bird completes the full circle.
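The shaping procedure can be sketched as a small Python simulation. All the numbers here are hypothetical assumptions for illustration. On each trial, the bird's leftward movement is drawn from a range around its current habit. Only movements at least as large as that habit are rewarded, so the requirement rises as the behavior grows. This is a toy model of successive approximation, not a description of how Skinner actually ran his pigeon sessions.

```python
import random


def shape_left_turn(target_deg=360.0, start_deg=5.0, seed=0, max_trials=10_000):
    """Toy shaping by successive approximation (all numbers hypothetical).

    Returns the trial on which a full left circle was first rewarded,
    or None if that never happened within max_trials.
    """
    rng = random.Random(seed)
    habit = start_deg  # size of the bird's usual leftward movement, in degrees
    for trial in range(1, max_trials + 1):
        # This trial's movement varies around the bird's current habit.
        turn = rng.uniform(0.5 * habit, 1.5 * habit)
        # Criterion: reward any movement at least as large as the current habit,
        # so the requirement rises as the behavior grows.
        if turn >= habit:
            if turn >= target_deg:
                return trial  # full circle completed and rewarded
            # The reward makes the larger movement more typical.
            habit += 0.5 * (turn - habit)
    return None


print(shape_left_turn())
```

Because only movements at or above the current habit are rewarded, the habit can never shrink. Each reward nudges it a little closer to a full circle.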

How did Skinner use the teaching box?

The teaching box. Skinner believed his teaching machine, which broke material down bit by bit and offered rewards along the way for correct responses, could serve almost like a private tutor for students. Material was presented in sequence, and the machine provided hints and suggestions until students verbally explained a response to a problem (Skinner didn’t believe in multiple-choice answers). The device wouldn’t let students move on in a lesson until they understood the material, and whenever students got any part of it right, the machine gave positive feedback until they reached the solution. The teaching box didn’t catch on in schools, but many computer-based self-instruction programs today use the same idea.

How many times did Skinner teach a bird to peck?

Skinner managed to teach one bird to peck at a spot more than 10,000 times in 45 minutes, but interest in pigeon-guided missiles, along with the project’s funding, eventually faded.

What is an animal that operates on their environment?

The term “operant” refers to an animal or person “operating” on their environment to effect change while learning a new behavior. Operant conditioning breaks a task down into increments.

What is the operant chamber used for?

It is used to study both operant conditioning and classical conditioning. Skinner created the operant chamber as a variation of the puzzle box originally created by Edward Thorndike.

Why did Skinner create the operant conditioning chamber?

Skinner designed the operant conditioning chamber to allow for specific hypothesis testing and behavioural observation. He wanted to create a way to observe animals in a more controlled setting as observation of behaviour in nature can be unpredictable.

How does operant conditioning work?

An operant conditioning chamber permits experimenters to study behavior conditioning (training) by teaching a subject animal to perform certain actions (like pressing a lever) in response to specific stimuli, such as a light or sound signal. When the subject correctly performs the behavior, the chamber mechanism delivers food or another reward. In some cases, the mechanism delivers a punishment for incorrect or missing responses. For instance, to test how operant conditioning works in certain invertebrates, such as fruit flies, psychologists use a device known as a "heat box". It takes essentially the same form as the Skinner box, but it is divided into two sides: one whose temperature can be changed and one whose temperature stays constant. As soon as the invertebrate crosses over to the side whose temperature can change, that area is heated. Eventually, the invertebrate is conditioned to stay on the side that is not heated. The conditioning is strong enough that even when the temperature is turned down to its lowest point, the fruit fly still refrains from approaching that area of the heat box. These types of apparatus allow experimenters to study conditioning and training through reward and punishment mechanisms.

What is gamification in game design?

Gamification, the technique of using game design elements in non-game contexts, has also been described as using operant conditioning and other behaviorist techniques to encourage desired user behaviors.

How does operant conditioning affect behavior?

A child's behavior, for example, is shaped by the compliments, comments, approval, and disapproval that follow it. An important strength of operant conditioning is its ability to explain learning in real-life situations. From an early age, parents nurture their children’s behavior by using rewards and by showing praise following an achievement (crawling or taking a first step), which reinforces such behavior. When a child misbehaves, punishments in the form of verbal discouragement or the removal of privileges are used to discourage them from repeating their actions. Military training offers another example of this kind of shaping: students are exposed to strict punishments, and this continuous routine shapes them into disciplined individuals.

What is the Skinner box?

An operant conditioning chamber (also known as the Skinner box) is a laboratory apparatus used to study animal behavior. The operant conditioning chamber was created by B. F. Skinner while he was a graduate student at Harvard University. It may have been inspired by Jerzy Konorski's studies.

Why are conditioning chambers used in animal learning?

The chamber's design allows for easy monitoring of the animal and provides a space in which certain behaviours can be manipulated. This controlled environment may allow for research and experimentation that cannot be performed in the field.

How did Skinner show positive reinforcement?

Skinner showed how positive reinforcement worked by placing a hungry rat in his Skinner box. The box contained a lever on the side, and as the rat moved about the box, it would accidentally knock the lever. As soon as it did so, a food pellet would drop into a container next to the lever.
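Here is a minimal Python sketch of the same idea. It assumes a simple linear update rule, similar in spirit to later mathematical learning models such as Bush and Mosteller's; this is not something Skinner himself used. Each press followed by food makes pressing more likely, and each unrewarded press (extinction) makes it less likely. The function name and parameter values are hypothetical.

```python
import random


def simulate_lever_pressing(trials, p_press=0.05, learning_rate=0.1,
                            reinforce=True, seed=0):
    """Toy law-of-effect model of a rat in a Skinner box (hypothetical).

    Returns the probability of pressing before the first trial and after
    every trial.
    """
    rng = random.Random(seed)
    history = [p_press]
    for _ in range(trials):
        if rng.random() < p_press:  # the rat happens to press the lever
            if reinforce:
                # A food pellet follows the press: pressing becomes more likely.
                p_press += learning_rate * (1.0 - p_press)
            else:
                # Extinction: no pellet, so pressing gradually weakens.
                p_press -= learning_rate * p_press
        history.append(p_press)
    return history


acquisition = simulate_lever_pressing(500)
extinction = simulate_lever_pressing(500, p_press=0.9, reinforce=False)
print(round(acquisition[-1], 3), round(extinction[-1], 3))
```

In this sketch, a rat that starts with a 5% chance of pressing on each trial climbs toward pressing almost every time when food follows the press. A rat that presses 90% of the time gradually stops once the food is withheld.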

How can operant conditioning be used to produce complex behavior?

Skinner argues that the principles of operant conditioning can be used to produce extremely complex behavior if rewards and punishments are delivered in such a way as to move an organism closer and closer to the desired behavior each time.

What is operant conditioning?

Operant conditioning, also known as instrumental conditioning, is a method of learning normally attributed to B.F. Skinner, in which the consequences of a response determine the probability of it being repeated. Through operant conditioning, behavior that is reinforced (rewarded) will likely be repeated, and behavior that is punished will occur less ...

What is the Skinner box?

A Skinner box, also known as an operant conditioning chamber, is a device used to objectively record an animal's behavior in a compressed time frame.

Why did the Skinner study show that rats learned to repeat behavior?

In the Skinner study, because food followed a particular behavior, the rats learned to repeat that behavior (i.e., operant conditioning). There is little difference between the learning that takes place in humans and that in other animals. Therefore research (e.g., operant conditioning) can be carried out on animals (rats and pigeons) ...

What is Skinner's view of behavior?

He believed that the best way to understand behavior is to look at the causes of an action and its consequences. He called this approach operant conditioning.

How did Skinner teach rats to avoid electric current?

In fact, Skinner even taught the rats to avoid the electric current by turning on a light just before the current came on. The rats soon learned to press the lever when the light came on, because they had learned that doing so would keep the electric current from being switched on.
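The avoidance procedure can be sketched the same way. This Python model is hypothetical, including its names and numbers. Each trial begins with the warning light. If the rat presses the lever before the current starts, the current is cancelled. That removal of an unpleasant event (negative reinforcement) makes pressing more likely on later trials.

```python
import random


def signaled_avoidance(trials=300, p_press=0.1, learning_rate=0.2, seed=0):
    """Toy model of signaled avoidance (hypothetical parameters).

    Returns one boolean per trial: True if the rat received the current.
    """
    rng = random.Random(seed)
    shocked = []
    for _ in range(trials):
        # The warning light comes on; the rat may press before the current starts.
        if rng.random() < p_press:
            # Pressing cancels the current: removing an unpleasant event
            # (negative reinforcement) strengthens pressing during the light.
            p_press += learning_rate * (1.0 - p_press)
            shocked.append(False)
        else:
            # No press during the light, so the current is switched on.
            shocked.append(True)
    return shocked


log = signaled_avoidance()
print("shocks in first 50 trials:", sum(log[:50]))
print("shocks in last 50 trials:", sum(log[-50:]))
```

In this toy model, the early trials are mostly shocks. Once pressing during the light has been strengthened, the rat avoids the current on nearly every trial.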