How do I install spark on a cluster?

Installing Spark. Install Spark in standalone mode on a Single node cluster – for Apache Spark Installation in Standalone Mode, simply place Spark setup on the node of the cluster and extract and configure it. Follow this guide If you are planning to install Spark on a multi-node cluster.

What is spark standalone mode?

The Spark standalone mode sets the system without any existing cluster management software. For example Yarn Resource Manager / Mesos. We have spark master and spark worker who divides driver and executors for Spark application in Standalone mode. So, let’s start Spark Installation in Standalone Mode.

What is Apache Spark standalone mode of deployment?

Spark Standalone Mode of Deployment This is necessary to update all the present packages in your machine. This will install JDK in your machine and would help you to run Java applications. Java is a pre-requisite for using or running Apache Spark Applications. This screenshot shows the java version and assures the presence of java on the machine.

What is the default security mode for spark?

Security in Spark is OFF by default. This could mean you are vulnerable to attack by default. Please see Spark Security and the specific security sections in this doc before running Spark. To install Spark Standalone mode, you simply place a compiled version of Spark on each node on the cluster.

How do I run Spark application locally?

So, how do you run the spark in local mode? It is very simple. When we do not specify any --master flag to the command spark-shell, pyspark, spark-submit, or any other binary, it is running in local mode. Or we can specify --master option with local as argument which defaults to 1 thread.

Can you run Spark on a single machine?

Spark can run in Local Mode on a single machine or in Cluster-Mode on different machines connected to distributed computing. Local Mode is ideal for learning Spark installation and application development. All you need is your laptop or an instance on the computing cloud, like AWS EC2.

What is difference between local and standalone mode in Spark?

So the only difference between Standalone and local mode is that in Standalone you are defining "containers" for the worker and spark master to run in your machine (so you can have 2 workers and your tasks can be distributed in the JVM of those two workers?)

Do you need to install Spark on all nodes?

No, it is not necessary to install Spark on all the 3 nodes. Since spark runs on top of Yarn, it utilizes yarn for the execution of its commands over the cluster's nodes. So, you just have to install Spark on one node.

What is the difference between Spark cluster mode and Spark client mode?

In cluster mode, the Spark driver runs inside an application master process which is managed by YARN on the cluster, and the client can go away after initiating the application. In client mode, the driver runs in the client process, and the application master is only used for requesting resources from YARN.

How do I run Pyspark on local machine?

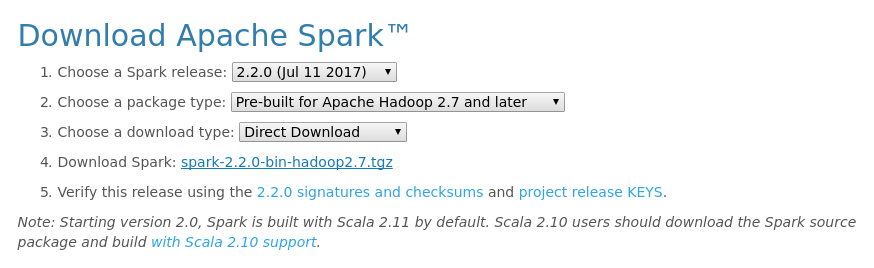

Installing Apache SparkHead over to the Spark homepage.Select the Spark release and package type as following and download the . tgz file.Save the file to your local machine and click 'Ok'.Let's extract the file using the following command. $ tar -xzf spark-2.4.6-bin-hadoop2.7.tgz.

What is standalone mode?

When you start the software, it detects any frame that is connected to the computer. If no frame is connected, the software runs in “standalone” mode. This lets you do everything except run tests on specimens.

Does Spark standalone use Hadoop?

Spark is a fast and general processing engine compatible with Hadoop data. It can run in Hadoop clusters through YARN or Spark's standalone mode, and it can process data in HDFS, HBase, Cassandra, Hive, and any Hadoop InputFormat.

What are different modes in Spark?

Spark application can be submitted in two different ways – cluster mode and client mode. In cluster mode, the driver will get started within the cluster in any of the worker machines. So, the client can fire the job and forget it. In client mode, the driver will get started within the client.

Does Spark require cluster?

Spark on a distributed model can be run with the help of a cluster. There are x number of workers and a master in a cluster. The one which forms the cluster divide and schedules resources in the host machine. Dividing resources across applications is the main and prime work of cluster managers.

When should you not use Spark?

When Not to Use SparkIngesting data in a publish-subscribe model: In those cases, you have multiple sources and multiple destinations moving millions of data in a short time. ... Low computing capacity: The default processing on Apache Spark is in the cluster memory.

Why Spark is faster than Hive?

Speed: – The operations in Hive are slower than Apache Spark in terms of memory and disk processing as Hive runs on top of Hadoop. Read/Write operations: – The number of read/write operations in Hive are greater than in Apache Spark. This is because Spark performs its intermediate operations in memory itself.

How Spark works on a single node?

Single Node cluster properties Runs Spark locally. The driver acts as both master and worker, with no worker nodes. Spawns one executor thread per logical core in the cluster, minus 1 core for the driver. All stderr , stdout , and log4j log output is saved in the driver log.

Can I run Spark on my laptop?

Apache Spark comes in a compressed tar/zip files hence installation on windows is not much of a deal as you just need to download and untar the file. Download Apache spark by accessing the Spark Download page and select the link from “Download Spark (point 3 from below screenshot)”.

Is Spark still relevant?

According to Eric, the answer is yes: “Of course Spark is still relevant, because it's everywhere. Everybody is still using it.

What is PySpark?

PySpark is the Python API for Apache Spark, an open source, distributed computing framework and set of libraries for real-time, large-scale data processing. If you're already familiar with Python and libraries such as Pandas, then PySpark is a good language to learn to create more scalable analyses and pipelines.

Installing Spark Standalone to A Cluster

To install Spark Standalone mode, you simply place a compiled version of Spark on each node on the cluster. You can obtain pre-built versions of Sp...

Starting A Cluster Manually

You can start a standalone master server by executing:Once started, the master will print out a spark://HOST:PORT URL for itself, which you can use...

Connecting An Application to The Cluster

To run an application on the Spark cluster, simply pass the spark://IP:PORT URL of the master as to the SparkContextconstructor.To run an interacti...

Launching Spark Applications

The spark-submit script provides the most straightforward way tosubmit a compiled Spark application to the cluster. For standalone clusters, Spark...

Configuring Ports For Network Security

Spark makes heavy use of the network, and some environments have strict requirements for usingtight firewall settings. For a complete list of ports...

i. Platform

Operating system: Ubuntu 14.04 or later, we can also use other Linux flavors like CentOS, Redhat, etc.

ii. Software you need to install before installing Spark

You need to install Java before Spark installation. So, let’s begin by installing Java.

iii. Installing Spark

Install Spark in standalone mode on a Single node cluster – for Apache Spark Installation in Standalone Mode, simply place Spark setup on the node of the cluster and extract and configure it. Follow this guide If you are planning to install Spark on a multi-node cluster.

iv. Start Spark Services

Now, start a standalone master server by executing- [php]./sbin/start-master.sh [/php]

v. Running sample Spark application

Once you have done Apache Spark Installation in Standalone Mode Let’s run Apache Spark Pi example (the jar for the example is shipped with Spark) [php]./bin/spark-submit –class org.apache.spark.examples.SparkPi –master spark://sapna-All-Series:7077 –executor-memory 1G –total-executor-cores 1 /home/sapna/spark-2.0.0-bin-hadoop2.6/examples/jars/spark-examples_2.11-2.0.0.jar 10 [/php] –class: The entry point for your application. –master: The master URL for the cluster. –executor-memory: Specify memory to be allocated for the application. –total-executor-cores: Specify no.

vi. Starting the Spark Shell

Now to play with Spark, firstly create RDD and perform various RDD operations using this Spark shell commands tutorial. See Also-

How to install Spark standalone?

Installing Spark Standalone to a Cluster. To install Spark Standalone mode, you simply place a compiled version of Spark on each node on the cluster. You can obtain pre-built versions of Spark with each release or build it yourself.

How to run an application on Spark cluster?

To run an application on the Spark cluster, simply pass the spark://IP:PORT URL of the master as to the SparkContext constructor.

How to launch a Spark cluster?

To launch a Spark standalone cluster with the launch scripts, you should create a file called conf/workers in your Spark directory, which must contain the hostnames of all the machines where you intend to start Spark workers, one per line. If conf/workers does not exist, the launch scripts defaults to a single machine (localhost), which is useful for testing. Note, the master machine accesses each of the worker machines via ssh. By default, ssh is run in parallel and requires password-less (using a private key) access to be setup. If you do not have a password-less setup, you can set the environment variable SPARK_SSH_FOREGROUND and serially provide a password for each worker.

What is Spark Submit?

The spark-submit script provides the most straightforward way to submit a compiled Spark application to the cluster. For standalone clusters, Spark currently supports two deploy modes. In client mode, the driver is launched in the same process as the client that submits the application. In cluster mode, however, the driver is launched from one of the Worker processes inside the cluster, and the client process exits as soon as it fulfills its responsibility of submitting the application without waiting for the application to finish.

How many parts does Spark have?

Spark Standalone has 2 parts, the first is configuring the resources for the Worker, the second is the resource allocation for a specific application.

What is the option to control the number of cores that spark-shell uses on the cluster?

You can also pass an option --total-executor-cores <numCores> to control the number of cores that spark-shell uses on the cluster.

How to specify jars in Spark?

For any additional jars that your application depends on, you should specify them through the --jars flag using comma as a delimiter (e.g. --jars jar1,jar2 ). To control the application’s configuration or execution environment, see Spark Configuration.

How to install Spark standalone?

Installing Spark Standalone to a Cluster. To install Spark Standalone mode, you simply place a compiled version of Spark on each node on the cluster. You can obtain pre-built versions of Spark with each release or build it yourself.

How to run an application on Spark cluster?

To run an application on the Spark cluster, simply pass the spark://IP:PORT URL of the master as to the SparkContext constructor.

How to launch a Spark cluster?

To launch a Spark standalone cluster with the launch scripts, you should create a file called conf/slaves in your Spark directory, which must contain the hostnames of all the machines where you intend to start Spark workers, one per line. If conf/slaves does not exist, the launch scripts defaults to a single machine (localhost), which is useful for testing. Note, the master machine accesses each of the worker machines via ssh. By default, ssh is run in parallel and requires password-less (using a private key) access to be setup. If you do not have a password-less setup, you can set the environment variable SPARK_SSH_FOREGROUND and serially provide a password for each worker.

What is the option to control the number of cores that spark-shell uses on the cluster?

You can also pass an option --total-executor-cores <numCores> to control the number of cores that spark-shell uses on the cluster.

How to specify jars in Spark?

For any additional jars that your application depends on, you should specify them through the --jars flag using comma as a delimiter (e.g. --jars jar1,jar2 ). To control the application’s configuration or execution environment, see Spark Configuration.

What is sbin/start-master.sh?

sbin/start-master.sh - Starts a master instance on the machine the script is executed on.

What is the main port of Spark?

The master and each worker has its own web UI that shows cluster and job statistics. By default, you can access the web UI for the master at port 8080. The port can be changed either in the configuration file or via command-line options.

What version of Hadoop is needed for Spark?

If you are using Spark versions that is lower than 2.0, for example spark-1.6.1-bin-hadoop2.6, you will need the winutils.exe for hadoop-2.6.0, which can be downloaded from https://github.com/stonefl/winutils/raw/master/hadoop-2.6.0/bin/winutils.exe

What is Apache Spark?

Apache Spark is a cluster comuting framework for large-scale data processing, which aims to run programs in parallel across many nodes in a cluster of computers or virtual machines . It comibnes a stack of libraries including SQL and DataFrames, MLlib, GraphX, and Spark Streaming. Spark can run in four modes:

Where does Apache Mesos driver run?

Apache Mesos, where driver runs on the master node while work nodes run on separat machines.

Is Spark local mode compatible with cluster mode?

As Spark’s local mode is fully compatible with cluster modes, thus the local mode is very useful for prototyping, developing, debugging, and testing. Programs written and tested locally can be run on a cluster with just a few additional steps. In addition, the standalone mode can also be used in real-world scenarios to perform parallel computating across multiple cores on a single computer. In this post, I will walk through the stpes of setting up Spark in a standalone mode on Windows 10.

Can you run spark-shell as administrator?

Now, you can run spark-shell in the cmd ( run as administrator) or PowerShell ( run as administrator) to test the installation. If you can see the scala console shown as below, then you are good to go, Congratulations!

How many modes of deployment for Apache Spark?

There are two modes to deploy Apache Spark on Hadoop YARN.

What is Spark streaming?

Spark Streaming: It is the component that works on live streaming data to provide real-time analytics. The live data is ingested into discrete units called batches which are executed on Spark Core. Spark SQL: It is the component that works on top of Spark core to run SQL queries on structured or semi-structured data.

What is client mode in YARN?

Client mode: In this mode, the resources are requested from YARN by application master and Spark driver runs in the client process.

What is Spark Core?

Spark Core: It is the foundation of Spark application on which other components are directly dependent. It provides a platform for a wide variety of applications such as scheduling, distributed task dispatching, in-memory processing and data referencing.

What is Spark analytics?

Spark is an open-source framework for running analytics applications. It is a data processing engine hosted at the vendor-independent Apache Software Foundation to work on large data sets or big data. It is a general-purpose cluster computing system that provides high-level APIs in Scala, Python, Java, and R.

Where to download Apache Spark?

Download Apache Spark according to your Hadoop version from https://spark.apache.org/downloads.html

Does Spark have a file system?

Spark does not have its own file system. It processes data from diverse data sources such as Hadoop Distributed File System (HDFS), Amazon’s S3 system, Apache Cassandra, MongoDB, Alluxio, Apache Hive. It can run on Hadoop YARN (Yet Another Resource Negotiator), on Mesos, on EC2, on Kubernetes or using standalone cluster mode.

What happens if a Spark Master node goes down?

This means that if the Spark Master node goes down, the Spark cluster would stop functioning, all currently submitted or running applications would fail, and no new applications could be submitted.

How to open pyspark?

Open the PySpark shell by running the pyspark command in the Terminal from any directory. If Spark has been successfully installed, you should see the following output:

What to do if Java 1.7 is not installed?

If Java 1.7 or higher is not installed, install the Java 1.7 runtime and development environments using Ubuntu’s APT (Advanced Packaging Tool). Alternatively, you could use the Oracle JDK instead:

Where to run start-slave.sh script?

Run the start-slave.sh script from the sbin director y on all of the Spark slave nodes.

Does Spark include Hadoop?

The Spark releases do not actually include Hadoop as the names may imply. They simply include libraries to integrate with the Hadoop clusters and distributions listed. Many of the Hadoop classes are required regardless of whether you are using Hadoop. I will use the spark-1.5.2-bin-hadoop2.6.tgz package for this installation.