Getting Started with AWS Data Pipeline

- Create the Pipeline The quickest way to get started with AWS Data Pipeline is to use a pipeline definition called a template. ...

- Monitor the Running Pipeline After you activate your pipeline, you are taken to the Execution details page where you can monitor the progress of your pipeline. ...

- View the Output Open the Amazon S3 console and navigate to your bucket. ...

- Delete the Pipeline ...

Full Answer

What is a a pipeline in AWS?

A pipeline is the AWS Data Pipeline resource that contains the definition of the dependent chain of data sources, destinations, and predefined or custom data processing activities required to execute your business logic.

How can I use AWS data pipeline to monitor traffic?

For example, you can use AWS Data Pipeline to archive your web server's logs to Amazon Simple Storage Service (Amazon S3) each day and then run a weekly Amazon EMR (Amazon EMR) cluster over those logs to generate traffic reports. AWS Data Pipeline schedules the daily tasks to copy data and the weekly task to launch the Amazon EMR cluster.

Can I use AWS glue with AWS data pipeline?

Also, you can create a workflow from an AWS Glue blueprint, or you can manually build a workflow one component at a time using the AWS Management Console or the AWS Glue API. No. Use an AWS SDK to interact with AWS Data Pipeline or to implement a custom Task Runner.

What happens when an activity fails in AWS data pipeline?

If failures occur in your activity logic or data sources, AWS Data Pipeline automatically retries the activity. If the failure persists, AWS Data Pipeline sends you failure notifications via Amazon Simple Notification Service (Amazon SNS).

See more

What is AWS data pipeline used for?

AWS Data Pipeline is a web service that helps you reliably process and move data between different AWS compute and storage services, as well as on-premises data sources, at specified intervals.

How do I start AWS data pipeline?

Open the AWS Data Pipeline console at https://console.aws.amazon.com/datapipeline/ . The first screen that you see depends on whether you've created a pipeline in the current region. If you haven't created a pipeline in this region, the console displays an introductory screen. Choose Get started now.

How do you activate data pipeline?

From Setup, in the Quick Find box, enter Data Pipelines , then select Getting Started. Note To see this option, you must be assigned the Use Data Pipelines Add On User Settings permission set license and the Data Pipelines Add On permission set. To enable Data Pipelines, select the Data Pipelines option.

Is AWS data pipeline an ETL tool?

AWS Data Pipeline Product Details As a managed ETL (Extract-Transform-Load) service, AWS Data Pipeline allows you to define data movement and transformations across various AWS services, as well as for on-premises resources.

What is the difference between ETL and pipeline?

ETL refers to a set of processes extracting data from one system, transforming it, and loading it into a target system. A data pipeline is a more generic term; it refers to any set of processing that moves data from one system to another and may or may not transform it.

What is data pipeline examples?

A data pipeline is a series of processes that migrate data from a source to a destination database. An example of a technical dependency may be that after assimilating data from sources, the data is held in a central queue before subjecting it to further validations and then finally dumping into a destination.

What is HEVO pipeline?

Hevo is an end-to-end data pipeline platform that enables you to easily pull data from all your sources to the warehouse, run transformations for analytics, and deliver operational intelligence to business tools. Try Hevo for free. 150+ connectors. Zero-maintenance. No credit card required.

Is HEVO cloud based?

Hevo Data Overview The platform supports 150+ ready-to-use integrations across SaaS Applications, Cloud Databases, Cloud Storage, SDKs, and Streaming Services. Over 1000 data-driven companies spread across 45+ countries trust Hevo for their data integration needs.

What is HEVO app?

Hevo is a no-code, bi-directional data pipeline platform specially built for modern ETL, ELT, and Reverse ETL Needs. It helps data teams streamline and automate org-wide data flows that result in a saving of ~10 hours of engineering time/week and 10x faster reporting, analytics, and decision making.

What is the difference between AWS data pipeline and glue?

AWS Glue provides support for Amazon S3, Amazon RDS, Redshift, SQL, and DynamoDB and also provides built-in transformations. On the other hand, AWS Data Pipeline allows you to create data transformations through APIs and also through JSON, while only providing support for DynamoDB, SQL, and Redshift.

Is Amazon S3 a ETL tool?

Modernizing Amazon S3 ETL ETL (Extract, Transform, Load) tools will help you collect your data from APIs and file transfers (the Extract step), convert them into standardized analytics-ready tables (the Transform step) and put them all into a single data repository (the Load step) to centralize your analytics efforts.

How does ETL work with AWS?

How ETL works. ETL is a three-step process: extract data from databases or other data sources, transform the data in various ways, and load that data into a destination. In the AWS environment, data sources include S3, Aurora, Relational Database Service (RDS), DynamoDB, and EC2.

What is AWS DataSync?

AWS DataSync is a secure, online service that automates and accelerates moving data between on premises and AWS Storage services.

How do I transfer data from S3 to DynamoDB?

To import data from S3 to DynamoDBLog in to the console and navigate to the DynamoDB service. ... The Imports from S3 page lists information about any existing or recent import jobs that have been created. ... On Destination table – new table. ... Confirm the choices and settings are correct and choose Import to begin your import.More items...•

What is Amazon CloudSearch?

Amazon CloudSearch is a managed service in the AWS Cloud that makes it simple and cost-effective to set up, manage, and scale a search solution for your website or application. Amazon CloudSearch supports 34 languages and popular search features such as highlighting, autocomplete, and geospatial search.

What is AWS migration hub?

a day agoAWS Migration Hub provides a central location to collect server and application inventory data for the assessment, planning, and tracking of migrations to AWS. Migration Hub can also help accelerate application modernization following migration.

Prerequisites

This tutorial will be a hands-on demonstration but doesn’t require many tools to get started. If you’d like to follow along, be sure you have an AWS account.

Creating Data in a DynamoDB Table

What is the AWS data pipeline anyway? AWS data pipeline is a web service that helps move data within AWS compute and storage services as well as on-premises data sources at specified intervals. And as part of preparations for moving data between sources, you’ll create an AWS data pipeline.

Providing Access to Resources with IAM Policy and Role

You now have the DynamoDB table set up. But to move data to the S3, you’ll create an IAM policy and a role to provide access. Both IAM policy and a role that also allows or denies certain actions on services.

Creating an S3 Bucket

You now have data you can transfer between sources. But where exactly will you transfer the data? Create an S3 bucket that will serve as storage where you can transfer data from your DynamoDB table ( ATA-employee_id ).

Conclusion

In this tutorial, you learned how to move data between sources, specifically with DynamoDB and S3 bucket using the AWS Data Pipeline service. You’ve also touched on setting up IAM roles and policies to provide access for moving data between resources.

What is AWS Data Pipeline?from edureka.co

AWS Data Pipeline is a web service that helps you reliably process and move data between different AWS compute and storage services, as well as on-premises data sources, at specified intervals.

What is data node in AWS?from edureka.co

Data Nodes: In AWS Data Pipeline, a data node defines the location and type of data that a pipeline activity uses as input or output. It supports data nodes like:

What is pipeline action?from edureka.co

Actions: Actions are steps that a pipeline component takes when certain events occur, such as success, failure, or late activities. Send an SNS notification to a topic based on success, failure, or late activities. Trigger the cancellation of a pending or unfinished activity, resource, or data node.

What is a resource in pipeline?from edureka.co

Resources: A resource is a computational resource that performs the work that a pipeline activity specifies.

What is a precondition in a pipeline?from edureka.co

Preconditions: A precondition is a pipeline component containing conditional statements that must be true before an activity can run.

Is data available in multiple formats?from edureka.co

Variety of formats: Data is available in multiple formats. Converting unstructured data to a compatible format is a complex & time-consuming task.

Is managing bulk data expensive?from edureka.co

Time-consuming & costly: Managing bulk of data is time-consuming & a very expensive . A lot of money is to be spent on transform, store & process data.

General

AWS Free Tier includes 750hrs of Micro Cache Node with Amazon ElastiCache.

Functionality

Yes, AWS Data Pipeline provides built-in support for the following activities:

Getting Started

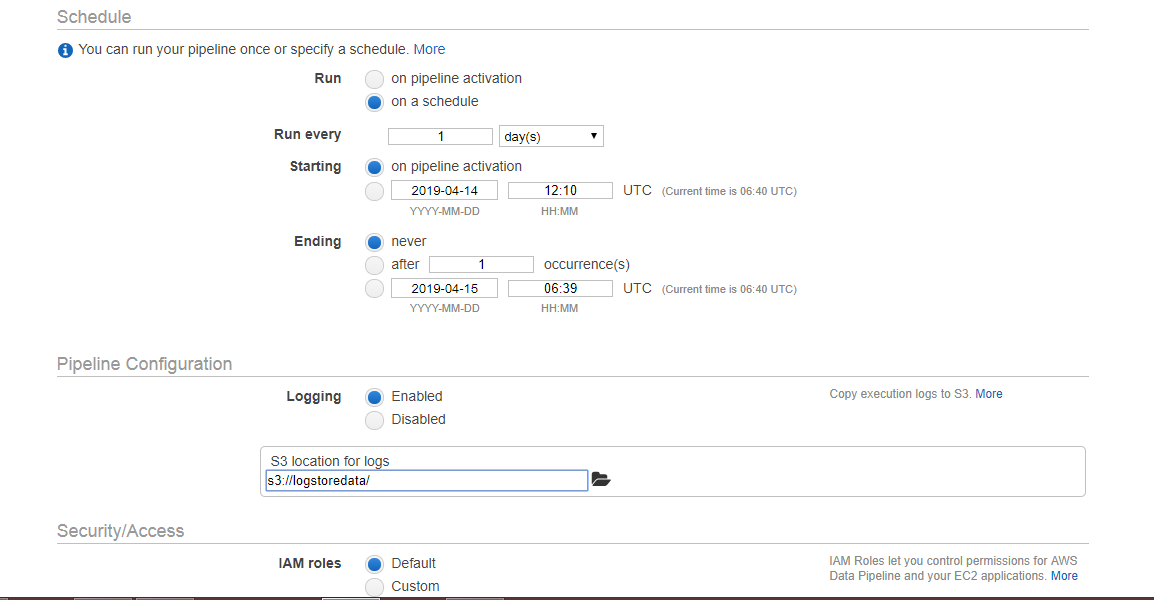

To get started with AWS Data Pipeline, simply visit the AWS Management Console and go to the AWS Data Pipeline tab. From there, you can create a pipeline using a simple graphical editor.

Billing

Except as otherwise noted, our prices are exclusive of applicable taxes and duties, including VAT and applicable sales tax. For customers with a Japanese billing address, use of AWS services is subject to Japanese Consumption Tax. Learn more.

What is AWS Data Pipeline?

In the Amazon Cloud environment, AWS Data Pipeline service makes this dataflow possible between these different services. It enables automation of data-driven workflows.

What is cloud trail in AWS?

AWS CloudTrail captures all API calls for AWS Data Pipeline as events. These events can be streamed to a target S3 bucket by creating a trail from the AWS console. A CloudTrail event represents a single request from any source and includes information about the requested action, the date and time of the action, request parameters, and so on. This information is quite useful when diagnosing a problem on a configured data pipeline at runtime. The log entry format is as follows:

What is action in a pipeline?

Action – Actions are event handlers which are executed in response to pipeline events.

What is an activity in a datanode?

Activities – specify the task to be performed on data stored in datanodes. Different types of activities are provided depending on the application. These include:

What is data node?

DataNodes – represent data stores for input and output data. DataNodes can be of various types depending on the backend AWS Service used for data storage. Examples include:

AWS Glue vs. AWS Data Pipeline – Key Features

Glue provides more of an end-to-end data pipeline coverage than Data Pipeline, which is focused predominantly on designing data workflow. Also, AWS is continuing to enhance Glue; development on Data Pipeline appears to be stalled.

AWS Glue vs. AWS Data Pipeline – Supported Data Sources

Both systems support data throughout the AWS ecosystem. Glue provides broader coverage and greater flexibility for external sources.

AWS Glue vs. AWS Data Pipeline – Transformations

Again. Glue provides broader coverage, including out-of-the-box transformations and more ways to work with ancillary AWS services. However, it’s more complex to deploy and maintain.

AWS Glue vs. AWS Data Pipeline – Pricing

There are many more variations on pricing for AWS Glue than there are for Data Pipeline, due in part to the former’s multiple components. For more information, please see the AWS Glue pricing page and the AWS Data Pipeline pricing page.

Prerequisites

Creating Data in A DynamoDB Table

- What is the AWS data pipeline anyway? AWS data pipeline is a web service that helps move data within AWS compute and storage services as well as on-premises data sources at specified intervals. And as part of preparations for moving data between sources, you’ll create an AWS data pipeline. Since you’ll be using DynamoDB to transfer data to an S3 bu...

Providing Access to Resources with Iam Policy and Role

- You now have the DynamoDB table set up. But to move data to the S3, you’ll create an IAM policy and a roleto provide access. Both IAM policy and a role that also allows or denies certain actions on services. Related:Learning Identity and Access Management (IAM) AWS Through Examples 1. Search for IAM on the dashboard, and click the IAMitem, as shown below to access the IAM das…

Creating An S3 Bucket

- You now have data you can transfer between sources. But where exactly will you transfer the data? Create an S3 bucket that will serve as storage where you can transfer data from your DynamoDB table (ATA-employee_id). 1. Login to your AWS account and enter S3 on the search box, as shown below. Click on the S3item to access the Amazon S3 dashboard. 2. Next, click on …

Conclusion

- In this tutorial, you learned how to move data between sources, specifically with DynamoDB and S3 bucket using the AWS Data Pipeline service. You’ve also touched on setting up IAM roles and policies to provide access for moving data between resources. At this point, you can now transfer various data using the AWS data pipeline. So what’s next for you? Why not build data warehouse…