In most sciences, results yielding a p-value of .05 are considered on the borderline of statistical significance. If the p-value is under .01, results are considered statistically significant and if it's below .005 they are considered highly statistically significant.

Is a p-value of 0.053 significant?

And what about a p-value of 0.053? Dear Ghahraman Mahmoudi, please read my previous answer. With a significance level of alpha = 0.05, a p-value of 0.05 is significant and a p-value of 0.053 is not significant.

What is the cut off for p value for significance?

In the majority of analyses, an alpha of 0.05 is used as the cutoff for significance. If the p-value is less than 0.05, we reject the null hypothesis that there's no difference between the means and conclude that a significant difference does exist. If the p-value is larger than 0.05, we cannot conclude that a significant difference exists.
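This decision rule is a single comparison against alpha. The following is a minimal Python sketch that assumes the p-value has already been computed. The name `is_significant` and its `inclusive` flag are hypothetical. The flag exists only because the answers on this page disagree about a p-value exactly equal to alpha: the strict rule above says it is not significant, while the ResearchGate answer treats it as significant.

```python
def is_significant(p_value: float, alpha: float = 0.05, inclusive: bool = False) -> bool:
    """Return True if the result counts as significant at level alpha.

    inclusive=False applies the strict rule p < alpha.
    inclusive=True applies p <= alpha, under which p = 0.05 is still
    significant at alpha = 0.05. (Hypothetical helper for illustration.)
    """
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must lie in [0, 1]")
    return p_value <= alpha if inclusive else p_value < alpha


# Compare the two conventions on the p-values discussed on this page.
for p in (0.03, 0.05, 0.053):
    print(p, is_significant(p), is_significant(p, inclusive=True))
```

The two conventions differ only at p = alpha. A value such as 0.053 is non-significant at alpha = 0.05 under both of them.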

Is a p-value of 1.00000 significant?

Some of the p-values are significant (i.e. less than 0.05) and some are not. However, several of the p-values equal 1.00000. I assume this means the two things are definitely NOT significantly different, but is this R rounding up from 0.9999 or is a p-value of 1.000 meaningful?

What does a p-value of 0.05 mean?

Yes, a p-value of 0.05 means significant. I know the original question is old, but with some new replies I thought I would make a brief comment. While .05 may be statistically significant, consider that a program like SPSS may not be displaying more than two decimal places.


Is a p-value of 0.005 significant?

If the p-value is under .01, results are considered statistically significant, and if it's below .005 they are considered highly statistically significant.

What if the p-value is exactly 0.05?

If the p-value is 0.05 or lower, the result is trumpeted as significant, but if it is higher than 0.05, the result is non-significant and tends to be passed over in silence.

What does a 0.05 level of significance represent?

For example, a significance level of 0.05 indicates a 5% risk of concluding that a difference exists when there is no actual difference. Lower significance levels indicate that you require stronger evidence before you will reject the null hypothesis.
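The "5% risk" can be checked by simulation. If the null hypothesis is true and every test uses alpha = 0.05, roughly 5% of tests should still reject it. The following Python sketch assumes normally distributed data with a known standard deviation of 1, which allows a simple z-test. The sample size, number of simulations and seed are arbitrary choices made for illustration.

```python
import random
from statistics import NormalDist, fmean


def z_test_p_value(sample, mu0=0.0, sigma=1.0):
    """Two-sided p-value of a one-sample z-test with known sigma."""
    n = len(sample)
    z = (fmean(sample) - mu0) / (sigma / n ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def type_i_error_rate(alpha=0.05, n=30, n_sims=5000, seed=1):
    """Fraction of tests that reject H0 (mean 0) when H0 is actually true."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_sims):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]  # data generated under H0
        if z_test_p_value(sample) < alpha:
            rejections += 1
    return rejections / n_sims


print(type_i_error_rate())  # should come out close to 0.05
```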

Is p 0.001 significant?

Conventionally, p < 0.05 is referred to as statistically significant and p < 0.001 as statistically highly significant.

Is p-value of 0.05 good?

Is a 0.05 P-Value Significant? A p-value less than 0.05 is typically considered to be statistically significant, in which case the null hypothesis should be rejected. A p-value greater than 0.05 means that deviation from the null hypothesis is not statistically significant, and the null hypothesis is not rejected.

Is .054 statistically significant?

A p-value of .054 indicates a non-significant outcome.

Which is better 0.01 or 0.05 significance level?

The degree of statistical significance generally varies depending on the level of significance. For example, a p-value that is less than 0.05 is considered statistically significant, while a figure that is less than 0.01 is viewed as highly statistically significant.

Is p 0.008 statistically significant?

However, the P value of 0.008 would suggest a degree of “significance” to the result that belies its clinical interpretation.

What does a 0.003 p-value mean?

The interpretation of this is that the significance test offers support of the alternative hypothesis (against the null hypothesis). Since the P-value 0.003 is less than the significance level 0.1, the results of the significance test are statistically significant.

Is p 0.03 statistically significant?

After analyzing the sample delivery times collected, the p-value of 0.03 is lower than the significance level of 0.05 (assume that we set this before our experiment), and we can say that the result is statistically significant.

What does a .05 level of significance mean quizlet?

a .05 level of significance means that: there is only a 5 percent chance that a statistic's value could be obtained as a result of random error.

What does a 0.05 significance level correspond with in confidence level?

95%. For example, if your significance level is 0.05, the equivalent confidence level is 95%. Both of the following conditions represent statistically significant results: the p-value in a hypothesis test is smaller than the significance level, and the confidence interval excludes the null hypothesis value.
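The equivalence between the two conditions can be checked directly for a z-test with known sigma. The following Python sketch assumes such a test. The sample values and sigma = 0.4 are made up for illustration. For each null value `mu0`, it reports whether p < 0.05 and whether the 95% interval excludes `mu0`, and the two answers should always agree.

```python
from statistics import NormalDist, fmean


def z_test_and_ci(sample, mu0, sigma, alpha=0.05):
    """Two-sided z-test p-value and the matching (1 - alpha) confidence interval."""
    n = len(sample)
    se = sigma / n ** 0.5
    mean = fmean(sample)
    z = (mean - mu0) / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = 0.05
    ci = (mean - z_crit * se, mean + z_crit * se)  # 95% CI when alpha = 0.05
    return p, ci


sample = [5.3, 4.9, 5.8, 5.6, 5.1, 5.7, 5.4, 5.2]  # hypothetical measurements
for mu0 in (5.0, 5.3):
    p, (lo, hi) = z_test_and_ci(sample, mu0, sigma=0.4)
    # p < alpha and "CI excludes mu0" should be the same True/False value
    print(mu0, round(p, 4), (round(lo, 3), round(hi, 3)), p < 0.05, not lo <= mu0 <= hi)
```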

What does a significant level of 5% mean?

In statistical tests, statistical significance is determined by citing an alpha level, or the probability of rejecting the null hypothesis when the null hypothesis is true. For this example, alpha, or significance level, is set to 0.05 (5%).

What does 5% level of significance mean explain with suitable examples?

Significance level = P(Type I error) = α. Results are written as "significant at x%". Example: "significant at 5%" means that the p-value is less than 0.05 (p < 0.05). Similarly, "significant at 1%" means that the p-value is less than 0.01. The level of significance is usually taken as 0.05, or 5%.

Would a p-value of exactly 0.05 indicate a statistically ... - Quora

I’m dismayed by the number of answers that claim that you cannot get a p-value of exactly 5%. Mathematically, that’s nonsense despite it being claimed by virtually everyone else who chimed in with an answer. I gave a simple example of a scenario in which the p-value is 5% in a co...


How to Interpret a P-Value Less Than 0.05 (With Examples) - Statology

A hypothesis test is used to test whether or not some hypothesis about a population parameter is true. Whenever we perform a hypothesis test, we always define a null and alternative hypothesis: Null Hypothesis (H0): The sample data occurs purely from chance. Alternative Hypothesis (HA): The sample data is influenced by some non-random cause. If the p-value of the hypothesis test is less ...

What is the threshold for type 1 error?

the error made when the data says to reject the null hypothesis but the null is actually true). This threshold is called the significance level and is often denoted α. It is the threshold by which you judge a p-value (also called the attained significance) in the traditional approach to hypothesis testing. (This claim does not mean I advocate the traditional approach. I agree with Peter Flom that “Significance, even if it’s a reasonable question, isn’t one that ought to be answered ‘yes’ and ‘no’. It’s a question of degree.”)

What is the probability of a type 1 error?

(In practice, the p-value can be carried to more places, e.g., 0.04998 or 0.0501.) Choice of the 5% level amounts to a viewpoint of the decision-maker that “I will reject H0 if the chance of making an error thereby is no more than 5%.” So the probability of this error (traditionally called a Type I error) is 0.05, not more. Of course, the decision maker might be thinking “I will reject H0 if the chance of making an error thereby is less than 0.05.” It isn’t less than 0.05, but equal to 0.05.

When you decide the significance level a priori, do you have to take the decision for or against the null?

Having said that, in the spirit of hypothesis testing, once you have decided the significance level a priori, you must take the decision for or against the null hypothesis based on the p-value obtained; otherwise the whole purpose of the test would be defeated.

What does choice 3 mean in math?

Choice 3 means that my test has a 100% chance of a Type II error (i.e. failing to reject a false null hypothesis) so that is not ideal.

What is a P value?

P-values are real numbers from the continuum between 0 and 1. As such there are (in a carefully defined way) uncountably many of them. The result one gets doing a p test is a single number. It might round to 5%, say it is in the range 4.99% to 5.01%, but if you carried the calculation a few more digits you would get a result away from 5%. The point is that there is some finite probability of getting an answer between 4.99% and 5.01%, but there is zero probability of getting exactly 5% to infinite decimal places. Each decimal place that you extend your p-value reduces its probability closer and clo

What number was rolled on a single roll of the die?

Data: On a single roll of the die, the number 4 was rolled.

Do statisticians use p-values?

Statisticians are much less fond of p-values than scientists in general. In 2016, the American Statistical Association released a statement discouraging reliance on a simple, hard cutoff for p-values.

What is the threshold for statistical significance?

For fields where the threshold for defining statistical significance for new discoveries is P < 0.05, we propose a change to P < 0.005. This simple step would immediately improve the reproducibility of scientific research in many fields. Results that would currently be called “significant” but do not meet the new threshold should instead be called “suggestive.” While statisticians have known the relative weakness of using P≈0.05 as a threshold for discovery and the proposal to lower it to 0.005 is not new, a critical mass of researchers now endorse this change.

How many answers do you get when you ask two statisticians for an opinion?

Having said that, you know the old joke. Ask two statisticians for an opinion, and you will get at least three answers.

What does it mean when a test statistic is large?

A large test statistic (or a very negative one) means a small p-value and incompatibility with the null hypothesis.
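The relationship can be seen by converting a few z statistics into two-sided p-values. The following Python sketch assumes a standard normal null distribution (a z-test). As |z| grows, the p-value shrinks, and a very negative z behaves like a very positive one.

```python
from statistics import NormalDist


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a z statistic under a standard normal null."""
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Larger |z| gives a smaller p-value; the sign does not matter for a two-sided test.
for z in (0.5, 1.0, 1.96, 3.0, -3.0):
    print(z, round(two_sided_p(z), 4))
```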

What are the two factors that determine the test statistic?

In general, two factors are common to many formulae for the test statistic: 1) the difference between groups (or difference between observed and expected) - this is in the numerator; and 2) the variability and number of people/objects you studied (this is in the denominator).
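Welch's two-sample t statistic is one common instance of this pattern. The difference in means sits in the numerator, and the variability divided by the sample sizes sits in the denominator. The following Python sketch uses that formula as an example. The small integer groups are made up. Repeating the same data twice leaves the means unchanged but shrinks the denominator, so the statistic grows.

```python
from statistics import fmean, variance


def welch_t(group_a, group_b):
    """Welch two-sample t statistic."""
    diff = fmean(group_a) - fmean(group_b)  # numerator: difference between groups
    # denominator: variability, scaled down by the number of observations
    se = (variance(group_a) / len(group_a) + variance(group_b) / len(group_b)) ** 0.5
    return diff / se


a, b = [5, 6, 7], [3, 4, 5]         # hypothetical data
print(welch_t(a, b))                # difference of 2 with small samples
print(welch_t(a * 2, b * 2))        # same means, more observations: larger t
```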

What is the p-value of a test statistic?

The p-value relates to the test statistic, not "the results of the experiment" (or "the results", as many sources state). It is the probability that the test statistic would be as extreme as, or more extreme than, the value we obtained, if the null hypothesis were in fact true.

What is the correct solution to the goalpost?

The correct solutions are (1) to improve manuscript review quality; (2) to better educate about statistics (make it a required course, dang it!), and (3) to stop the emphasis on publishing in high-profile / high-ranked journals. These days with PubMed and the internet, everyone's going to see your papers and you will have an impact.

What does a p-value of 0.05 mean?

A p-value of 0.05, the traditional threshold, means that if there were no real effect, there would be a 5% chance of obtaining results at least as extreme as those observed. A p-value of 0.005 corresponds to a 0.5% chance, a change from 1 in 20 to 1 in 200. There are major problems with over-reliance on the p-value.

Answer

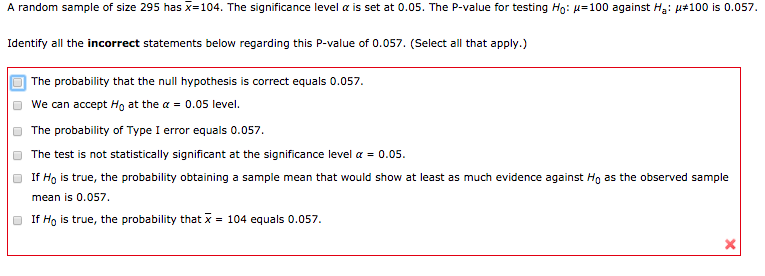

Statistical significance. Asked to explain the meaning of “statistically significant at the α = 0.05 level,” a student says, “This means that the probability that the null hypothesis is true is less than 0.05.” Is this explanation correct? Why or why not?

Video Transcript

A student saw an alpha of 0.05 and said that this means that the probability that the null hypothesis is true is less than 0.05. Now, that's a misinterpretation of what this alpha value stands for. The null hypothesis is either true or not true. It doesn't change or fluctuate.

Why is it true that statistics are as much about interpretation and presentation as it is mathematics?

It rings true because statistics really is as much about interpretation and presentation as it is mathematics. That means we human beings who are analyzing data, with all our foibles and failings, have the opportunity to shade and shadow the way results get reported.

How many ways have contributors to scientific journals used language to obscure their results?

Which brings me back to the blog post I referenced at the beginning. Do give it a read, but the bottom line is that the author cataloged 500 different ways that contributors to scientific journals have used language to obscure their results (or lack thereof).


When do you find p-values?

Most of us first encounter p-values when we conduct simple hypothesis tests, although they are also integral to many more sophisticated methods. Let's use Minitab Statistical Software to do a quick review of how they work. We're going to compare fuel consumption for two different kinds of furnaces to see if there's a difference between their means.

Is p-value statistically significant?

It's still not statistically significant, and data analysts should not try to pretend otherwise. A p-value is not a negotiation: if p > 0.05, the results are not significant. Period.

Is p-value misinterpreted?

P-values are frequently misinterpreted, which causes many problems. I won't rehash those problems here, since we rebutted the concerns over p-values in Part 1. But the fact remains that the p-value will continue to be one of the most frequently used tools for deciding whether a result is statistically significant.

Does the blogger address the question of whether the opposite situation occurs?

The blogger does not address the question of whether the opposite situation occurs. Do contributors ever write that a p-value of, say, 0.049999 is:

Why do we need to specify alpha and beta?

In A/B testing, one needs to specify alpha and beta in order to determine the sample size required to allow a decision between A and B with the desired confidences (1-alpha is the confidence in accepting B and 1-beta is the confidence in accepting A). So this must necessarily have been done before doing the experiment. The test only tells you whether your data implies that you should accept A or that you should accept B. This concept does NOT aim at rejecting some (null) hypothesis.
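The following Python sketch shows how alpha and beta together fix the sample size before the experiment. It assumes a two-sided two-sample z-test with a known common standard deviation and uses the normal-approximation formula n = 2((z₁₋α/₂ + z₁₋β)·σ/δ)² per group. The function name and the example values of δ and σ are hypothetical. t-based calculations in planning software usually give a slightly larger n.

```python
import math
from statistics import NormalDist


def n_per_group(delta, sigma, alpha=0.05, beta=0.20):
    """Approximate per-group sample size for a two-sided two-sample z-test.

    delta: smallest difference in means worth detecting (the substantive alternative)
    sigma: assumed common standard deviation
    alpha: accepted Type I error rate; beta: accepted Type II error rate
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(1 - beta)
    n = 2 * ((z_alpha + z_beta) * sigma / delta) ** 2
    return math.ceil(n)  # round up to whole subjects


print(n_per_group(delta=0.5, sigma=1.0))             # alpha = 0.05, beta = 0.20
print(n_per_group(delta=0.5, sigma=1.0, beta=0.10))  # demanding more power needs more subjects
```

Changing alpha after the data are in would imply a different n than the one actually collected, which is the point made above.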

What does it mean when you don't reject the null hypothesis?

If you don't reject the null hypothesis, your statement is that you don't have enough data to dare to conclude the direction. You say that your data is not sufficiently conclusive. 2) The p-value at which you choose to reject the null is your decision.

What is significance test?

In contrast, a significance test evaluates the probability of the data under some hypothesis (the "p-value"). If this is considered too unlikely, then one is willing to believe that the hypothesis is not suited to describe the data, so it is rejected or nullified (therefore "null hypothesis"!). The implication is that this means that the data is sufficient to conclude whether the effect (e.g. an expected difference between two groups or a slope) is deemed positive or negative. Here it is irrelevant if the "degree of unlikeliness" (a limit of p so that for lower values one would reject the hypothesis) is fixed before the experiment or thought of after the experiment.

What does p=0.05 mean?

In this case, p=0.05 means only that you have no sufficient data to reject H0.

Can you perform null hypothesis significance testing?

You can perform null-hypothesis-significance testing and decide to accept or reject the null hypothesis that B is not different from A . Another homework question answered.

Is the null hypothesis accepted?

1) The null hypothesis is NEVER accepted. The experiment and the test only aims to reject the null hypothesis. Being able to reject the null means that you have enough data to be sufficiently confident at least about the direction of the effect.

Can a hypothesis be nullified in a significance test?

Unfortunately, the hypothesis A in A/B testing could be formulated as a hypothesis that one would try to nullify in a significance test, and the acceptance of B can then be evaluated by comparing the empirical p-value to alpha (B is accepted when p < alpha). Here, alpha must have been known to plan the study. It is impossible to set alpha afterwards because this would have required a different sample size. However, the whole procedure makes no sense if B and beta are not specified and the sample size does not ensure the confidences of the decisions. This is completely mixed up in almost all stats books for practitioners and taught wrongly in almost all stats courses for non-statisticians (usually under the name "null-hypothesis significance testing", NHST).

What does a p-value of 1.0 mean?

In particular, as nicely described by @david-robinson in http://varianceexplained.org/statistics/interpreting-pvalue-histogram/, p-values close to 1.0 may indicate that you have been applying a one-sided test when maybe you wanted a two-sided test or may be caused by missing values in your data, distorting the results of the test. Another option (as @Cliff-AB mentions) is the Bonferroni correction that you are applying, which seems the most plausible cause.
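Both of the causes mentioned above are easy to reproduce numerically. The following Python sketch uses made-up p-values and a made-up z statistic. The Bonferroni adjustment multiplies each p-value by the number of tests and caps the result at 1, so several adjusted values can print as exactly 1.00000. An upper-tailed test applied to an effect in the opposite direction gives a p-value close to 1.

```python
from statistics import NormalDist


def bonferroni(p_values):
    """Bonferroni-adjusted p-values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def one_sided_upper_p(z):
    """p-value for the alternative 'effect > 0' (upper-tailed z-test)."""
    return 1 - NormalDist().cdf(z)


print(bonferroni([0.001, 0.02, 0.3, 0.6]))  # the last two are capped at 1.0
print(round(one_sided_upper_p(-2.5), 4))    # effect in the "wrong" direction
```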


When is a p-value of 1 on a paired t-test?

If the data are discrete, it's possible to get an exact p-value of 1 on a paired t-test, when the mean difference is exactly 0.
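When the mean difference is exactly 0, t = 0. The two-sided p-value P(|T| ≥ |t|) is then 1 for any symmetric reference distribution, whatever the degrees of freedom. The following Python sketch uses made-up integer scores. It converts t to a p-value using the standard normal as a stand-in for the t distribution, only because the Python standard library has no t CDF. At t = 0 the substitution makes no difference.

```python
from statistics import NormalDist, fmean, stdev


def paired_t(before, after):
    """Paired t statistic: mean of the differences over its standard error."""
    diffs = [a - b for a, b in zip(after, before)]
    return fmean(diffs) / (stdev(diffs) / len(diffs) ** 0.5)


def two_sided_p_normal(t):
    """Two-sided tail probability; normal used as a stand-in for the t distribution."""
    return 2 * (1 - NormalDist().cdf(abs(t)))


before = [3, 4, 2, 5, 4]   # hypothetical discrete scores
after = [4, 3, 4, 3, 4]    # differences 1, -1, 2, -2, 0 average exactly 0
t = paired_t(before, after)
print(t, two_sided_p_normal(t))
```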

What is an alpha in statistics?

The term "alpha" comes from hypothesis tests and denotes the accepted rate of Type I errors in a decision-strategic approach. The decision is made between two alternative hypotheses, where one of these hypotheses is a statistical null hypothesis H0 (a mere technical requirement for the probability calculus) and the other is a substantive alternative hypothesis HA. The aim is to use data to make an informed, or in some way "optimal", decision between two COURSES OF ACTION, where one course is to ACT AS IF H0 were the case and the other is to ACT AS IF HA were the case. These actions are associated with consequences, and one must consider and somehow weigh the expected possible wins and losses of these actions, which are evaluated according to the selected substantive alternative (i.e. some non-zero effect size that is considered relevant for the actions to be taken). This requires some planning, and giving alpha without also giving beta (the accepted rate of Type II errors) is again nonsensical. The whole strategy makes sense only when a substantive HA is defined and when reasonable values of alpha AND beta are selected according to the expected wins and losses of the possible actions. This strategy does not actually calculate a p-value. It is completely sufficient to determine the "(non-)rejection region of H0" for a test statistic that is derived from the data. If the observed statistic is inside the rejection region, the decision is to ACT AS IF HA were the case. Otherwise, the decision is to ACT AS IF H0 were the case. The whole thing is not about a null hypothesis or about accepting or rejecting hypotheses. It is about actions. Only about actions and their possible consequences.
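The following Python sketch shows how a decision can be made from a rejection region without computing a p-value. It assumes a two-sided z-test, and the function name and action labels are hypothetical. Alpha fixes the boundary of the region. Beta does not appear here, because in this approach beta enters at the planning stage, through the sample size, rather than in the final comparison.

```python
from statistics import NormalDist


def decide(z_observed, alpha=0.05):
    """Decision from a two-sided z-test rejection region; no p-value is computed."""
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # boundary of the rejection region
    if abs(z_observed) > z_crit:                  # statistic falls inside the region
        return "act as if HA"
    return "act as if H0"


print(decide(2.5))              # outside +/-1.96
print(decide(1.0))              # inside +/-1.96
print(decide(2.0, alpha=0.01))  # stricter alpha widens the non-rejection region
```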

What does p=.03 mean in a t-test?

Let's take a t-test. In simple language, the p-value of a t-test tells me the probability that the mean of population A is similar to that of population B. If p = .03, this tells me that there is a 3% probability that the means of my two populations are similar (setting aside whether this is actually true or not). It does not tell me anything about the probability that I am wrong.

How to simulate p-value?

I can simply simulate what the p-value does by randomly resampling population A and population B of the first example 1000 times. Each time, I check whether the mean of population A is smaller than that of population B. The percentage of resamples in which A is smaller than B is then simply my p-value. This shows me that, when the populations are randomly resampled, the mean of population A is smaller than that of population B 0.4% of the time (Fig 2). The t-test returns a p-value of 0.0087. These are very close (they vary due to the random resampling). One can easily see that if you increase the sample size, the variation of the means gets smaller. Also, why should I be interested in the variation of the mean under random reorganisation of both populations?
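The following Python sketch implements the resampling procedure described above. Each population is resampled with replacement (a bootstrap), and the code counts how often the resampled mean of A falls below that of B. The data, function name and seed are hypothetical. This bootstrap fraction is not the same quantity as a t-test p-value, which is computed under the assumption that H0 is true, so the two numbers need not coincide.

```python
import random
from statistics import fmean


def resampled_fraction_a_below_b(pop_a, pop_b, n_resamples=1000, seed=0):
    """Bootstrap each group; return the fraction of resamples where mean(A) < mean(B)."""
    rng = random.Random(seed)
    count = 0
    for _ in range(n_resamples):
        a = rng.choices(pop_a, k=len(pop_a))  # resample A with replacement
        b = rng.choices(pop_b, k=len(pop_b))  # resample B with replacement
        if fmean(a) < fmean(b):
            count += 1
    return count / n_resamples


pop_a = [12.1, 11.8, 12.6, 13.0, 12.4, 12.9, 12.2, 12.7]  # hypothetical data
pop_b = [11.2, 11.9, 11.5, 12.0, 11.4, 11.7, 11.1, 11.8]
print(resampled_fraction_a_below_b(pop_a, pop_b))
```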

What is the chance that this person has the disease?

Now we simply use the test on some person. The test is positive. What is the chance that this person has the disease? This depends on the prevalence of the disease. If the prevalence is tiny, the chance that this person has the disease is still very small. If the prevalence is moderate, the chance that this person has the disease is large. When we do not know the prevalence, we cannot say what the chance is that the tested person has the disease.
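The dependence on prevalence follows from Bayes' theorem. The following Python sketch assumes a test with 99% sensitivity and 99% specificity, values chosen only for illustration. It computes the probability of disease given a positive result (the positive predictive value) at several prevalences.

```python
def positive_predictive_value(prevalence, sensitivity, specificity):
    """P(disease | positive test) via Bayes' theorem."""
    true_pos = prevalence * sensitivity
    false_pos = (1 - prevalence) * (1 - specificity)  # 1 - specificity plays the role of alpha
    return true_pos / (true_pos + false_pos)


# Same test, different prevalences (assumed sensitivity = specificity = 0.99).
for prev in (0.001, 0.05, 0.3):
    print(prev, round(positive_predictive_value(prev, 0.99, 0.99), 3))
```

With a prevalence of 1 in 1000, most positives are false positives even for this accurate test. At a prevalence of 30%, nearly all of them are true.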


How does a diagnostic test work?

Consider a diagnostic test. The test works just like a hypothesis test and has a known specificity and sensitivity. From these properties, one can derive the probability that the test gives a 'positive' result when the patient is actually healthy (a false-positive result). This probability is denoted by "alpha" in hypothesis tests. A good test surely has a low "alpha". For our example, let's say alpha is 1%. This means: if 1000 healthy (!) persons are tested, we expect about 10 tests to turn out (falsely) positive and 990 (correctly) negative.
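The "about 10 in 1000" figure is the expected count n × alpha. Any single batch of 1000 healthy people will vary around it. The following Python sketch simulates this under the assumption that each healthy person independently tests positive with probability alpha = 0.01. The function name and seeds are hypothetical.

```python
import random


def false_positives_among_healthy(n_healthy=1000, alpha=0.01, seed=42):
    """Count positives when everyone tested is healthy (each positive with prob. alpha)."""
    rng = random.Random(seed)
    return sum(rng.random() < alpha for _ in range(n_healthy))


expected = 1000 * 0.01  # 10 false positives on average
print(expected, false_positives_among_healthy())
```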

What is the significance of a p-value?

The term "significant" comes from significance tests that use just one hypothesis and calculate a p-value, which is a measure of the "(statistical!) significance" of the data (given the model and the assumed hypothesis). The smaller p is, the more significant the data. There is no cut-off and no general rule for how to use this information. It must be interpreted in the context of the experiment. Dividing results into "significant" vs. "non-significant" is nonsensical. Significances are "shades of gray", nothing like black-and-white.