How do I install Hadoop?

To install Hadoop:

- Step 1: Download the Java 8 package.

- Step 2: Extract the Java tar file.

- Step 3: Download the Hadoop 2.7.3 package.

- Step 4: Extract the Hadoop tar file.

- Step 5: Add the Hadoop and Java paths to the bash file.

- Step 6: Edit the Hadoop configuration files.

- Step 7: Open core-site…
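Steps 6 and 7 involve editing Hadoop's XML configuration files, starting with core-site.xml. As a minimal sketch, assuming a single-node setup whose NameNode listens on `hdfs://localhost:9000` (a value commonly used in single-node guides; adjust it for your cluster), the Python snippet below renders a minimal core-site.xml that sets the `fs.defaultFS` property. The helper name `build_site_xml` is hypothetical and not part of Hadoop.

```python
import xml.etree.ElementTree as ET


def build_site_xml(properties: dict[str, str]) -> str:
    """Render a Hadoop-style *-site.xml document from name/value pairs.

    Each entry becomes <property><name>...</name><value>...</value></property>
    inside a single <configuration> root element.
    """
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


# Assumption: single-node setup with the NameNode on localhost:9000.
core_site = build_site_xml({"fs.defaultFS": "hdfs://localhost:9000"})

# In a real install this text would be saved as etc/hadoop/core-site.xml
# under the Hadoop directory; here it is only printed.
print(core_site)
```

Each Hadoop `*-site.xml` file uses the same `<configuration>`/`<property>` layout, so the same helper could render entries for hdfs-site.xml as well.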

What are the main features of Hadoop?

Hadoop's key features are that it is a cost-effective system and that it supports large clusters of nodes, parallel processing, distributed data, automatic failover management, data-locality optimization, heterogeneous clusters, and scalability.

What are the pre-requisites to learn Hadoop?

Skills needed to learn Hadoop include:

- Programming skills. Hadoop requires familiarity with a variety of programming languages, depending on the function you want it to perform.

- SQL knowledge. SQL proficiency is required regardless of the role you wish to pursue in Big Data.

- Linux.

What are the alternatives to Hadoop?

Top alternatives and competitors to Hadoop HDFS include:

- Google BigQuery, for analyzing Big Data in the cloud

- Databricks Lakehouse Platform

- Cloudera

- Hortonworks Data Platform

- Snowflake

- Microsoft SQL Server

- Google Cloud Dataproc

- Vertica


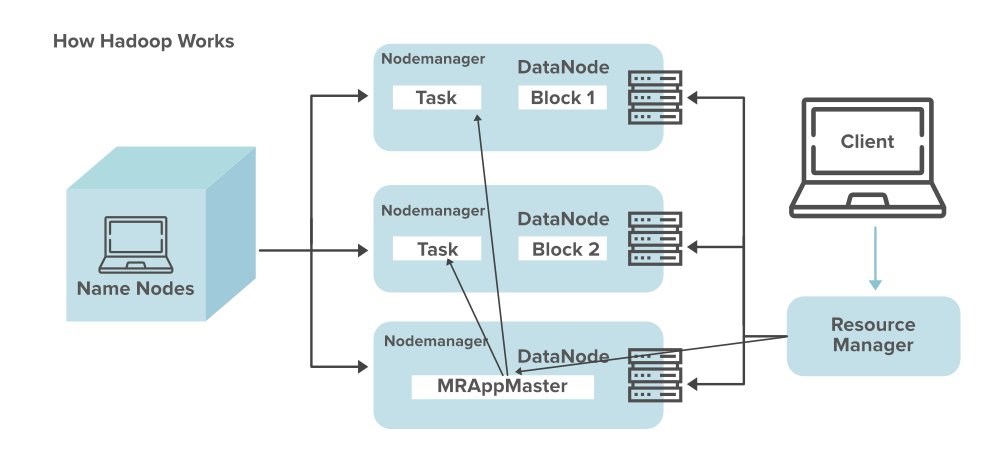

How Hadoop Works

Hadoop makes it easier to use all the storage and processing capacity in cluster servers, and to execute distributed processes against huge amounts of data. Hadoop provides the building blocks on which other services and applications can be built.

Running Hadoop on AWS

Amazon EMR is a managed service that lets you process and analyze large datasets using the latest versions of big data processing frameworks such as Apache Hadoop, Spark, HBase, and Presto on fully customizable clusters.

Why is Hadoop useful?

The modest cost of commodity hardware makes Hadoop useful for storing and combining many kinds of data, such as transactional, social media, sensor, machine, scientific, and clickstream data. The low-cost storage lets you keep information that is not currently deemed critical but that you might want to analyze later.

Who manages Hadoop?

Today, Hadoop’s framework and ecosystem of technologies are managed and maintained by the non-profit Apache Software Foundation (ASF), a global community of software developers and contributors.

How to get data into Hadoop?

There are several ways to get data into Hadoop:

1. Use third-party vendor connectors (like SAS/ACCESS® or SAS Data Loader for Hadoop).
2. Use Sqoop to import structured data from a relational database to HDFS, Hive and HBase. It can also extract data from Hadoop and export it to relational databases and data warehouses.
3. Use Flume to continuously load data from logs into Hadoop.
4. Load files to the system using simple Java commands.
5. Create a cron job to scan a directory for new files and "put" them in HDFS as they show up. This is useful for things like downloading email at regular intervals.
6. Mount HDFS as a file system and copy or write files there.
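The cron-job option (scan a directory and "put" new files into HDFS) can be sketched in Python. This is a minimal sketch, not a production loader: it assumes the `hdfs` command-line client is on the PATH, and it remembers which files were already uploaded in a local state file (the name `uploaded.txt` in the test, like the function name `scan_and_put`, is hypothetical).

```python
import os
import subprocess
from typing import Callable


def load_uploaded(state_file: str) -> set[str]:
    """Read the paths recorded as uploaded by earlier runs (one per line)."""
    if not os.path.exists(state_file):
        return set()
    with open(state_file) as f:
        return {line.strip() for line in f if line.strip()}


def scan_and_put(local_dir: str, hdfs_dir: str, state_file: str,
                 run: Callable = subprocess.run) -> list[list[str]]:
    """Put files that appeared in local_dir since the last run into hdfs_dir.

    Returns the `hdfs dfs -put` commands that were executed. Each path is
    recorded in state_file only after its upload succeeds, so a file whose
    upload fails is retried on the next cron invocation.
    """
    uploaded = load_uploaded(state_file)
    state_path = os.path.abspath(state_file)
    executed = []
    for name in sorted(os.listdir(local_dir)):
        path = os.path.abspath(os.path.join(local_dir, name))
        # Skip subdirectories, the state file itself, and files already sent.
        if not os.path.isfile(path) or path == state_path or path in uploaded:
            continue
        cmd = ["hdfs", "dfs", "-put", path, hdfs_dir]
        run(cmd, check=True)  # with subprocess.run, raises if the upload fails
        with open(state_file, "a") as f:
            f.write(path + "\n")
        executed.append(cmd)
    return executed
```

A script that calls `scan_and_put` could then be scheduled from crontab (for example every five minutes). The `run` parameter exists so the upload step can be replaced with a stub when testing without a cluster.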

What are the core modules of Apache Hadoop?

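Currently, four core modules are included in the basic framework from the Apache Software Foundation:

- Hadoop Common – the libraries and utilities used by other Hadoop modules.

- Hadoop Distributed File System (HDFS) – the Java-based scalable system that stores data across multiple machines without prior organization.

- YARN (Yet Another Resource Negotiator) – resource management for the processes running on Hadoop.

- MapReduce – a parallel processing software framework.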

Why is Hadoop used for analytics?

Because Hadoop was designed to handle large volumes of data in a variety of shapes and forms, it is well suited to running analytical algorithms over that data. Big data analytics on Hadoop can help your organization operate more efficiently, uncover new opportunities and derive next-level competitive advantage. Using Hadoop as a low-cost sandbox provides an opportunity to innovate with minimal investment.

How does Hadoop help the IoT?

At the core of the IoT is a streaming, always-on torrent of data. Hadoop is often used as the data store for millions or billions of transactions. Its massive storage and processing capabilities also allow you to use Hadoop as a sandbox for discovering and defining patterns to be monitored for prescriptive instruction. You can then continuously improve these instructions, because Hadoop is constantly being updated with new data that doesn't match previously defined patterns.

What is Bloor Group's report on Hadoop?

The Bloor Group's report introduces the Hadoop ecosystem and explores the evolution of, and deployment options for, Hadoop. It includes a detailed history and tips on how to choose a distribution for your needs.

How was Hadoop developed?

Hadoop was born out of a need to process increasingly large volumes of Big Data and was inspired by Google’s MapReduce, a programming model that divides an application into smaller components to run on different server nodes. Unlike the proprietary data warehouse solutions that were prevalent at the time it was introduced, Hadoop makes it possible for organizations to analyze and query large data sets in a scalable way using free, open-source software and off-the-shelf hardware. It enables companies to store and process their Big Data with lower costs, greater scalability, and increased computing power, fault tolerance, and flexibility. Hadoop also paved the way for further developments in Big Data analytics, such as Apache Spark.

Why is Hadoop useful?

Because Hadoop detects and handles failures at the application layer rather than the hardware layer, it can deliver high availability on top of a cluster of computers, even though the individual servers may be prone to failure.

What are the benefits of Hadoop?

Hadoop has five significant advantages that make it particularly useful for Big Data projects. Hadoop is:

What is Hadoop software?

Hadoop is a software framework that can achieve distributed processing of large amounts of data in a way that is reliable, efficient, and scalable, relying on horizontal scaling to improve computing and storage capacity by adding low-cost commodity servers.

What is Hadoop used for?

The Hadoop platform is mainly for offline batch applications and is typically used to schedule batch tasks on static data. The computing process is relatively slow: some queries may take hours or even longer to return results, so Hadoop is ill-suited to applications and services with real-time requirements.

What is the purpose of HDFS in Hadoop?

HDFS is the module responsible for reliably storing data across multiple nodes in the cluster and for replicating that data to provide fault tolerance. Raw data, intermediate results of processing, processed data and final results are all stored in the Hadoop cluster. HDFS is composed of a master node, known as the NameNode; a Secondary NameNode, which periodically checkpoints the NameNode's metadata (it is not a failover node; high availability is provided by a separate Standby NameNode); and worker nodes called DataNodes, which store the data blocks (processing tasks typically run on these same machines). The NameNode is a fundamental component of HDFS: it maintains the file system namespace, keeping an up-to-date directory tree of all files stored on the cluster, metadata about those files, and the locations of their blocks in the cluster. Data is stored in blocks on the Hadoop cluster, i.e., HDFS is a block-based storage system (the default block size was 64 MB in Hadoop 1 and is 128 MB in Hadoop 2 and later). However, Hadoop can also integrate with cloud object stores such as OpenStack Swift, Amazon S3 (via the S3A connector), and Azure Blob Storage (via the WASB connector).
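To make the block model concrete, here is a small arithmetic sketch in Python, assuming the 128 MB block size and replication factor of 3 that recent Apache Hadoop releases use by default (both are configurable through `dfs.blocksize` and `dfs.replication`). The function names are illustrative only.

```python
MB = 1024 * 1024


def split_into_blocks(file_size: int, block_size: int = 128 * MB) -> list[int]:
    """Return the size of each HDFS block a file of file_size bytes occupies.

    Every block is block_size bytes except possibly the last, which holds only
    the remainder (HDFS does not pad the final block).
    """
    sizes = []
    remaining = file_size
    while remaining > 0:
        sizes.append(min(block_size, remaining))
        remaining -= sizes[-1]
    return sizes


def raw_storage(file_size: int, replication: int = 3) -> int:
    """Bytes consumed across all DataNodes when every block is replicated."""
    return file_size * replication


blocks = split_into_blocks(300 * MB)
print([b // MB for b in blocks])          # [128, 128, 44]
print(raw_storage(300 * MB) // MB, "MB")  # 900 MB
```

A 300 MB file therefore becomes three blocks, and with three replicas of each block the cluster stores about 900 MB of raw data for it.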

What is MapReduce used for?

The MapReduce programming model makes it easy to parallelize many general batch data-processing tasks and operations across a large cluster, with automatic failover. Led by open-source software such as Hadoop, the MapReduce programming model has been widely adopted and is applied to web search, fraud detection, and a variety of other practical applications.

Why do we need HBase?

Why do we need HBase when the data is stored in the HDFS file system, which is the core data storage layer within Hadoop? For operations other than MapReduce execution and operations that aren’t easy to work with in HDFS, and when you need random access to data, HBase is very useful. HBase satisfies two types of use cases:

Which Cloudera component supports interactive SQL?

Cloudera Impala supports interactive SQL queries of data in HDFS (Hadoop Distributed File System) and HBase through popular BI tools.

What happens when one node fails in Hadoop?

Hadoop also has a very high degree of fault tolerance: if one node in the cluster fails, its processing tasks are redistributed among the other nodes, and because multiple copies of the data are stored across the cluster, the data remains available.
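The failover behavior can be illustrated with a toy scheduler. This is a simplified sketch, not Hadoop's actual scheduling code: the function `reassign_tasks` and the node and task names are hypothetical, and it ignores racks, node capacity, and timeouts. It shows why replicas matter: a task from a failed node is preferably moved to a surviving node that already holds a copy of its input block.

```python
def reassign_tasks(assignments: dict[str, str],
                   replicas: dict[str, list[str]],
                   failed: str) -> dict[str, str]:
    """Move every task off a failed node.

    assignments maps task -> node; replicas maps task -> nodes holding a copy
    of that task's input block. A displaced task goes to the least-loaded
    surviving replica holder if there is one, otherwise to the least-loaded
    surviving node.
    """
    live = {n for n in assignments.values() if n != failed}
    live |= {n for nodes in replicas.values() for n in nodes if n != failed}
    if not live:
        raise RuntimeError("no surviving nodes to take over the work")

    # Current number of tasks on each surviving node.
    load = {n: 0 for n in live}
    for node in assignments.values():
        if node != failed:
            load[node] += 1

    result = {}
    for task, node in sorted(assignments.items()):
        if node != failed:
            result[task] = node  # unaffected tasks stay where they are
            continue
        local = [n for n in replicas.get(task, []) if n != failed]
        pool = local or sorted(live)
        target = min(pool, key=lambda n: (load[n], n))  # ties broken by name
        load[target] += 1
        result[task] = target
    return result


assignments = {"t1": "n1", "t2": "n2", "t3": "n3", "t4": "n1"}
replicas = {"t1": ["n1", "n3"], "t2": ["n2", "n1"], "t3": ["n3", "n2"], "t4": ["n1"]}
print(reassign_tasks(assignments, replicas, failed="n1"))
```

Here `t1` moves to `n3`, which already has a replica of its input, while `t4` (whose only copy was on the failed node in this toy data) falls back to the least-loaded survivor.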

What is Hadoop used for?

Hadoop provides a software framework for distributed storage and processing of big data using the MapReduce programming model. Hadoop was originally designed for computer clusters built from commodity hardware, which is still the common use. It has since also found use on clusters of higher-end hardware.

How does Hadoop work?

Hadoop works directly with any distributed file system that can be mounted by the underlying operating system by simply using a file:// URL; however, this comes at a price – the loss of locality. To reduce network traffic, Hadoop needs to know which servers are closest to the data, information that Hadoop-specific file system bridges can provide.
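The benefit of knowing where data lives shows up in task placement. The sketch below assumes a simplified three-level preference (a node holding the block, then a node in the same rack as a replica, then any free node); the function `pick_node` and the node and rack names are hypothetical, and real Hadoop schedulers consider more factors.

```python
def pick_node(block_hosts: set[str], rack_of: dict[str, str],
              free_nodes: list[str]) -> tuple[str, str]:
    """Choose where to run a task that reads one block.

    Preference order: a free node holding the block (node-local), then a free
    node in the same rack as a replica (rack-local), then any free node.
    Returns (node, locality_level).
    """
    for node in free_nodes:
        if node in block_hosts:
            return node, "node-local"  # no network transfer needed

    replica_racks = {rack_of[h] for h in block_hosts if h in rack_of}
    for node in free_nodes:
        if rack_of.get(node) in replica_racks:
            return node, "rack-local"  # transfer stays inside one rack

    if free_nodes:
        return free_nodes[0], "off-rack"  # data must cross racks
    raise RuntimeError("no free nodes")


rack_of = {"n1": "r1", "n2": "r1", "n3": "r2"}
print(pick_node({"n1"}, rack_of, ["n3", "n2"]))
```

With a plain `file://` mount the scheduler would have no `block_hosts` information, so every placement would effectively fall into the last case.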

What language is Hadoop?

The Hadoop framework itself is mostly written in the Java programming language, with some native code in C and command line utilities written as shell scripts.

What is Hadoop MapReduce?

Hadoop MapReduce – an implementation of the MapReduce programming model for large-scale data processing.

How does a Hadoop cluster work?

Each DataNode serves up blocks of data over the network using a block protocol specific to HDFS. The file system uses TCP/IP sockets for communication, and clients use remote procedure calls (RPC) to communicate with the NameNode.

What is the core of Apache Hadoop?

The core of Apache Hadoop consists of a storage part, known as the Hadoop Distributed File System (HDFS), and a processing part, which is a MapReduce programming model. Hadoop splits files into large blocks and distributes them across nodes in a cluster.

What is the difference between Hadoop 1 and Hadoop 2?

The biggest difference between Hadoop 1 and Hadoop 2 is the addition of YARN (Yet Another Resource Negotiator), which replaced the resource-management part of the MapReduce engine (the JobTracker and TaskTrackers) in the first version of Hadoop. YARN strives to allocate cluster resources to various applications effectively.

What is Apache Hadoop?

Overview: Apache Hadoop is an open-source framework intended to make interaction with big data easier. For those who are not acquainted with this technology, one question arises: what is big data? Big data is a term for data sets that can't be processed efficiently with traditional methodologies such as an RDBMS. Hadoop has made its place in industries and companies that need to work on large data sets that are sensitive and need efficient handling. Hadoop is a framework that enables processing of large data sets distributed across clusters of computers. Being a framework, Hadoop is made up of several modules that are supported by a large ecosystem of technologies.

What is HDFS in Hadoop?

HDFS is the primary component of the Hadoop ecosystem and is responsible for storing large data sets of structured or unstructured data across various nodes, while maintaining the metadata in the form of log files. HDFS consists of two core components: the NameNode and the DataNode.

What is HDFS in a cluster?

HDFS coordinates data storage across the cluster's nodes and their hardware, which puts it at the heart of the system.

What is MapReduce used for?

By using distributed and parallel algorithms, MapReduce carries the processing logic over to the nodes that hold the data and helps you write applications that transform big data sets into manageable ones.

Why is Pig important in Hadoop?

Pig provides ease of programming and opportunities for automatic optimization, which makes it a major part of the Hadoop ecosystem.

Which is better: Hadoop or Spark?

Spark is best suited to real-time data processing, whereas Hadoop is best suited to batch processing; because they serve different workloads, many companies use both.

Purpose

- Hadoop is an open-source software framework for storing data and running applications on clusters of commodity hardware. It provides massive storage for any kind of data, enormous processing power and the ability to handle virtually limitless concurrent tasks or jobs.

Development

- As the World Wide Web grew in the late 1990s and early 2000s, search engines and indexes were created to help locate relevant information amid the text-based content. In the early years, search results were returned by humans. But as the web grew from dozens to millions of pages, automation was needed. Web crawlers were created, many as university-led research projects, a…

Projects

- One such project was an open-source web search engine called Nutch, the brainchild of Doug Cutting and Mike Cafarella. They wanted to return web search results faster by distributing data and calculations across different computers so multiple tasks could be accomplished simultaneously. During this time, another search engine project called Google was in progress. I…

Criticism

- MapReduce programming is not a good match for all problems. It's good for simple information requests and problems that can be divided into independent units, but it's not efficient for iterative and interactive analytic tasks. MapReduce is file-intensive. Because the nodes don't intercommunicate except through sorts and shuffles, iterative algorithms require multiple map-s…

Security

- Data security. Another challenge centers around the fragmented data security issues, though new tools and technologies are surfacing. The Kerberos authentication protocol is a great step toward making Hadoop environments secure.

Software

- Hadoop Distributed File System (HDFS) – the Java-based scalable system that stores data across multiple machines without prior organization. Other software components that can run on top of or alongside Hadoop and have achieved top-level Apache project status include: Open-source software is created and maintained by a network of developers from around the world. It's free t…

Resources

- YARN (Yet Another Resource Negotiator) provides resource management for the processes running on Hadoop.

Example

- MapReduce – a parallel processing software framework. It consists of two steps. In the map step, a master node takes the input, partitions it into smaller subproblems and distributes them to worker nodes. In the reduce step, after the map step has taken place, the master node takes the answers to all of the subproblems and combines them to produce the output.
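The two steps are easiest to see in the classic word-count example. The single-process Python sketch below imitates the map, shuffle (grouping by key), and reduce stages in memory; it is not Hadoop's own Java API, and on a real cluster each stage would run as many parallel tasks on worker nodes.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Map: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle/sort: group all emitted values by key, in key order."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: combine the partial answers for one key."""
    return key, sum(values)


def word_count(lines: list[str]) -> dict[str, int]:
    # Each line stands in for an input split that a worker would map in parallel.
    mapped = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


print(word_count(["the quick fox", "the lazy dog", "The end"]))
# {'dog': 1, 'end': 1, 'fox': 1, 'lazy': 1, 'quick': 1, 'the': 3}
```

On a cluster, the shuffle is what moves each key's values to the node running that key's reduce task, which is why the sketch keeps it as a separate function.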

Goals

- SAS support for big data implementations, including Hadoop, centers on a singular goal: helping you know more, faster, so you can make better decisions. Regardless of how you use the technology, every project should go through an iterative and continuous improvement cycle. And that includes data preparation and management, data visualization and exploration, analytical m…

Results

- And remember, the success of any project is determined by the value it brings. So metrics built around revenue generation, margins, risk reduction and process improvements will help pilot projects gain wider acceptance and garner more interest from other departments. We've found that many organizations are looking at how they can implement a project or two in Hadoop, wit…