What is the main purpose of Flume?

Flume is an open-source distributed data collection service used for transferring data from a source to a destination. It is a reliable and highly available service for collecting, aggregating, and transferring huge amounts of log data into HDFS.

Where is the Flume agent installed?

The main Flume files are located in /usr/hdp/current/flume-server. The main configuration files are located in /etc/flume/conf.

How do I run a Flume agent?

There are two options for starting Flume.

To start Flume directly, run the following command on the Flume host: /usr/hdp/current/flume-server/bin/flume-ng agent -c /etc/flume/conf -f /etc/flume/conf/flume.conf -n agent

To start Flume as a service, run the following command on the Flume host: service flume-agent start

How do I know if the Flume agent is running?

To check that Apache Flume is installed correctly, cd to your flume/bin directory and enter the command flume-ng version. Use the ls command to make sure that you are in the correct directory: flume-ng appears in the output if you are.

How does Apache Flume work?

Apache Flume can store data in centralized stores such as HBase and HDFS. Flume is horizontally scalable. If data arrives faster than it can be written to the destination, Flume sits between the producers and the store and provides a steady flow of data between them. Flume provides reliable message delivery.

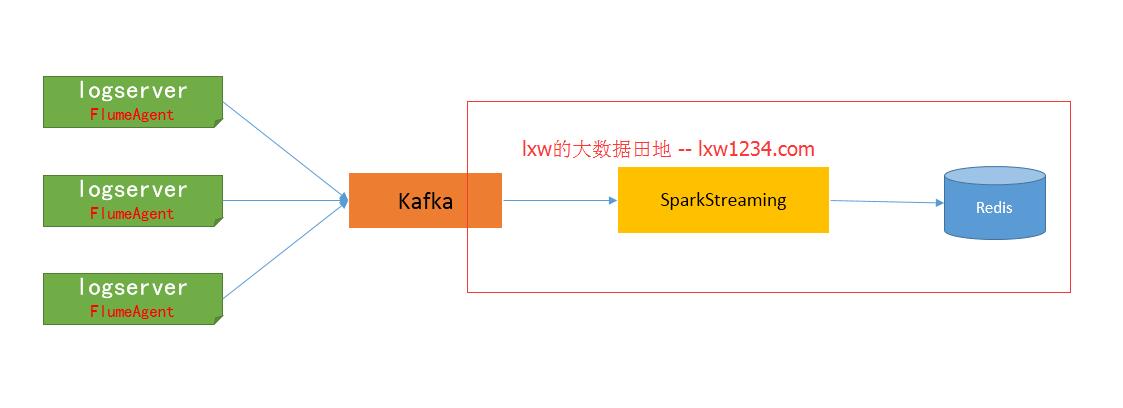

What is Kafka and Flume?

Apache Kafka is a distributed data system optimized for ingesting and processing streaming data in real time. Apache Flume is an available, reliable, and distributed system for efficiently collecting, aggregating, and moving large amounts of log data from many different sources to a centralized data store.

What are the components of Flume agent?

Flume agents consist of three elements: a source, a channel, and a sink. The channel connects the source to the sink. You must configure each element in the Flume agent. Different source, channel, and sink types have different configurations, as described in the Flume documentation.

What is Flume water?

This refers to a different product that shares the name, not Apache Flume. The Flume 2 is a wireless whole-home smart water monitor that connects to your phone and Amazon Alexa to detect leaks and tell you how much water you're using.

What is Flume architecture?

The Flume architecture consists of data generators, Flume agents, a data collector, and a central repository. A Flume agent consists of three components: a source, a channel, and a sink. There are also additional Flume components such as interceptors, channel selectors, and sink processors.

Which command will start the Flume agent?

The flume-ng executable lets you run a Flume NG agent or an Avro client which is useful for testing and experiments. No matter what, you'll need to specify a command (e.g. agent or avro-client ) and a conf directory ( --conf

How do I set up Flume?

Step 1: Configure a Repository. Step 2: Install JDK. Step 3: Install Cloudera Manager Server. Step 4: Install Databases. Install and Configure MariaDB. Install and Configure MySQL. Install and Configure PostgreSQL. ... Step 5: Set up the Cloudera Manager Database. Step 6: Install CDH and Other Software. Step 7: Set Up a Cluster.

How do I restart Flume?

This answer concerns the Flume desktop app (the one with Preferences and Flume Pro status), not Apache Flume. If you wish to completely reset that app, including all Preferences, Flume Pro status, caches, and account information, hold down the ⌥ (OPTION) key as soon as you launch Flume. You will be asked to confirm that you wish to reset Flume.

What would be the correct step after Flume and the Flume agent are installed?

After installing Flume, you need to configure it using the configuration file, which is a Java properties file of key-value pairs. You assign values to the keys in the file. The first steps are to name the components of the current agent and then to describe (configure) the source.

What is the preferred replacement for Flume?

Some of the top alternatives to Apache Flume are Apache Spark, Logstash, Apache Storm, Kafka, Apache Flink, Apache NiFi, and Papertrail, among others.

What is the difference between Flume and Sqoop?

The major difference between Sqoop and Flume is that Sqoop is used for loading data from relational databases into HDFS while Flume is used to capture a stream of moving data.

Which is important for multifunction Flume agents?

In multi-agent flows, the sink of the previous agent (e.g. Machine1) and the source of the current hop (e.g. Machine2) must both be of type avro, with the sink pointing to the hostname (or IP address) and port of the source machine. The Avro RPC mechanism thus acts as the bridge between agents in a multi-hop flow.
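The pairing described above can be sketched as a small Python helper that prints the matching configuration lines for both sides of one hop. This is only an illustration: the agent names, the component names avroSink and avroSrc, the host, and the port are hypothetical, and the channel definitions each agent also needs are left out.

```python
def avro_hop(upstream_agent, downstream_agent, host, port):
    """Return (sink_lines, source_lines) for one Avro hop between two agents.

    The names avroSink and avroSrc are hypothetical; any names would do.
    """
    sink_lines = [
        f"{upstream_agent}.sinks.avroSink.type = avro",
        # The sink points at the machine that runs the downstream source.
        f"{upstream_agent}.sinks.avroSink.hostname = {host}",
        f"{upstream_agent}.sinks.avroSink.port = {port}",
    ]
    source_lines = [
        f"{downstream_agent}.sources.avroSrc.type = avro",
        # The source listens on the same port the sink sends to.
        f"{downstream_agent}.sources.avroSrc.bind = 0.0.0.0",
        f"{downstream_agent}.sources.avroSrc.port = {port}",
    ]
    return sink_lines, source_lines


if __name__ == "__main__":
    sink, source = avro_hop("agent1", "agent2", "machine2.example.com", 4545)
    print("\n".join(sink + source))
```

The important detail is that the port appears on both sides and the sink's hostname resolves to the machine running the source.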

How many agents does Flume have?

An agent receives data (events) from clients or other agents and forwards it to its next destination (a sink or another agent). A Flume deployment may have more than one agent. Each Flume agent contains three main components: a source, a channel, and a sink.

What is Flume used for?

Along with log files, Flume is also used to import huge volumes of event data produced by social networking sites like Facebook and Twitter, and e-commerce websites like Amazon and Flipkart. Flume supports a large set of source and destination types.

What is Flume routing?

Flume provides the feature of contextual routing. The transactions in Flume are channel-based where two transactions (one sender and one receiver) are maintained for each message. It guarantees reliable message delivery. Flume is reliable, fault tolerant, scalable, manageable, and customizable.

Why is Flume needed?

A channel is needed to carry data between sources and sinks. Therefore, along with the sources and the sinks, you must describe the channel used in the agent.

How to download Flume?

Open the Apache Flume website. Click on the download link on the left-hand side of the home page. It takes you to the download page of Apache Flume.

Where is the OAuth test button in Flume?

The Test OAuth button is at the top right of the page. Clicking it leads to a page that displays your Consumer key, Consumer secret, Access token, and Access token secret. Copy these details; they are needed to configure the agent in Flume.

How many transactions are there in Flume?

In Flume, for each event, two transactions take place: one at the sender and one at the receiver. The sender sends events to the receiver. Soon after receiving the data, the receiver commits its own transaction and sends a “received” signal to the sender. After receiving the signal, the sender commits its transaction.
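The commit ordering described above can be illustrated with a toy Python simulation. It is not Flume code: the function name hand_off and the use of deques as stand-ins for channels are assumptions made for the sketch.

```python
from collections import deque


def hand_off(sender_channel, receiver_channel, delivery_ok=True):
    """Move one event between two toy channels and return the ordered steps."""
    steps = ["sender: begin transaction"]
    event = sender_channel[0]  # peek only; the event stays in the sender for now
    steps.append("receiver: begin transaction")
    if not delivery_ok:
        # Nothing was stored, so both sides roll back and the event is kept.
        steps.append("receiver: rollback")
        steps.append("sender: rollback")
        return steps
    receiver_channel.append(event)
    steps.append("receiver: commit")
    steps.append("receiver: send 'received' signal")
    # Only after the signal does the sender drop its copy and commit.
    sender_channel.popleft()
    steps.append("sender: commit")
    return steps


if __name__ == "__main__":
    sender, receiver = deque([b"log line"]), deque()
    for step in hand_off(sender, receiver):
        print(step)
```

Because the sender commits last, a failure at any earlier point leaves the event in the sender's channel, where it can be retried.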

Where is the agent in Flume?

An agent is started using a shell script called flume-ng, which is located in the bin directory of the Flume distribution. You need to specify the agent name (-n), the config directory (-c), and the config file (-f) on the command line.
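As a rough illustration, the command line can be assembled in Python before handing it to a process launcher. The helper name flume_ng_command is hypothetical, and the paths and agent name below are the ones quoted earlier in this FAQ; adjust them to your installation.

```python
import posixpath


def flume_ng_command(flume_home, conf_dir, conf_file, agent_name):
    """Build the argument list for starting one agent with flume-ng."""
    return [
        posixpath.join(flume_home, "bin", "flume-ng"),
        "agent",
        "-c", conf_dir,      # config directory
        "-f", conf_file,     # config file
        "-n", agent_name,    # agent name as used inside the config file
    ]


if __name__ == "__main__":
    cmd = flume_ng_command(
        "/usr/hdp/current/flume-server",
        "/etc/flume/conf",
        "/etc/flume/conf/flume.conf",
        "agent",
    )
    # Printed rather than executed; pass cmd to subprocess.run to launch it.
    print(" ".join(cmd))
```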

What is Flume used for?

Since data sources are customizable, Flume can be used to transport massive quantities of event data including but not limited to network traffic data, social-media-generated data, email messages and pretty much any data source possible. Apache Flume is a top level project at the Apache Software Foundation.

Why is Flume not logging?

Logging the raw stream of data flowing through the ingest pipeline is not desired behaviour in many production environments because this may result in leaking sensitive data or security related configurations, such as secret keys, to Flume log files. By default, Flume will not log such information.

How does an agent flow?

A flow is defined by listing the names of each of the sources, sinks, and channels in the agent, and then specifying the connecting channel for each sink and source. For example, an agent flows events from an Avro source called avroWeb to an HDFS sink called hdfs-cluster1 via a file channel called file-channel. The configuration file contains the names of these components, with file-channel as the shared channel for both the avroWeb source and the hdfs-cluster1 sink.
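A minimal sketch of that wiring, written as a Python helper that prints the relevant properties lines. The helper name render_flow and the agent name agent_foo are hypothetical, and the per-component settings (types, ports, HDFS paths) that a real configuration also needs are omitted.

```python
def render_flow(agent, source, channel, sink):
    """Return the properties lines that name the components and wire them up."""
    return [
        # Name the components of this agent.
        f"{agent}.sources = {source}",
        f"{agent}.sinks = {sink}",
        f"{agent}.channels = {channel}",
        # A source may feed several channels, hence the plural key.
        f"{agent}.sources.{source}.channels = {channel}",
        # A sink drains exactly one channel, hence the singular key.
        f"{agent}.sinks.{sink}.channel = {channel}",
    ]


if __name__ == "__main__":
    print("\n".join(render_flow("agent_foo", "avroWeb", "file-channel", "hdfs-cluster1")))
```

Note the asymmetry between the two wiring keys: channels on the source, channel on the sink.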

What is Flume's memory channel?

The memory channel simply stores events in an in-memory queue. It is faster than a durable channel, but any events still left in the memory channel when an agent process dies can’t be recovered.
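The trade-off can be shown with two toy channels in Python. Neither class is Flume's implementation; ToyMemoryChannel and ToyFileChannel are hypothetical names, and the file format is invented for the sketch. A process death is simulated by throwing the objects away and creating new ones.

```python
import os
import tempfile
from collections import deque


class ToyMemoryChannel:
    """Events live only in this object's queue."""

    def __init__(self):
        self.queue = deque()

    def put(self, event: bytes):
        self.queue.append(event)


class ToyFileChannel:
    """Events are appended to a file, one hex-encoded event per line."""

    def __init__(self, path):
        self.path = path

    def put(self, event: bytes):
        with open(self.path, "ab") as f:
            f.write(event.hex().encode("ascii") + b"\n")

    def pending(self):
        if not os.path.exists(self.path):
            return []
        with open(self.path, "rb") as f:
            return [bytes.fromhex(line.strip().decode("ascii")) for line in f if line.strip()]


def simulate_crash():
    """Put two events in each channel, 'restart', and report what survives."""
    path = os.path.join(tempfile.mkdtemp(), "channel.log")
    mem, disk = ToyMemoryChannel(), ToyFileChannel(path)
    for event in (b"a", b"b"):
        mem.put(event)
        disk.put(event)
    # Simulated process death: in-memory state is gone, the file remains.
    mem, disk = ToyMemoryChannel(), ToyFileChannel(path)
    return list(mem.queue), disk.pending()


if __name__ == "__main__":
    lost, recovered = simulate_crash()
    print("memory channel after restart:", lost)
    print("file channel after restart:", recovered)
```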

How are events staged in Flume?

The events are staged in a channel on each agent. The events are then delivered to the next agent or terminal repository (like HDFS) in the flow. The events are removed from a channel only after they are stored in the channel of the next agent or in the terminal repository. This is how the single-hop message delivery semantics in Flume provide end-to-end reliability of the flow.

What is Apache Flume?

Apache Flume is a distributed, reliable, and available system for efficiently collecting, aggregating and moving large amounts of log data from many different sources to a centralized data store. The use of Apache Flume is not only restricted to log data aggregation. Since data sources are customizable, Flume can be used to transport massive ...

What is Flume service?

Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data. It has a simple and flexible architecture based on streaming data flows. It is robust and fault tolerant with tunable reliability mechanisms and many failover and recovery mechanisms. It uses a simple extensible data model that allows for online analytic application.

Where to download Flume?

This release can be downloaded from the Flume download page at: http://flume.apache.org/download.html

What is Flume 1.9.0?

Version 1.9.0 is the eleventh Flume release as an Apache top-level project.

Is Flume 1.3.1 backwards compatible?

Flume 1.3.1 has been put through many stress and regression tests, is stable, production-ready software, and is backwards-compatible with Flume 1.3.0 and Flume 1.2.0. Apache Flume 1.3.1 is a maintenance release for the 1.3.0 release, and includes several bug fixes and performance enhancements.

What is a source in Flume?

A source is the component of an Agent which receives data from the data generators and transfers it to one or more channels in the form of Flume events.

What is the architecture of Flume?

As shown in the illustration, data generators (such as Facebook and Twitter) produce data that is collected by individual Flume agents running on them. A data collector (which is also an agent) then collects the data from these agents, aggregates it, and pushes it into a centralized store such as HDFS or HBase.

What is an event in Flume?

An event is the basic unit of the data transported inside Flume. It contains a byte-array payload that is to be transported from the source to the destination, accompanied by optional headers.
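The shape of an event, a byte payload plus optional string headers, can be modelled in a few lines of Python. This is a sketch, not Flume's Event interface; ToyEvent and make_event are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ToyEvent:
    body: bytes                                             # the payload, as raw bytes
    headers: Dict[str, str] = field(default_factory=dict)   # optional string attributes


def make_event(text, **headers):
    """Encode text as UTF-8 and attach any headers given as keyword arguments."""
    return ToyEvent(body=text.encode("utf-8"), headers=dict(headers))


if __name__ == "__main__":
    event = make_event("GET /index.html 200", host="web01")
    print(event.headers, event.body)
```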

Can Flume agents have multiple channels?

Yes. A Flume agent can have multiple sources, sinks, and channels.

What is a Flume agent?

A Flume agent is a process (JVM) that hosts the components that allow Events to flow from an external source to an external destination. A Source consumes Events having a specific format, and those Events are delivered to the Source by an external source like a web server.

How does Flume work?

The Sink removes an Event from the Channel only after the Event is stored into the Channel of the next agent or stored in the terminal repository. This is how the single-hop message delivery semantics in Flume provide end-to-end reliability of the flow. Flume uses a transactional approach to guarantee the reliable delivery of the Events. The Sources and Sinks encapsulate the storage and retrieval of the Events in a Transaction provided by the Channel. This ensures that the set of Events is reliably passed from point to point in the flow. In the case of a multi-hop flow, the Sink from the previous hop and the Source of the next hop both have their Transactions open to ensure that the Event data is safely stored in the Channel of the next hop.

What is the default RPC protocol for Flume?

As of Flume 1.4.0, Avro is the default RPC protocol. The NettyAvroRpcClient and ThriftRpcClient implement the RpcClient interface. The client needs to create this object with the host and port of the target Flume agent, and can then use the RpcClient to send data into the agent.

What is a channel in Flume?

The Channel is a passive store that holds the Event until that Event is consumed by a Sink. One type of Channel available in Flume is the FileChannel which uses the local filesystem as its backing store. A Sink is responsible for removing an Event from the Channel and putting it into an external repository like HDFS ...

What is an event in Flume?

An Event is a unit of data that flows through a Flume agent. The Event flows from Source to Channel to Sink, and is represented by an implementation of the Event interface. An Event carries a payload (byte array) that is accompanied by an optional set of headers (string attributes). A Flume agent is a process (JVM) that hosts the components that allow Events to flow from an external source to an external destination.

Where does Flume 1.x development take place?

The Flume 1.x development happens under the branch “trunk”.

Does Flume need AvroSource?

The remote Flume agent needs to have an AvroSource (or a ThriftSource if you are using a Thrift client) listening on some port.

What is Flume?

Apache Flume is a tool/service/data ingestion mechanism for collecting, aggregating, and transporting large amounts of streaming data, such as log files and events, from various sources to a centralized data store.

What stores can Flume store?

Using Apache Flume, we can store data in any of the centralized stores (HBase, HDFS).

Can Flume be used with Hadoop?

Using Flume, we can get the data from multiple servers immediately into Hadoop.

What is Apache Flume?

Apache Flume is a tool/service for efficiently collecting, combining, and moving large amounts of log data. Flume is a highly distributed, reliable, and available tool/service.

Flume Components?

Apache Flume is a collection of six important components.

Flume Advantages?

The basic advantage of Flume is that it is built for handling log data.

Flume Features?

Flume has a flexible design based on streaming data flows.