Everything you need to know about “activation functions” for deep learning models

- Activation Function An activation function is a function used to transform the output signal of the previous node in a neural network. ...

- Need for an activation function Well yeah, you already know the basic reason by now. ...

- Desired properties of an activation function As it’s clear from above, activation functions should be non-linear. ...

- Different types of activation functions ReLU ...

- Conclusions ...

What is an activation function?

This is where activation functions come into play. An activation function is a deceptively small mathematical expression that decides whether a neuron fires or not. In effect, the activation function suppresses the neurons whose inputs are of no significance to the overall task of the neural network.

What is the activation function in artificial neural networks?

In artificial neural networks (ANNs), the activation function determines the output of each neuron, and therefore of the network: it decides whether the neuron should be activated or not. The choice affects the model's output, its accuracy, and its computational efficiency. [Figure: single neuron structure. Source: wiki.tum.de] Inputs are fed into the neurons in the input layer.

How do I Choose an activation function in deep learning?

There is no clear-cut answer for selecting an activation function; it depends on the problem to be solved. If you are new to deep learning, you might start with the sigmoid function and then move on to others, depending on your results.

What are the different aspects of deep learning?

There are various aspects of deep learning that we usually have to consider while making a deep learning model. Choosing the right number of layers, the activation function, number of epochs, loss function, the optimizer to name a few.

What is an activation function in neural network?

An activation function decides whether a neuron should be activated or not. In other words, it uses simple mathematical operations to decide whether the neuron's input is important to the prediction.

What is meant by an activation function?

An activation function is the function in an artificial neuron that delivers an output based on inputs. Activation functions in artificial neurons are an important part of the role that the artificial neurons play in modern artificial neural networks.

What are activation functions in CNN?

An activation function is applied to the output of a layer, either between layers or at the end of a neural network. It helps decide whether a neuron fires or not. “The activation function is the non linear transformation that we do over the input signal. This transformed output is then sent to the next layer of neurons as input.”

What is activation function and types?

An activation function is a very important feature of an artificial neural network: it decides whether a neuron should be activated or not. In artificial neural networks, the activation function defines the output of a node given an input or set of inputs.

Why is ReLU used in CNN?

ReLU is cheap to compute, so using it helps keep the computation required to run the neural network from growing exponentially. As the CNN scales in size, the computational cost of adding extra ReLUs increases only linearly.

Why do we need an activation function?

In broad terms, activation functions are necessary to prevent linearity. Without them, the data would pass through the nodes and layers of the network going only through linear functions (a*x + b), and a composition of linear functions is itself linear.

What is ReLU and Softmax?

The activation function is chosen according to the requirements of the task. Generally, ReLU is used in the hidden layers to avoid the vanishing gradient problem and for better computational performance, and the softmax function is used in the final output layer.

Is Softmax an activation function?

The softmax function is used as the activation function in the output layer of neural network models that predict a multinomial probability distribution.

What is ReLU in deep learning?

The Rectified Linear Unit (ReLU) is the most commonly used activation function in deep learning. The function returns 0 if the input is negative, and returns the input itself for any positive input. The function is defined as: f(x) = max(0, x). [Figure: plot of ReLU and its derivative.]
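As a small illustration of the ReLU behaviour described above, here is a minimal sketch in plain Python. The function names `relu` and `relu_derivative` are chosen for this example, and treating the derivative at exactly 0 as 0 is a common convention assumed here, not something the source specifies.

```python
def relu(x):
    """Return 0 for negative inputs and the input itself otherwise."""
    return max(0.0, x)


def relu_derivative(x):
    """Slope of ReLU: 1 for positive inputs, 0 for negative inputs.

    The derivative is undefined at x == 0; returning 0 there is an
    assumed convention for this sketch.
    """
    return 1.0 if x > 0 else 0.0


for value in (-2.0, 0.0, 3.5):
    print(value, relu(value), relu_derivative(value))
```

For array inputs, a vectorized library call such as NumPy's `np.maximum(0, x)` would be the usual choice.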

What is the best activation function in neural networks?

ReLU (Rectified Linear Unit) Activation Function: ReLU is the most widely used activation function right now, since it appears in almost all convolutional neural networks and deep learning models.

How many types of activation functions are there?

6 Types of Activation Function in Neural Networks You Need to...

- Sigmoid Function
- Hyperbolic Tangent Function (Tanh)
- Softmax Function
- Softsign Function
- Rectified Linear Unit (ReLU) Function
- Exponential Linear Units (ELUs) Function

How many activation functions are there?

There are perhaps three activation functions you may want to consider for use in hidden layers: Rectified Linear Activation (ReLU), Logistic (Sigmoid), and Hyperbolic Tangent (Tanh).

What is activation function Mcq?

Each neuron has a weight, and multiplying the input number with the weight gives the output of the neuron, which is transferred to the next layer. The activation function is a mathematical “gate” in between the input feeding the current neuron and its output going to the next layer.

How do you find the activation function?

The larger the input (more positive), the closer the output value will be to 1.0, whereas the smaller the input (more negative), the closer the output will be to -1.0. The Tanh activation function is calculated as follows: tanh(x) = (e^x - e^(-x)) / (e^x + e^(-x))
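The tanh formula above translates directly into code. This is a minimal sketch using only the standard library; the helper name `tanh_from_formula` is made up for the example, and in practice the built-in `math.tanh` is preferable because the naive formula overflows for very large inputs.

```python
import math


def tanh_from_formula(x):
    """Compute tanh(x) as (e^x - e^-x) / (e^x + e^-x)."""
    pos = math.exp(x)
    neg = math.exp(-x)
    return (pos - neg) / (pos + neg)


# Compare the hand-written formula with the standard library version.
for value in (-5.0, -0.5, 0.0, 0.5, 5.0):
    print(value, tanh_from_formula(value), math.tanh(value))
```

Large positive inputs approach 1.0 and large negative inputs approach -1.0, matching the description above.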

What is activation function and threshold?

A threshold activation function (also called a step function) produces an output signal only when the input signal exceeds a specific threshold value.

What is the impact of activation functions in deep neural networks?

The choice of activation functions in Deep Neural Networks has a significant impact on the training dynamics and task performance.

How are inputs fed into neurons?

Inputs are fed into the neurons in the input layer. The inputs (Xi) are multiplied by their weights (Wi), summed, and added to the bias (b) to give the output of the neuron, y = Σ(Xi*Wi) + b. We apply the activation function to y, and the result is then transferred to the next layer.
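The forward pass of a single neuron can be sketched as follows. The input values, weights, and bias are made up for illustration, and sigmoid is used as the activation only as an example; any other activation could be passed in.

```python
import math


def sigmoid(z):
    """Logistic sigmoid, used here only as an example activation."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of inputs plus bias, passed through the activation."""
    y = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(y)


# Hypothetical values chosen so the weighted sum is exactly 0.
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -0.25, 0.0]
bias = 0.0

print(neuron_output(inputs, weights, bias))  # 0.5, because sigmoid(0) = 0.5
```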

Does the synapse activate all the neurons at the same time?

It does not activate all the neurons at the same time.

Why Do We Need Activation Functions?

The purpose of an activation function is to add some kind of non-linear property to the function, which is a neural network. Without the activation functions, the neural network could perform only linear mappings from inputs x to the outputs y. Why is this?
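One way to see why is to stack two layers without any activation and check that they collapse into a single linear layer. The sketch below uses scalar weights with made-up values to keep the arithmetic visible; the same argument holds for weight matrices.

```python
# Hypothetical scalar weights and biases for two stacked layers.
W1, B1 = 2.0, 1.0
W2, B2 = -3.0, 0.5


def two_linear_layers(x):
    """Two layers with no activation: y = W2 * (W1 * x + B1) + B2."""
    hidden = W1 * x + B1
    return W2 * hidden + B2


def collapsed_layer(x):
    """The equivalent single linear layer: y = (W2*W1) * x + (W2*B1 + B2)."""
    return (W2 * W1) * x + (W2 * B1 + B2)


for value in (-2.0, 0.0, 1.5):
    print(value, two_linear_layers(value), collapsed_layer(value))
```

Both functions give the same output for every input, so adding depth without a non-linearity adds no expressive power.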

What is the function of softmax activation?

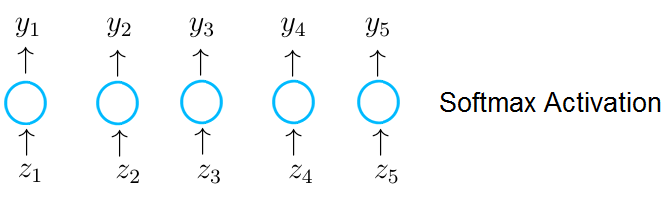

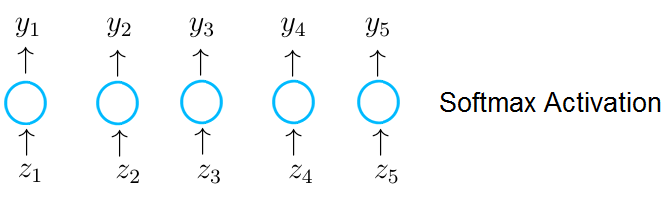

Simply speaking, the softmax activation function forces the values of output neurons to take values between zero and one, so they can represent probability scores.

What is the input vector of a neuron in the output layer?

Imagine that the neurons in the output layer receive an input vector z, which is the result of a dot product between the weight matrix of the current layer and the output of the previous layer. A neuron in the output layer with a softmax activation receives a single value z_1, which is an entry in the vector z, and outputs the value y_1.

What are the undesirable properties of sigmoid activation?

Another undesirable property of the sigmoid activation is the fact that the outputs of the function are not zero-centered. Usually, this makes training the neural network more difficult and unstable.

What does the softmax function do?

Fortunately, the softmax function does not only force the outputs into the range between zero and one, but the function also makes sure that the outputs across all possible classes sum up to one. Let's now see how the softmax function works in practice.
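A minimal softmax sketch in plain Python illustrates both properties. The input scores are made-up example values, and subtracting the maximum before exponentiating is a standard numerical-stability trick added here; it does not change the result.

```python
import math


def softmax(z):
    """Map a list of scores to probabilities that sum to one."""
    shift = max(z)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(value - shift) for value in z]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical output-layer inputs for three classes.
probs = softmax([2.0, 1.0, 0.1])
print(probs)
print(sum(probs))
```

Each output lies between zero and one, the outputs sum to one, and the largest input score receives the largest probability.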

What is the function of neural network?

Just like any regular mathematical function, a neural network maps from an input x to an output y.

Is a neural network a function?

That is, instead of considering a neural network as a simple collection of nodes and connections, we can think of a neural network as a function. This function incorporates all calculations that we’ve viewed separately as one single, chained calculation:
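As a rough sketch of this view, a tiny network can be written as a composition of per-layer functions. The two-layer structure, the scalar weights, and the choice of tanh and sigmoid below are all assumptions made for illustration; they are not taken from the source.

```python
import math


def hidden_layer(x, w=0.8, b=0.1):
    """First layer: linear step followed by a tanh activation."""
    return math.tanh(w * x + b)


def output_layer(h, w=-1.5, b=0.3):
    """Second layer: linear step followed by a sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-(w * h + b)))


def network(x):
    """The whole network as one chained function: y = f2(f1(x))."""
    return output_layer(hidden_layer(x))


print(network(1.0))
```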

What is the first thing that comes to our mind when we have an activation function?

The first thing that comes to our mind when we have an activation function would be a threshold based classifier i.e. whether or not the neuron should be activated based on the value from the linear transformation.

What is the most widely used non-linear activation function?

The next activation function that we are going to look at is the sigmoid function. It is one of the most widely used non-linear activation functions. Sigmoid maps its input into the range between 0 and 1. Here is the mathematical expression for sigmoid: sigmoid(x) = 1 / (1 + e^(-x))

What is a neural network without activation function?

A neural network without an activation function is essentially just a linear regression model. Thus we apply a non-linear transformation to the inputs of the neuron, and this non-linearity in the network is introduced by the activation function.

Is activation proportional to input?

Here the activation is proportional to the input. The variable ‘a’ in this case can be any constant value. Let’s quickly define the function in Python:
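A minimal sketch of such a linear activation is shown below. The default value a = 4.0 is an arbitrary choice for the example, since the constant can be anything.

```python
def linear_activation(x, a=4.0):
    """Linear activation: the output is proportional to the input."""
    return a * x


def linear_derivative(x, a=4.0):
    """The gradient is the constant a and does not depend on x."""
    return a


for value in (-1.0, 0.0, 2.5):
    print(value, linear_activation(value), linear_derivative(value))
```

Because the gradient is the same constant for every input, backpropagation gains no information about the input from this activation.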

Can a binary step function be used as an activation function?

The binary step function can be used as an activation function while creating a binary classifier. As you can imagine, this function will not be useful when there are multiple classes in the target variable. That is one of the limitations of binary step function.
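The binary step function is simple enough to sketch in a couple of lines. The threshold of 0 and the choice to output 1 when the input equals the threshold are assumptions made for this example.

```python
def binary_step(x, threshold=0.0):
    """Return 1 if the input reaches the threshold, otherwise 0."""
    return 1 if x >= threshold else 0


for value in (-0.1, 0.0, 2.0):
    print(value, binary_step(value))
```

The output has only two possible values, which is why this function cannot separate more than two classes on its own.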

Is a smooth S-shaped function continuously differentiable?

Additionally, as you can see in the graph above, this is a smooth S-shaped function and is continuously differentiable. The derivative of this function comes out to be sigmoid(x) * (1 - sigmoid(x)). Let’s look at the plot of its gradient.
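The closed-form derivative can be checked numerically. The sketch below compares sigmoid(x) * (1 - sigmoid(x)) with a central finite difference; the helper names and the step size h are choices made for this example.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x):
    """Closed form: sigmoid(x) * (1 - sigmoid(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def numerical_derivative(f, x, h=1e-6):
    """Central finite-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


for value in (-2.0, 0.0, 3.0):
    print(value, sigmoid_derivative(value), numerical_derivative(sigmoid, value))
```

The derivative peaks at x = 0 with a value of 0.25 and shrinks toward zero as the input moves away from zero in either direction.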

Can we do without an activation function?

Now the question is – if the activation function increases the complexity so much, can we do without an activation function?

How does activation function help neural networks?

The problem if we do this is that the layers of the neural network won't be able to learn complex functions. The activation function adds non-linearity to the model, which helps it learn complex functions. The value that a node sends to the next layer is calculated by applying the activation function to the weighted sum of the node's inputs.

How are weights updated in deep learning?

The weights are updated using gradients computed with the chain rule. But in deep learning there is a problem: if the derivative of the activation function is very small, the weights that are being updated change very little. This makes training very slow, since training is all about updating the weights so that they converge.
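A rough way to see how small derivatives compound is to multiply one sigmoid derivative per layer, as the chain rule does. The sketch below is a deliberate simplification: it ignores the weights entirely and assumes every layer sees the same pre-activation value, so it only illustrates the trend.

```python
import math


def sigmoid_derivative(x):
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def gradient_factor(num_layers, pre_activation=0.0):
    """Product of one sigmoid derivative per layer (weights ignored)."""
    return sigmoid_derivative(pre_activation) ** num_layers


# Even in the best case (derivative 0.25 at x = 0) the factor decays fast.
for depth in (1, 5, 10):
    print(depth, gradient_factor(depth))
```

With ten layers the factor is already below one in a million, so weights in early layers would receive almost no update under these assumptions.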

What is the value of alpha?

The value of alpha defines the curve for values less than 0. It is a hyperparameter, and its value depends on the use case and dataset.

Is ReLU activation the same as positive?

This is another improved version of the ReLU activation function. Like the other variants of ReLU, it differs in how it handles negative values. For positive values, all of the above ReLU units behave the same.
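The source does not name the variant at this point, so the sketch below uses Leaky ReLU and ELU as two common examples of ReLU variants with an alpha hyperparameter. The default alpha values are typical choices assumed for illustration.

```python
import math


def relu(x):
    return max(0.0, x)


def leaky_relu(x, alpha=0.01):
    """Negative inputs are scaled by a small slope alpha."""
    return x if x > 0 else alpha * x


def elu(x, alpha=1.0):
    """Negative inputs follow alpha * (e^x - 1), saturating at -alpha."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


for value in (-2.0, -0.5, 0.5, 2.0):
    print(value, relu(value), leaky_relu(value), elu(value))
```

For positive inputs the three functions return the same value; they differ only in how alpha shapes the output for negative inputs.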

What is activation function?

An activation function is a function used to transform the output signal of the previous node in a neural network. This transformation helps the network to learn complex patterns in the data. That’s it! I also didn’t believe initially that it’s this simple, but it’s true.

Why is activation function important?

This is important because the input to the activation function is W*x + b, where W is the weights of the cell, x is the inputs, and b is the bias added to that. If not restricted, this value can attain a very high magnitude, especially in the case of very deep neural networks that work with millions of parameters. This in turn leads to computational issues as well as value overflow issues.

What is the main requirement for all the components of a neural network?

The main requirement for all the components of a neural network is that they should be differentiable, hence an activation function should also be differentiable.

Is activation function linear or nonlinear?

As it’s clear from above, activation functions should be non-linear.

Neural Network as A Function

Why Do We Need Activation functions?

- The purpose of an activation function is to add some kind of non-linear property to the function, which is a neural network. Without the activation functions, the neural network could perform only linear mappings from inputs x to the outputs y. Why is this? Without the activation functions, the only mathematical operation during the forward propaga...

Different Kinds of Activation Functions

- At this point, we should discuss the different activation functions we use in deep learning as well as their advantages and disadvantages

What Activation Function Should I use?

- I will answer this question with the best answer there is: it depends. Specifically, it depends on the problem you are trying to solve and the value range of the output you’re expecting. For example, if you want your neural network to predict values that are larger than one, then tanh or sigmoid is not suitable for use in the output layer, since their outputs never exceed one, and ReLU can be used instead. On the other hand, if we e…

Table of Contents

- Why Do We Need Activation Functions in CNN?

- Variants Of Activation Function

- Python Code Implementation

- Conclusion

Why Do We Need It?

- Non-linear activation functions: Without an activation function, a Neural Network is just a linear regression model. The activation function transforms the input in a non-linear way, allowing it to learn and as well as accomplish more complex tasks. Mathematical proof:- The diagram’s elements include:- A hidden layer, i.e. layer 1:- A visible layer...

Variants of Activation Function

- 1) Linear Function:
  - Equation: The equation for a linear function is y = ax, which is very similar to the equation for a straight line.
  - Range: -inf to +inf
  - Applications: The linear activation function is used in only one place, the output layer.
  - Problems: If we differentiate a linear function to introduce non-linearity, the outcome will no longer be related to the input “x” and the function will …

Python Code Implementation

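- Sigmoid and its derivative, plotted with matplotlib:

      import numpy as np
      import matplotlib.pyplot as plt

      def sigmoid(x):
          s = 1 / (1 + np.exp(-x))  # sigmoid value
          ds = s * (1 - s)          # derivative of the sigmoid
          return s, ds

      x = np.arange(-6, 6, 0.01)
      s, ds = sigmoid(x)

      # Plot the function and its derivative on the same axes
      fig, ax = plt.subplots(figsize=(9, 5))
      ax.plot(x, s, color="#307EC7", linewidth=3, label="sigmoid")
      ax.plot(x, ds, color="#9621E2", linewidth=3, label="derivative")
      ax.legend()
      plt.show()

  Output: a plot of the sigmoid and its derivative. Source: Author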

Conclusion

- Key Takeaways:
  1. We covered the basic introduction to activation functions.
  2. We also covered the various reasons for using an activation function.
  3. We also covered the Python implementation of activation functions.
  4. We also covered the variants of activation functions.
  5. At last, we covered the need for the addition of Non-Linearity with Activation Functi…

Overview

- Activation functions are one of the building blocks of neural networks

- Learn about the different activation functions in deep learning

- Code activation functions in Python and visualize the results in a live coding window

Introduction

- The Internet provides access to a plethora of information today. Whatever we need is just a Google (search) away. However, when we have so much information, the challenge is to separate relevant from irrelevant information. When our brain is fed with a lot of information simultaneously, it tries hard to understand and classify the information into “useful” and “not-so-…

Table of Contents

- Brief overview of neural networks

- Can we do without an activation function?

- Popular types of activation functions and when to use them

- Choosing the Right Activation Function

Brief Overview of Neural Networks

- Before I delve into the details of activation functions, let us quickly go through the concept of neural networks and how they work. A neural network is a very powerful machine learning mechanism which basically mimics how a human brain learns. The brain receives the stimulus from the outside world, does the processing on the input, and then generates the output. As the …

Can We Do Without An Activation function?

- We understand that using an activation function introduces an additional step at each layer during the forward propagation. Now the question is – if the activation function increases the complexity so much, can we do without an activation function? Imagine a neural network without the activation functions. In that case, every neuron will only be performing a linear transformation o…

Popular Types of Activation Functions and When to Use Them

- 1. Binary Step Function

The first thing that comes to our mind when we have an activation function would be a threshold based classifier i.e. whether or not the neuron should be activated based on the value from the linear transformation. In other words, if the input to the activation function is greater than a thre…

- 2. Linear Function

We saw the problem with the step function: the gradient of the function became zero. This is because there is no component of x in the binary step function. Instead of a binary function, we can use a linear function. We can define the function as f(x) = ax. Here the activation is proportional to th…

Choosing The Right Activation Function

- Now that we have seen so many activation functions, we need some logic / heuristics to know which activation function should be used in which situation. Good or bad – there is no rule of thumb. However depending upon the properties of the problem we might be able to make a better choice for easy and quicker convergence of the network. 1. Sigmoid functions and their combin…

Projects

- Now, it's time to take the plunge and actually play with some other real datasets. So are you ready to take on the challenge? Accelerate your deep learning journey with the following Practice Problems: 1. Practice Problem: Identify the Apparels 2. Practice Problem: Identify the Digits

End Notes

- In this article I have discussed the various types of activation functions and the types of problems one might encounter while using each of them. I would suggest beginning with a ReLU function and exploring other functions as you move further. You can also design your own activation functions giving a non-linearity component to your network. If you have used your ow…