What is the best caching service in AWS?

The large portfolio of AWS also includes services for caching. Amazon ElastiCache offers in-memory caches based on Redis or Memcached. Amazon CloudFront provides a Content Delivery Network (CDN) that caches HTTP responses close to your customers worldwide.

What is caching in computer?

In computing, a cache is a high-speed data storage layer which stores a subset of data, typically transient in nature, so that future requests for that data are served up faster than is possible by accessing the data’s primary storage location. Caching allows you to efficiently reuse previously retrieved or computed data.

What are managed caches in AWS?

Repeated read access patterns have made a caching layer part of most modern applications. These access patterns arise in different use cases, such as configuration files, images, or any file served from a CDN.

What is ElastiCache in AWS?

Amazon ElastiCache is a fully managed, in-memory caching service supporting flexible, real-time use cases. You can use ElastiCache for caching, which accelerates application and database performance, or as a primary data store for use cases that don't require durability like session stores, gaming leaderboards, streaming, and analytics.

What is caching and how it works?

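Caching works by keeping a copy of data that is likely to be accessed again in faster storage, such as a device's memory, so that later requests do not have to go back to the slower original source. In a computer, the CPU cache sits high in the memory hierarchy, just below the central processing unit (CPU) itself and above main memory (RAM).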

What is caching & types of caching?

Caching is a mechanism to improve the performance of any type of application. Technically, caching is the process of storing and accessing data from a cache. But wait, what is a cache? A cache is a software or hardware component aimed at storing data so that future requests for the same data can be served faster.

What is caching with example?

Caches store temporary copies of data and can be built from hardware or software components. An example of a hardware cache is a CPU cache: a small chunk of memory on the computer's processor that stores recently or frequently used instructions and data.

What is cache in simple terms?

A cache is a reserved storage location that collects temporary data to help websites, browsers, and apps load faster. Whether it's a computer, laptop or phone, web browser or app, you'll find some variety of a cache. A cache makes it easy to quickly retrieve data, which in turn helps devices run faster.

What is cloud cache?

In Microsoft FSLogix, Cloud Cache is a technology that provides incremental functionality to Profile Container and Office Container. After the first read, Cloud Cache uses a Local Profile to serve all reads from a redirected Profile or Office Container.

What are the two types of caching?

ASP.NET provides several types of caching, including:

- Output caching: stores a copy of the final rendered HTML page, or part of a page, that is sent to the client.
- Data caching: caches data retrieved from a data source.

What are the 3 types of cache memory?

There are three general cache levels:

- L1 cache, or primary cache, is extremely fast but relatively small, and is usually embedded in the processor chip as CPU cache.
- L2 cache, or secondary cache, is often more capacious than L1.
- Level 3 (L3) cache is specialized memory developed to improve the performance of L1 and L2.

Is cache a memory?

Cache is temporary memory, commonly referred to as “CPU cache memory.” This chip-based feature of your computer lets you access some information more quickly than if you accessed it from main memory (RAM).

Where cache is stored?

Cache memory sits between the processor and main memory (DRAM). Level 1 cache is built directly into the processor. In older computers, Level 2 cache was often a separate chip placed between the processor and DRAM; in modern processors, L2 (and usually L3) cache is also on the processor chip.

When should you cache data?

Caching means storing data in a location different from the main data source so that the data is faster to access. Caches are generally used to keep track of frequent responses to user requests, and they can also store the results of long computational operations.
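For the second case, caching the results of long computations, Python's standard `functools.lru_cache` decorator is one simple option. This is an illustration, not taken from any AWS service: `expensive_report` is a hypothetical stand-in for a slow calculation, and the `CALLS` counter exists only to show that the real work runs once.

```python
from functools import lru_cache

CALLS = {"count": 0}  # hypothetical counter, only to show how often the real work runs


@lru_cache(maxsize=128)
def expensive_report(n: int) -> int:
    """Hypothetical stand-in for a long computation: the sum of squares below n."""
    CALLS["count"] += 1
    return sum(i * i for i in range(n))


first = expensive_report(1_000_000)   # computed and stored in the cache
second = expensive_report(1_000_000)  # returned from the cache, no recomputation
```

This cache lives inside a single process. Sharing results across several servers is where an external cache such as ElastiCache comes in.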

Why is cache faster than database?

When query results are fetched, they are stored in the cache. The next time that information is needed, it is fetched from the cache instead of the database. This can reduce latency because data is fetched from memory, which is faster than disk.

What is the purpose of the cache?

Cache is a small amount of memory that is part of the CPU, closer to it than RAM. It is used to temporarily hold instructions and data that the CPU is likely to reuse.

How does cache work in the CPU?

Cache memory temporarily stores instructions and data that the CPU uses frequently. When the CPU needs data, it checks the cache first, because main memory (RAM) is slower and farther away from the CPU.

How does cache work in browser?

The basic idea behind it is the following: The browser requests some content from the web server. If the content is not in the browser cache then it is retrieved directly from the web server. If the content was previously cached, the browser bypasses the server and loads the content directly from its cache.
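The browser flow above can be modeled in a few lines. This is a simplified sketch under stated assumptions: `BrowserCache`, the `fetch` callback and `fake_server` are hypothetical, freshness is decided only by a `max-age`-style lifetime, and the revalidation that real browsers perform (for example with `ETag` and `If-None-Match`) is left out.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class CachedResponse:
    body: str
    stored_at: float
    max_age: float  # seconds the response may be reused, like Cache-Control: max-age


class BrowserCache:
    """Hypothetical model of a browser cache keyed by URL (no revalidation)."""

    def __init__(self, fetch: Callable[[str], Tuple[str, float]],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch    # returns (body, max_age_seconds) from the "server"
        self._clock = clock
        self._entries: Dict[str, CachedResponse] = {}

    def get(self, url: str) -> str:
        now = self._clock()
        entry = self._entries.get(url)
        if entry is not None and now - entry.stored_at < entry.max_age:
            return entry.body                  # fresh copy: skip the server
        body, max_age = self._fetch(url)       # not cached or stale: ask the server
        self._entries[url] = CachedResponse(body, now, max_age)
        return body


server_hits: List[str] = []


def fake_server(url: str) -> Tuple[str, float]:
    server_hits.append(url)
    return f"<html>{url}</html>", 60.0


now = [0.0]
browser = BrowserCache(fake_server, clock=lambda: now[0])
browser.get("/index.html")   # first visit: fetched from the server
browser.get("/index.html")   # reload within 60 s: served from the cache
now[0] = 61.0
browser.get("/index.html")   # stale: fetched again
```

Injecting the clock makes the expiry easy to exercise without actually waiting 60 seconds.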

What does caching mean in computer terms?

Caching (pronounced “cashing”) is the process of storing data in a cache. A cache is a temporary storage area. For example, the files you automatically request by looking at a Web page are stored on your hard disk in a cache subdirectory under the directory for your browser.

Amazon ElastiCache

Amazon ElastiCache is a web service that makes it easy to deploy, operate, and scale an in-memory data store and cache in the cloud. The service improves the performance of web applications by allowing you to retrieve information from fast, managed, in-memory data stores, instead of relying entirely on slower disk-based databases.

Amazon DynamoDB Accelerator (DAX)

Amazon DynamoDB Accelerator (DAX) is a fully managed, highly available, in-memory cache for DynamoDB that delivers up to a 10x performance improvement – from milliseconds to microseconds – even at millions of requests per second.

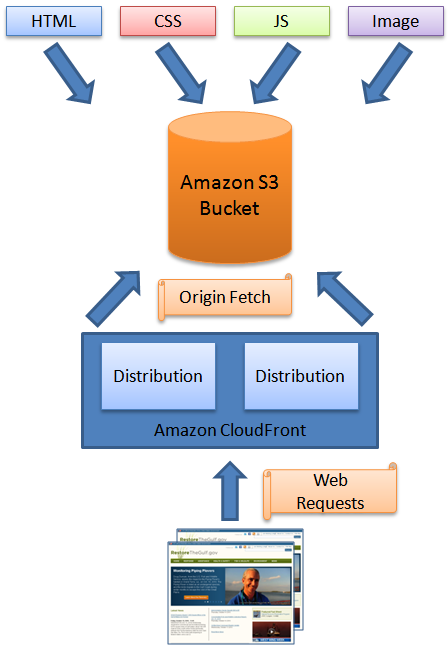

Amazon CloudFront

Amazon CloudFront is a global content delivery network (CDN) service that accelerates delivery of your websites, APIs, video content or other web assets. It integrates with other Amazon Web Services products to give developers and businesses an easy way to accelerate content to end users with no minimum usage commitments.

AWS Greengrass

AWS Greengrass is software that lets you run local compute, messaging & data caching for connected devices in a secure way. With AWS Greengrass, connected devices can run AWS Lambda functions, keep device data in sync, and communicate with other devices securely – even when not connected to the Internet.

Amazon Route 53

Amazon Route 53 is a highly available and scalable cloud Domain Name System (DNS) web service.

Overview

In-memory data caching can be one of the most effective strategies to improve your overall application performance and to reduce your database costs.

How Caching Helps

A database cache supplements your primary database by removing unnecessary pressure on it, typically in the form of frequently accessed read data. The cache itself can live in a number of areas including your database, application or as a standalone layer.

Relational Database Caching techniques

Many of the techniques we’ll review can be applied to any type of database. However, we’ll focus on relational databases, as they are the most common database caching use case.

Amazon ElastiCache Compared to Self-Managed Redis

Redis is an open source, in-memory data store that has become the most popular key/value engine in the market. Much of its popularity is due to its support for a variety of data structures as well as other features including Lua scripting support and Pub/Sub messaging capability.

How to apply caching

Caching is applicable to a wide variety of use cases, but fully exploiting caching requires some planning. When deciding whether to cache a piece of data, consider the following questions:

Caching design patterns

Lazy caching, also called lazy population or cache-aside, is the most prevalent form of caching. Laziness should serve as the foundation of any good caching strategy. The basic idea is to populate the cache only when an object is actually requested by the application. The overall application flow goes like this:

Cache (almost) everything

Finally, it might seem as if you should only cache your heavily hit database queries and expensive calculations, but that other parts of your app might not benefit from caching.

Caching technologies

The most popular caching technologies are in the in-memory Key-Value category of NoSQL databases. An in-memory key-value store is a NoSQL database optimized for read-heavy application workloads (such as social networking, gaming, media sharing and Q&A portals) or compute-intensive workloads (such as a recommendation engine).

Get started with Amazon ElastiCache

It's easy to get started with caching in the cloud with a fully managed service like Amazon ElastiCache. It removes the complexity of setting up, managing, and administering your cache, and frees you up to focus on what brings value to your organization.

Caching in AWS

ElastiCache for Redis & Memcached

AWS ElastiCache is a caching service that supports both Redis and Memcached engines. Both Redis and Memcached have a baseline of standard features but provide enough unique features to have separate use cases for both. We’ll delve into the architecture and AWS-managed features for both of these caches in the upcoming posts.

DynamoDB

Caching doesn’t start and end with Redis and Memcached. DynamoDB is a multi-purpose, serverless, key-value database used both as a key-value-cum-document store and a cache. For simple applications, you can use DynamoDB to store configuration files for your application.

In-Memory Tables in Databases

Having used relational databases extensively, I have to mention that I haven’t seen a complex application using only a single caching solution. Databases use the same caching mechanisms in the form of buffer memory. The idea is to keep as much of the database within memory as possible.

Why add cache to architecture?

Adding a cache to your architecture can help to optimize performance, flatten traffic spikes, and reduce costs. I highly recommend thinking about caching already when designing an architecture. Introducing a caching layer later might be challenging.

What is Cloudfront cache?

CloudFront uses its global network of edge locations to minimize the distance, and therefore the latency, between your users and your cloud infrastructure. You can think of CloudFront as a cache for static web content, like stylesheets, images, and videos, as well as a cache for dynamic content, like a rendered HTML page.

What to do if cache cannot answer a request?

If the cache cannot answer the request, send the request to the database and write the result to the cache. As an alternative, you could use the “write-through” strategy: when writing data to the database, update the cache as well, and answer each read request from the cache instead of the database.

How to populate cache?

First, how do you populate your cache? The “lazy loading” strategy uses the following approach:

1. Ask the cache for a result.
2. If the cache cannot answer the request, send the request to the database.
3. Write the result from the database to the cache.

Which frameworks support Memcached?

Many application frameworks like Spring or Ruby on Rails come with support for either Redis or Memcached to cache database queries, rendered partials, and more.

Does write through update cache?

It is also worth noting that the “write-through” strategy does update the cache automatically. There is no need to think about cache invalidation in that scenario. But it requires that all your data fit into the cache!
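A write-through store can be sketched the same way. Assumptions: `WriteThroughStore` is a hypothetical class, and dictionaries again stand in for the database and the cache. Because every write updates both, reads need no invalidation logic, but only while the whole data set fits in the cache.

```python
from typing import Dict


class WriteThroughStore:
    """Hypothetical sketch: every write goes to the database and the cache together."""

    def __init__(self) -> None:
        self.database: Dict[str, str] = {}
        self.cache: Dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self.database[key] = value   # write to the system of record first
        self.cache[key] = value      # then update the cache in the same operation

    def get(self, key: str) -> str:
        # Every written key is in the cache, so reads never touch the database.
        # This only holds if the whole data set fits in the cache.
        return self.cache[key]


store = WriteThroughStore()
store.put("config:theme", "dark")
store.put("config:theme", "light")   # overwrites both copies, nothing goes stale
```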

The ecstasy and the agony of caches

Over years of building services at Amazon we’ve experienced various versions of the following scenario: We build a new service, and this service needs to make some network calls to fulfill its requests. Perhaps these calls are to a relational database, or an AWS service like Amazon DynamoDB, or to another internal service.

When we use caching

Several factors lead us to consider adding a cache to our system. Many times this begins with an observation about a dependency's latency or efficiency at a given request rate. For example, this could be when we determine that a dependency might start throttling or otherwise be unable to keep up with the anticipated load.

Local caches

Service caches can be implemented either in memory or external to the service. On-box caches, commonly implemented in process memory, are relatively quick and easy to implement and can provide significant improvements with minimal work. On-box caches are often the first approach implemented and evaluated once the need for caching is identified.
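An on-box cache usually needs a size limit so it does not exhaust process memory. One common approach, assumed here rather than taken from the source, is least-recently-used (LRU) eviction, which Python's `collections.OrderedDict` makes short to write. `LRUCache` is a hypothetical name.

```python
from collections import OrderedDict
from typing import Generic, Hashable, Optional, TypeVar

V = TypeVar("V")


class LRUCache(Generic[V]):
    """In-process cache with a fixed capacity; evicts the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data: "OrderedDict[Hashable, V]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[V]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)          # mark as most recently used
        return self._data[key]

    def put(self, key: Hashable, value: V) -> None:
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)   # evict the least recently used entry


cache = LRUCache(capacity=2)
cache.put("a", "A")
cache.put("b", "B")
cache.get("a")        # "a" is now the most recently used
cache.put("c", "C")   # over capacity: "b" is evicted
```

Each server in a fleet keeps its own copy of such a cache, which is exactly where the coherence issues of on-box caches come from.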

External caches

External caches can address many of the issues that on-box caches have. An external cache stores cached data in a separate fleet, for example using Memcached or Redis. Cache coherence issues are reduced because the external cache holds the value used by all servers in the fleet.

Inline vs. side caches

Another decision we need to make when we evaluate different caching approaches is the choice between inline and side caches. Inline caches, or read-through/write-through caches, embed cache management into the main data access API, making cache management an implementation detail of that API.
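To make the distinction concrete, here is a minimal read-through (inline) cache. Callers only ever call `get`; whether a value came from the cache or from the dependency is hidden behind that API. `ReadThroughCache` and the `load_price` loader are hypothetical.

```python
from typing import Callable, Dict, Generic, List, TypeVar

K = TypeVar("K")
V = TypeVar("V")


class ReadThroughCache(Generic[K, V]):
    """Inline cache: loading from the source of truth is an implementation detail."""

    def __init__(self, loader: Callable[[K], V]) -> None:
        self._loader = loader          # hypothetical: fetches from the real dependency
        self._entries: Dict[K, V] = {}

    def get(self, key: K) -> V:
        if key not in self._entries:
            self._entries[key] = self._loader(key)   # miss: load and remember
        return self._entries[key]


loads: List[str] = []


def load_price(sku: str) -> float:
    loads.append(sku)
    return {"sku-1": 9.99, "sku-2": 4.5}[sku]


prices = ReadThroughCache(load_price)
prices.get("sku-1")   # loaded from the dependency
prices.get("sku-1")   # served from the cache
prices.get("sku-2")   # loaded from the dependency
```

With a side cache, by contrast, the calling code itself checks the cache, calls the dependency on a miss, and stores the result, as in the cache-aside pattern.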

Cache expiration

Some of the most challenging cache implementation details are picking the right cache size, expiration policy, and eviction policy. The expiration policy determines how long to retain an item in the cache. The most common policy uses an absolute time-based expiration (that is, it associates a time to live (TTL) with each object as it is loaded).
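Absolute time-based expiration can be sketched as a dictionary that stores each value together with a deadline computed when the item is loaded. `TTLCache` is a hypothetical name; the clock is injectable so the example can advance time without sleeping. Cleanup of expired entries that are never read again, and any size limit, are left out for brevity.

```python
import time
from typing import Any, Callable, Dict, Hashable, Optional, Tuple


class TTLCache:
    """Hypothetical sketch of absolute time-based expiration."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self._clock = clock
        self._data: Dict[Hashable, Tuple[Any, float]] = {}  # key -> (value, expires_at)

    def put(self, key: Hashable, value: Any) -> None:
        # The deadline is fixed when the item is loaded; reads do not extend it.
        self._data[key] = (value, self._clock() + self.ttl)

    def get(self, key: Hashable) -> Optional[Any]:
        item = self._data.get(key)
        if item is None:
            return None                  # never cached
        value, expires_at = item
        if self._clock() >= expires_at:
            del self._data[key]          # expired: drop it so the caller reloads
            return None
        return value


now = [100.0]
cache = TTLCache(ttl_seconds=30, clock=lambda: now[0])
cache.put("rate:USD", 1.08)
fresh = cache.get("rate:USD")     # within 30 seconds of loading
now[0] = 131.0
expired = cache.get("rate:USD")   # past the TTL
```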

Other considerations

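An important consideration is what the cache behavior is when errors are received from the downstream service. One option for dealing with this is to reply to clients using the last cached good value, for example by using a soft TTL / hard TTL pattern: after the soft TTL passes, the cache tries to refresh an entry, but if the refresh fails it keeps serving the last good value until the longer hard TTL expires.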
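The soft TTL / hard TTL idea can be sketched as follows. This is an illustration under assumptions, not Amazon's implementation: `SoftHardTTLCache`, the `loader` callback and the TTL values are hypothetical, and the refresh happens synchronously on the request that finds a stale entry.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class SoftHardTTLCache:
    """Hypothetical sketch: refresh after soft_ttl, but serve the last good value
    while the downstream service fails, up to hard_ttl after it was loaded."""

    def __init__(self, loader: Callable[[Hashable], Any], soft_ttl: float,
                 hard_ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        if soft_ttl > hard_ttl:
            raise ValueError("soft_ttl must not exceed hard_ttl")
        self._loader = loader
        self.soft_ttl = soft_ttl
        self.hard_ttl = hard_ttl
        self._clock = clock
        self._data: Dict[Hashable, Tuple[Any, float]] = {}  # key -> (value, loaded_at)

    def get(self, key: Hashable) -> Any:
        now = self._clock()
        entry = self._data.get(key)
        if entry is not None and now - entry[1] < self.soft_ttl:
            return entry[0]                   # still fresh
        try:
            value = self._loader(key)         # refresh from the downstream service
        except Exception:
            if entry is not None and now - entry[1] < self.hard_ttl:
                return entry[0]               # stale, but within the hard TTL
            raise                             # nothing usable: surface the error
        self._data[key] = (value, now)
        return value


now = [0.0]
healthy = [True]


def load(key: Hashable) -> str:
    if not healthy[0]:
        raise ConnectionError("downstream unavailable")
    return f"value-for-{key}"


cache = SoftHardTTLCache(load, soft_ttl=60, hard_ttl=300, clock=lambda: now[0])
cache.get("k")                 # loaded at t=0
healthy[0] = False
now[0] = 120.0
served_stale = cache.get("k")  # refresh fails; last good value is returned
now[0] = 400.0
try:
    cache.get("k")
    failed = False
except ConnectionError:
    failed = True              # past the hard TTL, the error reaches the caller
```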

CDN reduces load on application origin and improves user experience

A Content Delivery Network (CDN) is a critical component of nearly any modern web application. It used to be that CDNs merely improved the delivery of content by replicating commonly requested files (static content) across a globally distributed set of caching servers. However, CDNs have become much more useful over time.

Caches have also become much more intelligent, providing the ability to inspect information contained in the request header and vary the response based on device type, requestor information, query string, or cookie settings.

Use cases

Access data with microsecond latency and high throughput for lightning-fast application performance.

How to get started

Explore how to create a subnet group and cluster, and connect to a node.

Override API Gateway stage-level caching for method caching

You can override stage-level cache settings for a specific method by enabling or disabling caching for that method, by increasing or decreasing its TTL period, or by turning encryption on or off for its cached responses.

Use method or integration parameters as cache keys to index cached responses

When a cached method or integration has parameters, which can take the form of custom headers, URL paths, or query strings, you can use some or all of the parameters to form cache keys. API Gateway can cache the method's responses, depending on the parameter values used.
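Conceptually, a cache key is built from the request plus the parameters you select. API Gateway does this for you once you configure cache key parameters, so the function below is only a hypothetical model of the idea: `cache_key`, `KEY_PARAMS` and the parameter names are made up for illustration. Parameters that are not part of the key, like `trace` here, do not create separate cache entries.

```python
from typing import Iterable, Mapping, Optional, Tuple

Key = Tuple[str, str, Tuple[Tuple[str, Optional[str]], ...]]


def cache_key(method: str, path: str, params: Mapping[str, str],
              key_params: Iterable[str]) -> Key:
    """Hypothetical model: key on method, path and only the selected parameters."""
    # Sorting by parameter name makes the key independent of parameter order.
    selected = tuple(sorted((name, params.get(name)) for name in set(key_params)))
    return (method.upper(), path, selected)


KEY_PARAMS = ["petId", "Accept-Language"]   # hypothetical cache key parameters
a = cache_key("GET", "/pets", {"petId": "7", "Accept-Language": "en", "trace": "abc"}, KEY_PARAMS)
b = cache_key("GET", "/pets", {"petId": "7", "Accept-Language": "en", "trace": "xyz"}, KEY_PARAMS)
c = cache_key("GET", "/pets", {"petId": "8", "Accept-Language": "en"}, KEY_PARAMS)
# a == b: only `trace` differs, and it is not a key parameter, so both share one entry.
# a != c: a different petId gets its own cached response.
```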

Flush the API stage cache in API Gateway

When API caching is enabled, you can flush your API stage's entire cache to ensure your API's clients get the most recent responses from your integration endpoints.

Invalidate an API Gateway cache entry

A client of your API can invalidate an existing cache entry and reload it from the integration endpoint for individual requests. The client must send a request that contains the Cache-Control: max-age=0 header.
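The flush and invalidation behavior described above can be modeled as follows. `StageCache` and the integration callback are hypothetical; this is not how API Gateway is implemented or configured, and the sketch ignores the authorization that API Gateway can require before honoring `Cache-Control: max-age=0`. It also assumes header names arrive with exactly the `Cache-Control` casing.

```python
from typing import Callable, Dict, List, Mapping


class StageCache:
    """Hypothetical model of a stage cache in front of an integration endpoint."""

    def __init__(self, integration: Callable[[str], str]) -> None:
        self._integration = integration
        self._entries: Dict[str, str] = {}

    def handle(self, path: str, headers: Mapping[str, str]) -> str:
        directives = headers.get("Cache-Control", "").replace(" ", "").lower().split(",")
        wants_refresh = "max-age=0" in directives
        if not wants_refresh and path in self._entries:
            return self._entries[path]          # cached response
        response = self._integration(path)      # reload from the integration endpoint
        self._entries[path] = response          # replace the cache entry
        return response

    def flush(self) -> None:
        self._entries.clear()                   # drop the whole stage cache


version: List[int] = [1]


def integration(path: str) -> str:
    return f"{path} v{version[0]}"


stage = StageCache(integration)
stage.handle("/items", {})                       # "/items v1" is cached
version[0] = 2
cached = stage.handle("/items", {})              # still the cached v1
refreshed = stage.handle("/items", {"Cache-Control": "max-age=0"})  # reloaded: v2
```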

What’s Next?

This was the third post in a long series of posts about data-related services on AWS. Previously, I have talked about RDS in detail. The last post was about specialty (purpose-built) databases on AWS.