What is criterion entropy in decision tree?

Gini index and entropy are the criteria used to calculate information gain. Decision tree algorithms use information gain to decide how to split a node. Both Gini and entropy measure the impurity of a node: a node containing multiple classes is impure, whereas a node containing only one class is pure.
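As a rough illustration of how entropy feeds into information gain, here is a minimal sketch. The function names and the toy labels are assumptions made for this example, not part of any particular library.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())

def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the child nodes."""
    total = len(parent)
    weighted = sum(len(child) / total * entropy(child) for child in children)
    return entropy(parent) - weighted

# Hypothetical node with 4 "yes" and 4 "no" labels, split into two pure children.
parent = ["yes"] * 4 + ["no"] * 4
children = [["yes"] * 4, ["no"] * 4]
gain = information_gain(parent, children)
print(gain)
```

A split that separates the classes perfectly recovers all of the parent's entropy, so the gain equals the parent's entropy in this toy case.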

What is criterion gini in decision tree?

The Gini index, or Gini impurity, is calculated by subtracting the sum of the squared probabilities of each class from one. It tends to favour larger partitions and is very simple to implement. In simple terms, it is the probability that a randomly selected sample would be classified incorrectly if it were labelled at random according to the class distribution in the node.
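The calculation described above can be sketched in a few lines. The function name and the sample labels are illustrative assumptions.

```python
from collections import Counter

def gini_impurity(labels):
    """One minus the sum of squared class probabilities."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())

pure = gini_impurity(["a", "a", "a", "a"])    # a single class
mixed = gini_impurity(["a", "a", "b", "b"])   # two classes, evenly split
print(pure, mixed)
```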

What are the conditions for stopping criterion in decision tree?

Stopping criterion. If we keep growing the tree until every leaf node reaches the lowest possible impurity, the data have typically been overfitted. If splitting is stopped too early, the error on the training data is not sufficiently low and performance suffers due to bias.
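One way to picture a stopping rule is as a check made before each split. The sketch below is only an assumption about what such a check might look like; the parameter names (`max_depth`, `min_samples_split`, `min_impurity`) and their default values are hypothetical.

```python
def should_stop(depth, n_samples, impurity,
                max_depth=5, min_samples_split=10, min_impurity=0.0):
    """Return True when a node should become a leaf instead of being split further."""
    if impurity <= min_impurity:        # node is already pure (or pure enough)
        return True
    if depth >= max_depth:              # tree has reached its depth limit
        return True
    if n_samples < min_samples_split:   # too few samples to justify a split
        return True
    return False

print(should_stop(depth=2, n_samples=100, impurity=0.4))
print(should_stop(depth=5, n_samples=100, impurity=0.4))
```

Loosening the limits lets the tree grow deeper and fit the training data more closely; tightening them stops growth earlier and pushes the model toward higher bias.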

What is value in decision tree?

Value shows how the samples at a node are distributed among the classes. At the root node, for example, the 32561 samples comprise 24720 samples of one class and 7841 of the other.

Which is better Gini or entropy?

For a two-class problem, entropy ranges from 0 to 1 and Gini impurity ranges from 0 to 0.5. Gini impurity is often preferred for selecting the best features because it avoids computing logarithms and is therefore cheaper to evaluate.

Is Gini and entropy the same?

The Gini index and entropy differ mainly in their range: for a two-class problem, the Gini index takes values in the interval [0, 0.5], whereas entropy takes values in the interval [0, 1]. In the following figure, both of them are represented.
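The two intervals can be checked numerically for the two-class case. This is a small sketch with assumed helper names, where `p` is the proportion of one of the two classes.

```python
import math

def binary_gini(p):
    """Gini impurity of a two-class node where one class has proportion p."""
    return 1.0 - (p ** 2 + (1.0 - p) ** 2)

def binary_entropy(p):
    """Entropy (in bits) of a two-class node where one class has proportion p."""
    if p in (0.0, 1.0):
        return 0.0  # a pure node has no uncertainty
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))

for p in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"p={p:.2f}  gini={binary_gini(p):.3f}  entropy={binary_entropy(p):.3f}")
```

Both measures are zero for a pure node and peak at an even split, where Gini reaches 0.5 and entropy reaches 1.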

What is the stopping criterion?

Stopping criterion: Since an iterative method computes successive approximations to the solution of a linear system, a practical test is needed to determine when to stop the iteration. Ideally this test would measure the distance of the last iterate to the true solution, but this is not possible.

What is overfitting in decision tree?

Overfitting is the condition in which the model fits the training data completely but fails to generalize to unseen test data. It arises when the model memorizes the noise in the training data and fails to capture the important patterns.

What are nodes in decision tree?

Decision tree symbols

| Name | Meaning |
| --- | --- |
| Decision node | Indicates a decision to be made |
| Chance node | Shows multiple uncertain outcomes |
| Alternative branches | Each branch indicates a possible outcome or action |
| Rejected alternative | Shows a choice that was not selected |

What are Hyperparameters in decision tree?

Regularization hyperparameters: Decision trees are often called non-parametric models because the number of parameters is not determined before training, so the model is free to fit the training data closely. To avoid this overfitting, you need to constrain the decision tree during training.

How do you read decision tree results?

Interpretation is simple: starting from the root node, you move to the next nodes, and the edges tell you which subsets you are looking at. Once you reach a leaf node, it tells you the predicted outcome.
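Reading a tree this way amounts to a short loop. The sketch below uses a hypothetical hand-written tree stored as nested dictionaries; the feature names, thresholds and predictions are made up for illustration.

```python
# Hypothetical tree: internal nodes test "feature <= threshold", leaves hold a prediction.
tree = {
    "feature": "age", "threshold": 30,
    "left": {"prediction": "no"},
    "right": {
        "feature": "income", "threshold": 50000,
        "left": {"prediction": "no"},
        "right": {"prediction": "yes"},
    },
}

def predict(node, sample):
    """Walk from the root to a leaf, following the edge that matches the sample."""
    while "prediction" not in node:
        branch = "left" if sample[node["feature"]] <= node["threshold"] else "right"
        node = node[branch]
    return node["prediction"]

result = predict(tree, {"age": 42, "income": 80000})
print(result)
```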

How do you analyze a decision tree?

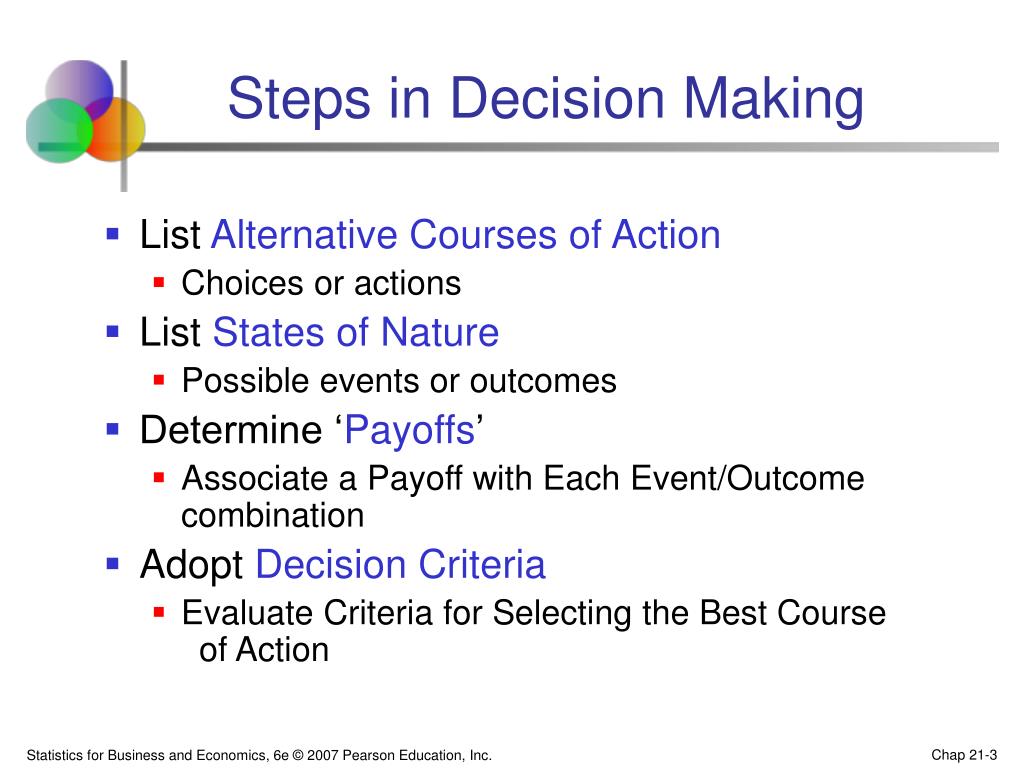

Follow these five steps to create a decision tree diagram to analyze uncertain outcomes and reach the most logical solution.

1. Start with your idea. Begin your diagram with one main idea or decision. ...
2. Add chance and decision nodes. ...
3. Expand until you reach end points. ...
4. Calculate tree values. ...
5. Evaluate outcomes.
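The "calculate tree values" step is commonly done by working backwards from the end points: a chance node takes the probability-weighted average of its branches, and a decision node takes its best option. The sketch below assumes that approach; the tree layout, probabilities and payoffs are hypothetical.

```python
def tree_value(node):
    """Roll back a decision tree: average at chance nodes, take the maximum at decision nodes."""
    kind = node["type"]
    if kind == "end":
        return node["payoff"]
    if kind == "chance":
        return sum(p * tree_value(child) for p, child in node["branches"])
    # Otherwise this is a decision node: pick the option with the highest value.
    return max(tree_value(child) for _, child in node["options"])

tree = {
    "type": "decision",
    "options": [
        ("launch", {"type": "chance", "branches": [
            (0.6, {"type": "end", "payoff": 100}),
            (0.4, {"type": "end", "payoff": -50}),
        ]}),
        ("do nothing", {"type": "end", "payoff": 0}),
    ],
}
best_value = tree_value(tree)
print(best_value)
```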

Should Gini index be high or low decision tree?

A Gini index of 0.5 indicates that the elements are equally distributed over two classes. When building the decision tree, the features with the lowest Gini index are preferred.

What is Gini in random forest?

Random forests let us look at feature importances, that is, how much the Gini index decreases at the splits made on a feature. The more the Gini index decreases for a feature, the more important it is. The figure below rates the features from 0–100, with 100 being the most important.
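The decrease at a single split can be sketched as the parent's Gini impurity minus the size-weighted impurity of its children. The helper names and toy labels below are assumptions; a full importance score would additionally accumulate these decreases over every split on the feature and across the trees of the forest.

```python
from collections import Counter

def gini(labels):
    """One minus the sum of squared class probabilities."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())

def gini_decrease(parent, left, right):
    """Parent impurity minus the size-weighted impurity of the two children."""
    n = len(parent)
    weighted_children = len(left) / n * gini(left) + len(right) / n * gini(right)
    return gini(parent) - weighted_children

parent = ["a", "a", "a", "b", "b", "b"]
decrease_good = gini_decrease(parent, ["a", "a", "a"], ["b", "b", "b"])  # separates the classes
decrease_poor = gini_decrease(parent, ["a", "b", "a"], ["b", "a", "b"])  # barely separates them
print(decrease_good, decrease_poor)
```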

Should Gini be high or low?

In economics, the Gini index is a measure of the distribution of income across a population. A higher Gini index indicates greater inequality, with high-income individuals receiving much larger percentages of the population's total income.

What is Gini index in CART?

In the CART algorithm, the Gini index is the measure used to evaluate splits. It quantifies how strongly each field (feature) affects the resulting class of an instance, and it is widely used in real-life applications.

What is the most likelihood criterion?

According to the most likelihood criterion, the decision-maker assumes that the event that has the highest probability will happen in a Chance node. Based on that assumption, the decision-maker chooses the Action that has the highest payoff. Notice the following decision tree.
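The rule can be sketched in two steps: pick the most probable event, then pick the action with the best payoff under that event. The events, probabilities and payoffs below are hypothetical.

```python
# Hypothetical probabilities of the events at a chance node.
probabilities = {"high demand": 0.5, "medium demand": 0.3, "low demand": 0.2}

# Hypothetical payoff of each action under each event.
payoffs = {
    "build large plant": {"high demand": 200, "medium demand": 50, "low demand": -100},
    "build small plant": {"high demand": 90, "medium demand": 70, "low demand": 20},
}

# Step 1: assume the most probable event will happen.
most_likely_event = max(probabilities, key=probabilities.get)

# Step 2: choose the action with the highest payoff under that event.
best_action = max(payoffs, key=lambda action: payoffs[action][most_likely_event])
print(most_likely_event, best_action)
```

Note that this rule ignores every event except the most probable one, so a large loss under a less likely event does not affect the choice.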

Which criterion is used to find a middle ground between the extremes posed by the optimist and pessimist criteria?

You can select the one that gives the highest number based on such evaluation. This criterion is called the Optimism-Pessimism rule. Suggested by Leonid Hurwicz in 1951, this criterion attempts to find a middle ground between the extremes posed by the optimist and pessimist criteria.
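A common way to write this rule is as a weighted average of the best and worst outcomes of each option, with a coefficient of optimism `alpha` between 0 and 1. The sketch below assumes that formulation; the outcome figures are hypothetical.

```python
def hurwicz_score(outcomes, alpha):
    """Weighted average of the best and worst outcome; alpha is the coefficient of optimism."""
    return alpha * max(outcomes) + (1 - alpha) * min(outcomes)

# Hypothetical possible outcomes for two options.
options = {"Investment A": [-20, 40, 120], "Investment B": [10, 30, 60]}

def best_option(alpha):
    """Return the option with the highest Hurwicz score for the given alpha."""
    return max(options, key=lambda name: hurwicz_score(options[name], alpha))

for alpha in (0.0, 0.5, 1.0):
    print(alpha, best_option(alpha))
```

With `alpha = 1` the rule reduces to the optimist criterion (only the maximum counts), and with `alpha = 0` it reduces to the pessimist criterion (only the minimum counts).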

How to judge an option?

From the above chart, you can judge an option by looking at its minimum and maximum possible outcomes at the same time. A pessimistic person will notice that Investment B's minimum outcome is higher than Investment A's minimum outcome, so they may choose Investment B.

Which theorem is based on the assumption that a decision-maker is maximizing the expected value of?

This theorem is the basis for the expected utility theory.

Why are decision trees less appropriate?

Decision trees are less appropriate for estimation tasks where the goal is to predict the value of a continuous attribute. Decision trees are prone to errors in classification problems with many classes and a relatively small number of training examples. Decision trees can be computationally expensive to train. The process of growing a decision tree is ...

What are the weaknesses of decision trees?

The weaknesses of decision tree methods:

1. Decision trees are less appropriate for estimation tasks where the goal is to predict the value of a continuous attribute.
2. Decision trees are prone to errors in classification problems with many classes and a relatively small number of training examples.
3. Decision trees can be computationally expensive to train. At each node, each candidate splitting field must be sorted before its best split can be found. In some algorithms, combinations of fields are used and a search must be made for optimal combining weights. Pruning algorithms can also be expensive since many candidate sub-trees must be formed and compared.

Do you have to sort the candidate splitting field before finding the best split?

At each node, each candidate splitting field must be sorted before its best split can be found. In some algorithms, combinations of fields are used and a search must be made for optimal combining weights. Pruning algorithms can also be expensive since many candidate sub-trees must be formed and compared.
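To see why sorting comes first, consider a simple search over one numeric field: once the values are sorted, only the midpoints between neighbouring values need to be tried as thresholds. The sketch below is a deliberately naive version that uses Gini impurity as the split measure (an assumption; other measures work the same way), with made-up data.

```python
from collections import Counter

def gini(labels):
    """One minus the sum of squared class probabilities."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())

def best_threshold(values, labels):
    """Sort one numeric field, then test the midpoint between each pair of neighbours."""
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    best = (float("inf"), None)  # (weighted impurity, threshold)
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal values
        left = [label for _, label in pairs[:i]]
        right = [label for _, label in pairs[i:]]
        impurity = len(left) / n * gini(left) + len(right) / n * gini(right)
        if impurity < best[0]:
            best = (impurity, (pairs[i - 1][0] + pairs[i][0]) / 2)
    return best

impurity, threshold = best_threshold([7, 2, 9, 3, 8, 1], ["b", "a", "b", "a", "b", "a"])
print(impurity, threshold)
```

This version recomputes the class counts for every candidate, which is easy to read but slow; practical implementations typically update the counts incrementally while scanning the sorted values.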

Is decision tree classifier good?

Decision trees can handle high-dimensional data. In general, decision tree classifiers have good accuracy. Decision tree induction is a typical inductive approach to learn knowledge ...

What are decision trees?

Decision trees are a way to diagram the steps required to solve a problem or make a decision. They help us look at decisions from a variety of angles, so we can find the one that is most efficient. The diagram can start with the end in mind, or it can start with the present situation in mind, but it leads to some end result or conclusion -- the expected outcome. The result is often a goal or a problem to solve.

How do decision trees influence machine learning?

Decision trees have influenced regression models in machine learning. While designing the tree, developers set the nodes’ features and the possible attributes of that feature with edges.

How does Gini impurity work in decision trees?

In decision trees, Gini impurity is used to split the data into different branches. Decision trees are used for classification and regression. At each step, impurity is used to select the best attribute: the candidate split whose child nodes have the lowest weighted impurity is chosen. The impurity of a node is one minus the sum of the squared proportions of each class among its points. If all the points in a node belong to the same class, the impurity of that node is zero.

What is 0.5 in statistics?

Here, 0.5 is the total probability of classifying a data point incorrectly, which is exactly 50%.

When is regression tree used?

A regression tree is used when the predicted outcome is a real number, and a classification tree is used to predict the class to which the data belongs. The two are collectively called Classification and Regression Trees (CART).
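The difference shows up at the leaves. In the usual CART setup, a regression leaf predicts the mean of the training targets that reach it, while a classification leaf predicts the most common class. The sketch below assumes that setup; the function names and sample values are illustrative.

```python
from collections import Counter
from statistics import mean

def regression_leaf(targets):
    """A regression leaf predicts a real number: the mean of its training targets."""
    return mean(targets)

def classification_leaf(labels):
    """A classification leaf predicts a class: the most common label among its samples."""
    return Counter(labels).most_common(1)[0][0]

predicted_price = regression_leaf([200.0, 220.0, 240.0])
predicted_class = classification_leaf(["spam", "ham", "spam"])
print(predicted_price, predicted_class)
```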

Do decision trees mimic human subjective power?

To recognize such problems, keep in mind that decision trees somewhat mimic human subjective judgment. A problem that involves more human-like questioning is therefore likely to be better suited to decision trees. The underlying concept of decision trees is easy to understand because of its tree-like structure.