Data virtualization tools commonly include the following features:

- Connect to multiple data sources

- Support for on-premises, cloud, and hybrid data sources

- Support for different data types

- Abstraction of the technical characteristics of data, such as API, query language, structure, and location

- Centralize data acquisition logic in a virtualized metadata layer

- Real-time data retrieval, delivery, and updates
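The abstraction feature in the list above can be illustrated with a minimal Python sketch. It assumes a hypothetical `SourceConnector` interface, which is not any vendor's API. Each connector hides its source's query language and returns plain dictionaries, so the consumer reads a SQL table and an API-style source the same way. The in-memory SQLite database and the `InMemoryConnector` records are stand-ins for real sources.

```python
import sqlite3
from abc import ABC, abstractmethod
from typing import Iterator


class SourceConnector(ABC):
    """Hypothetical common interface that hides each source's API and query language."""

    @abstractmethod
    def rows(self) -> Iterator[dict]:
        """Yield records as plain dicts, whatever the underlying storage is."""


class SQLiteConnector(SourceConnector):
    def __init__(self, conn: sqlite3.Connection, table: str):
        self.conn = conn
        self.table = table  # trusted here; real code should validate identifiers

    def rows(self) -> Iterator[dict]:
        cur = self.conn.execute(f"SELECT * FROM {self.table}")
        names = [d[0] for d in cur.description]  # column names from the cursor
        for values in cur:
            yield dict(zip(names, values))


class InMemoryConnector(SourceConnector):
    """Stands in for an API or file source that already returns records."""

    def __init__(self, records: list[dict]):
        self.records = records

    def rows(self) -> Iterator[dict]:
        yield from self.records


def read_all(connectors: list[SourceConnector]) -> list[dict]:
    # The consumer sees one uniform stream of dicts, not two technologies.
    return [row for c in connectors for row in c.rows()]


# Usage: one relational source and one API-style source read the same way.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
db.execute("INSERT INTO customers VALUES (1, 'Ada')")
api = InMemoryConnector([{"id": 2, "name": "Grace"}])
combined = read_all([SQLiteConnector(db, "customers"), api])
```

Adding a new kind of source means writing one more connector class; code that calls `read_all` does not change.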

What is data virtualization and how does it work?

Data virtualization is a data integration technology that provides seamless, real-time access to data in one place. Data virtualization tools provide a layer that combines enterprise data from different sources and creates a holistic view of the data.

What is data-as-a-service virtualization?

Data virtualization is one of the technologies that enable Data-as-a-Service (DaaS) solutions. It is a data management approach that enables data processing without dealing with the technical aspects of data storage.

What are the best data virtualization tools?

The best data virtualization tools include TIBCO Data Virtualization, Oracle Data Service Integrator, Denodo, Actifio Sky, Red Hat JBoss Data Virtualization, AtScale, and Stone Bond Enterprise Enabler.

What is the best data virtualization tool for JBoss?

Red Hat JBoss Data Virtualization is a popular data virtualization tool that offers data supply and data integration for multiple data sources, presents those sources as a single source, and delivers the required data in the form users want.

What is data virtualization?

Data virtualization has many uses, since it is simply the process of inserting a layer of data access between disparate data sources and data consumers, such as dashboards or visualization tools. Some of the more common use cases include:

How does data virtualization help with unstructured data?

Bridging structured and unstructured: Data virtualization can bridge the semantic differences between unstructured and structured data, so integration is easier and data quality improves across the board.
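One way to picture this bridging is to lift free text into the schema that a structured source already uses, so both can be queried together. The sketch assumes the messages follow a recognizable pattern (`Customer #<id> says: <issue>`). The data and the pattern are hypothetical, and real unstructured data usually needs much more robust extraction than a single regular expression.

```python
import re

# Structured source: records already in the target schema (hypothetical data).
tickets = [{"customer_id": 1, "issue": "late delivery"}]

# Unstructured source: free-text messages (hypothetical data).
emails = [
    "Customer #2 says: invoice total is wrong",
    "Newsletter unsubscribe request",
]

# Assumed convention: messages mention "Customer #<id> says: <issue>".
PATTERN = re.compile(r"customer #(\d+) says: (.+)", re.IGNORECASE)


def emails_as_rows(messages):
    """Lift free text into the same schema as the structured tickets."""
    for text in messages:
        match = PATTERN.search(text)
        if match:  # messages without a recognizable customer reference are skipped
            yield {"customer_id": int(match.group(1)), "issue": match.group(2).strip()}


# Both sources now share one schema and can be consumed as a single dataset.
unified = tickets + list(emails_as_rows(emails))
```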

Why is data virtualization important?

Because of its abstraction and federation, data virtualization is ideal for use with Big Data. It hides the complexities of the Big Data stores, whether they are Hadoop or NoSQL stores, and makes it easy to integrate data from these stores with other data within the enterprise.

Why is data visualization achieved through data virtualization?

Data visualization is achieved through data virtualization because data virtualization pulls the data from many different sources. This is not the same as data federation. More on this later, but for now: data virtualization and data federation are two different subjects, although some people incorrectly use the terms interchangeably.

Why is virtualization important for call centers?

With data virtualization spanning the data silos, everyone from a call center to a database manager can see the entire span of data stores from a single point of access.

What is data federation?

Data federation is a type of data virtualization. Both are techniques designed to simplify access for applications to data. The difference is that data federation is used to provide a single form of access to virtual databases with strict data models. Data virtualization doesn’t use a data model and can access a variety of data types.

What is a logical data warehouse?

For starters, unlike a data warehouse, where data is prepared, filtered, and stored, an LDW stores no data. Data resides at the source, whatever that may be, including a traditional data warehouse. Because of this, no new storage infrastructure is needed; you use the existing data stores. A good LDW package federates all data sources and provides a single platform for integration using a range of services, such as SOAP, REST, OData, SharePoint, and ADO.NET.
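The "no data is stored" property can be illustrated with a sketch in which a view keeps only its query definition and re-reads the source on every call. `VirtualView` is a hypothetical name chosen for illustration, and the `sales` table is sample data. The point is that a change at the source is visible on the next read without any copy or reload step, whereas a warehouse would need to refresh its stored copy.

```python
import sqlite3


class VirtualView:
    """A view that stores only *how* to fetch data, never the data itself."""

    def __init__(self, conn: sqlite3.Connection, sql: str):
        self.conn = conn
        self.sql = sql  # the query definition is the only thing kept

    def fetch(self) -> list[tuple]:
        # Every call goes back to the source, so results are always current.
        return self.conn.execute(self.sql).fetchall()


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE sales (region TEXT, amount REAL)")
source.execute("INSERT INTO sales VALUES ('north', 100.0)")

view = VirtualView(source, "SELECT region, SUM(amount) FROM sales GROUP BY region")
before = view.fetch()
source.execute("INSERT INTO sales VALUES ('north', 50.0)")  # change at the source
after = view.fetch()  # the view reflects it immediately
```

The trade-off is that every read costs a round trip to the source, which is why production tools typically add caching and query pushdown.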

What are Data Virtualization Tools?

Data virtualization provides access to data while hiding technical aspects like location, structure, or access language. This allows applications to access data without having to know where it resides. This can be used in (for instance) data federation, where data in separate data stores are made to look like a single data store to the consuming application.
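The federation example in that paragraph can be sketched minimally in Python. Two independent in-memory SQLite databases stand in for separate data stores. A hypothetical `federated_query` function sends the same query to each store, pushes an optional filter down to them, and merges the answers, so the caller sees what looks like one store. Real federation engines also plan joins and translate between query dialects; this sketch covers only a union.

```python
import sqlite3


def make_store(rows):
    """Create an independent in-memory database standing in for one data store."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, country TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    return conn


eu_store = make_store([(1, "Ada", "UK"), (2, "Linus", "FI")])
us_store = make_store([(3, "Grace", "US")])


def federated_query(stores, country=None):
    """Send the same query to every store and merge the answers.

    The filter is pushed down to each source, so only matching rows come back.
    """
    sql = "SELECT id, name, country FROM customers"
    params = ()
    if country is not None:
        sql += " WHERE country = ?"
        params = (country,)
    results = []
    for conn in stores:
        results.extend(conn.execute(sql, params).fetchall())
    return sorted(results)  # sort by id so the merged result is deterministic


everyone = federated_query([eu_store, us_store])
only_us = federated_query([eu_store, us_store], country="US")
```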

What is Enterprise Enabler?

Enterprise Enabler is a data virtualization or data integration platform from Stone Bond Technologies in Houston, Texas.

What is Dremio?

Dremio, from the company of the same name in Santa Clara, is described as a data lake engine. Dremio operationalizes data lake storage and speeds analytics processes, boasting a high-performance and high-efficiency query engine while also democratizing data access for data scientists…

What is Cisco data virtualization?

Cisco Data Virtualization, formerly Composite (acquired July 2013), is, as the name might suggest, a data or datacenter virtualization platform.

What is Keenlog Analytics?

Keenlog Analytics provides intelligence to SMBs and supports decision-makers with the data visibility they need to take control and act to meet their logistics’ financial and market goals. The vendor states the product includes the following: …

What is CONNX software?

CONNX, from Software AG (acquired in 2016), is a mainframe integration solution that allows users to access and integrate mainframe data, relational data, and cloud data wherever it resides and however it is structured, without altering core systems.

What is Oracle Data Service Integrator?

Oracle Data Service Integrator is standards-based and declarative, and it enables reuse of data services.

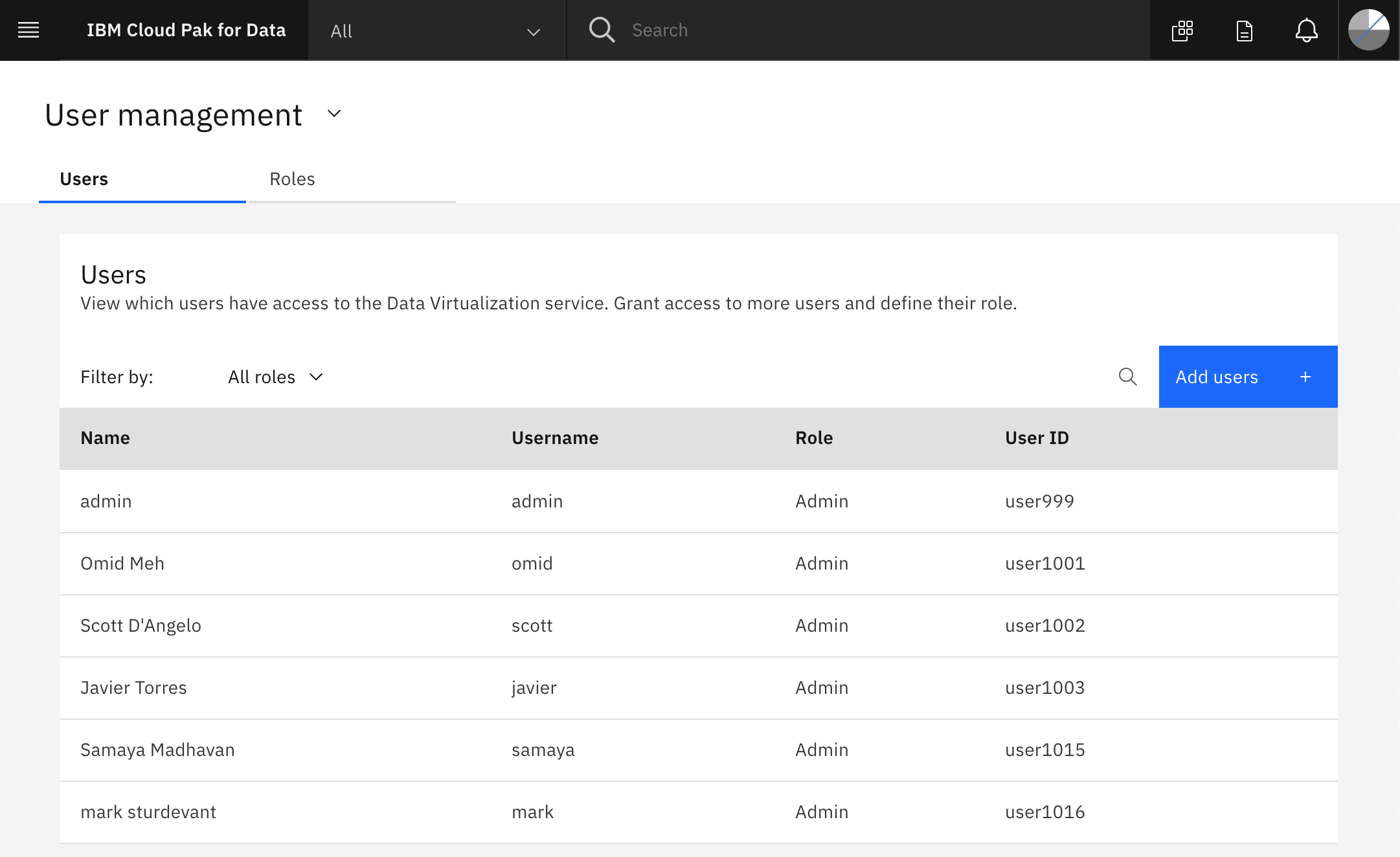

What is IBM Cloud Pak for Data?

With the IBM Cloud Pak for Data AutoSQL framework, use a single distributed query engine across multiple data sources. AutoSQL combines with data virtualization to query across clouds, databases, data lakes, warehouses and streaming data without copying or data movement. You gain faster access to the data you need most.
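The idea of one query engine spanning several sources without copying data can be illustrated with SQLite's `ATTACH DATABASE`, which lets a single connection query several separate databases in one SQL statement. This is a small stand-in chosen for illustration, not a description of how IBM Cloud Pak for Data or AutoSQL works. The `crm` and `billing` schemas and their data are hypothetical.

```python
import sqlite3

# One connection acts as the single query engine (an assumption for this sketch).
engine = sqlite3.connect(":memory:")
engine.execute("ATTACH DATABASE ':memory:' AS crm")
engine.execute("ATTACH DATABASE ':memory:' AS billing")

# Each attached database is a separate store with its own tables.
engine.execute("CREATE TABLE crm.customers (id INTEGER, name TEXT)")
engine.execute("CREATE TABLE billing.invoices (customer_id INTEGER, amount REAL)")
engine.executemany("INSERT INTO crm.customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])
engine.executemany(
    "INSERT INTO billing.invoices VALUES (?, ?)", [(1, 40.0), (1, 60.0), (2, 25.0)]
)

# One SQL statement reads both databases in place; nothing is copied between them.
totals = engine.execute(
    """
    SELECT c.name, SUM(i.amount)
    FROM crm.customers AS c
    JOIN billing.invoices AS i ON i.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
```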

How does data virtualization help manufacturing?

Data virtualization and edge computing can achieve reliable operations for the manufacturing industry. Having near real-time analytics performed at the site where data is being generated can help organizations identify issues promptly and, in so doing, prevent unexpected operational outages and interruptions.

What is the impact of virtualization on financial institutions?

With data virtualization, institutions don’t have to move their data to a central data center or to the cloud for processing and analysis.

How do automated manufacturing environments prioritize alarms?

Automated manufacturing environments prioritize alarms by augmenting their quality and process techniques with meta-learning or rules. With data virtualization and machine learning methods, manufacturers can increasingly sift through patterns of alarms and convert them into actionable information.
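As a rough illustration of the rules-based side of this approach, the sketch below ranks alarms with a short list of hypothetical rules: a safety code outranks a frequently repeating alarm, which outranks everything else. The `Alarm` fields, rule thresholds, and machine names are assumptions, and the meta-learning and machine-learning parts are not shown.

```python
from dataclasses import dataclass


@dataclass
class Alarm:
    machine: str
    code: str
    count_last_hour: int


# Hypothetical rules as (predicate, priority); a lower number is more urgent.
RULES = [
    (lambda a: a.code.startswith("SAFETY"), 1),
    (lambda a: a.count_last_hour >= 10, 2),  # a repeating alarm suggests a real fault
]
DEFAULT_PRIORITY = 3


def priority(alarm: Alarm) -> int:
    for predicate, level in RULES:
        if predicate(alarm):
            return level  # the first matching rule wins
    return DEFAULT_PRIORITY


def triage(alarms):
    # sorted() is stable, so alarms with equal priority keep their arrival order.
    return sorted(alarms, key=priority)


queue = triage([
    Alarm("press-1", "TEMP_HIGH", 2),
    Alarm("press-2", "VIBRATION", 14),
    Alarm("robot-3", "SAFETY_DOOR", 1),
])
```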

What is an IoT sensor?

IoT sensors are creating massive amounts of data, and as the number of sensors grows, data volume is set to explode. Moving data analytics to the edge with a data platform that can analyze both batch and streaming data speeds up and simplifies analytics while providing insights where and when they are needed.

Why is brick and mortar important?

Brick-and-mortar stores are looking for any competitive advantage they can get over web-based retailers. Data virtualization enables near-instant edge analytics, providing unprecedented insights into consumer behavior. This helps retailers better target merchandise, sales and promotions, and do more to provide exceptional customer experiences.

How to break down silos in business?

Companies often try to break down silos by copying disparate data into central data stores, such as data marts, data warehouses, and data lakes, for analysis. This is costly and error-prone when most companies manage an average of 400 unique data sources for business intelligence.¹ With data virtualization, you can access data at the source without moving it, accelerating time to value with faster and more accurate queries.

Why is data virtualization important?

Data virtualization is the fastest approach to merging disparate data sources for analytics. It also matters because analytics itself is becoming more important: as organizations’ interest in analytics and data-driven decision making rises, the importance of a logical data warehouse becomes more apparent, since it enables a faster analytics process.

How does data virtualization help organizations?

Data virtualization enables organizations to increase analytics effectiveness and reduce analytics costs by creating a virtual layer that aggregates data from multiple sources. This lets companies access data from multiple sources without setting up a costly data warehouse or spending time on data preparation. The approach is also called logical data warehousing (LDW), data federation, virtual databases, or decentralized data warehousing.

What is LDW in data?

The Logical Data Warehouse (LDW) is the most common implementation of data virtualization; the term was coined by Gartner in 2011. An LDW differs from a data warehouse because it is not monolithic: besides the organization’s core data warehouse, its architecture includes external data sources such as enterprise systems, web data, and cloud data.

Why did Anadolu Hayat partner with Delphix?

Solution: Anadolu Hayat partnered with Delphix to ingest its production databases so that it could provide its test and solution development teams with more accurate data in less time and with fewer resources.

How much data is needed for a logical data warehouse?

A sufficient number of data sources (more than 10) is needed for a logical data warehouse to pay off in terms of efficiency. Otherwise, the trade-off between cost and speed may not be worth it.

How fast is a logical data warehouse?

A logical data warehouse is up to 90% faster to implement than a traditional data warehouse, since less effort is required for its setup.

Why is Anadolu Hayat facing storage challenges?

Challenge: Anadolu Hayat is an insurance company in Turkey. It was facing storage challenges because it was planning, in parallel, to move its data center. These shortages led to problems with database creation requests, and it would take more than a week to export/import a database from production systems.

What is Oracle Big Data?

Oracle Big Data SQL enables organizations to immediately analyze data across Apache Hadoop, Apache Kafka, NoSQL, and Oracle Database, leveraging their existing SQL skills, security policies, and applications with extreme performance. It has been a great experience working with it.

What is a Gartner peer review?

Gartner Peer Insights reviews constitute the subjective opinions of individual end users based on their own experiences, and do not represent the views of Gartner or its affiliates.

How has data virtualization improved business?

Data virtualization has helped us achieve three major things: reduced expenses, reduced risk, and improved overall revenue. We can easily use all collected data for various business purposes. And at least we have a single access point to monitor our data.

What is Discovery Pro?

Discovery Pro helps you get a graphical overview of the market, compare vendors and create custom shortlists as per your business needs.

What is data virtualization?

Data virtualization technology is based on the execution of distributed data management processing, primarily for queries, against multiple heterogeneous data sources, and federation of query results into virtual views. This is followed by the consumption of these virtual views by applications, query/reporting tools, message-oriented middleware or other data management infrastructure components. Data virtualization can be used to create virtualized and integrated views of data in-memory, rather than executing data movement and physically storing integrated views in a target data structure. It provides a layer of abstraction above the physical implementation of data, to simplify querying logic.
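The steps in that definition (send work to each heterogeneous source, then federate the partial results into a virtual view) can be sketched in Python. This is a minimal example under stated assumptions: the `orders` table and the `catalog_api` records are hypothetical, the list of dicts stands in for a REST response, and the join happens in memory rather than in a production query optimizer.

```python
import sqlite3

# Source A: relational database (hypothetical schema).
orders_db = sqlite3.connect(":memory:")
orders_db.execute("CREATE TABLE orders (product_id INTEGER, qty INTEGER, price REAL)")
orders_db.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(10, 2, 5.0), (10, 1, 5.0), (20, 4, 2.5)],
)

# Source B: records as a REST service might return them (hypothetical data).
catalog_api = [
    {"product_id": 10, "category": "books"},
    {"product_id": 20, "category": "games"},
]


def revenue_by_category():
    # Step 1: push the aggregation down to the source that can do it best.
    per_product = orders_db.execute(
        "SELECT product_id, SUM(qty * price) FROM orders GROUP BY product_id"
    ).fetchall()
    # Step 2: fetch the other source and build an in-memory lookup.
    category_of = {p["product_id"]: p["category"] for p in catalog_api}
    # Step 3: federate the partial results into one virtual view (never stored).
    view = {}
    for product_id, revenue in per_product:
        category = category_of.get(product_id, "unknown")
        view[category] = view.get(category, 0.0) + revenue
    return view
```

Pushing the `SUM` into SQLite keeps the data transferred from source A small: only one row per product reaches the in-memory join.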


Top 10 Data Virtualization Tools

Denodo

- The Denodo data virtualization tool helps virtualize data and gain insights from it. A data catalog feature was added in the latest version of Denodo. It efficiently performs operations such as combining, virtualizing, identifying, and cataloging data from the source.

Red Hat JBoss Data Virtualization

- The Red Hat JBoss Data Virtualization tool is a good choice for developer-led organizations and for those using microservices and containers to build and enable a virtual data layer that abstracts disparate data sources.

Frequently Asked Questions

- What is a Data Virtualization tool?

Data virtualization tools help create a holistic overview of the data. This view can be used to perform operations on the data without even knowing its original location. Data virtualization tools help reduce costs and errors.

- How is Data Virtualization done?

Data virtualization is done through a middle layer that provides virtual access to various data sources and unifies them. This layer delivers data from the sources on demand and can even reflect updates in real time.

Conclusion

- The market is moving towards data, and many new techniques and technologies are being developed to meet different needs as efficiently as possible. Data virtualization helps create views and perform operations on data without actually moving the data. Data virtualization also protects the original data and saves storage by creating thin copies so that operati…


Data Virtualization Tools

- Data virtualization platforms are all designed to span disparate data sources through a unified interface, but each gets there by a different route. A few big names have entered the market and since left. These include Cisco, which sold its data virtualization product to TIBCO in 2017, and IBM, which got into the market with a big splash...

Data Virtualization vs Data Integration

- Data virtualization tools provide the following features:

  1. Evaluate multiple sources of data as one source
  2. Integrate and convert data to create a virtual model of data
  3. Virtualize access to data in real time, without transferring it
  4. Advanced query engine
  5. Centralize all of the metadata
  6. Simple data analytics
  7. Publication...
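Centralizing metadata can be pictured as a catalog that maps logical dataset names to their physical locations. The sketch below is a hypothetical, minimal version: `MetadataCatalog`, `DatasetEntry`, and the source names are invented for illustration, and real products typically track more, such as schemas and access rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetEntry:
    source: str    # hypothetical source identifier, e.g. "crm-db"
    location: str  # table, file path, or endpoint inside that source
    owner: str


class MetadataCatalog:
    """A single place that records where every virtual dataset lives."""

    def __init__(self):
        self._entries: dict[str, DatasetEntry] = {}

    def register(self, name: str, entry: DatasetEntry) -> None:
        if name in self._entries:
            raise ValueError(f"dataset {name!r} is already registered")
        self._entries[name] = entry

    def resolve(self, name: str) -> DatasetEntry:
        # Consumers ask by logical name; physical details stay in the catalog.
        try:
            return self._entries[name]
        except KeyError:
            raise KeyError(f"unknown dataset {name!r}") from None

    def by_source(self, source: str) -> list[str]:
        # Useful for impact analysis: which datasets depend on one source?
        return sorted(n for n, e in self._entries.items() if e.source == source)


catalog = MetadataCatalog()
catalog.register("customers", DatasetEntry("crm-db", "public.customers", "sales"))
catalog.register("invoices", DatasetEntry("billing-api", "/v1/invoices", "finance"))
catalog.register("leads", DatasetEntry("crm-db", "public.leads", "marketing"))
```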
