Which AWS Regions support macOS?

macOS support is limited to the following AWS Regions:

- US East (N. Virginia) (us-east-1)

- US East (Ohio) (us-east-2)

- US West (Oregon) (us-west-2)

- Europe (Ireland) (eu-west-1)

- Asia Pacific (Singapore) (ap-southeast-1)

Is AWS Glue expensive?

It can be: a development endpoint still costs about $1,500 per month. However, AWS has released the AWS Glue libraries, which can be used to set up a local development environment. This helps integrate Glue ETL jobs with the Maven build system for building and testing.

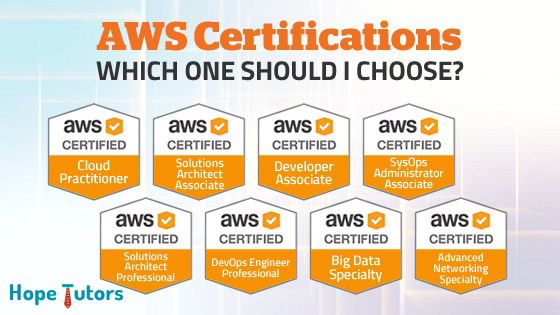

Is an AWS certification necessary to get an AWS job?

The short answer is that an AWS certification alone will not get you a job. Several other attributes play an essential part in kick-starting an AWS career. Does that mean certifications are not worth attaining? Absolutely not! Certifications are extremely useful, if not essential, for getting a good IT job, and AWS careers can be extremely rewarding.

Should I start with AWS or Azure?

AWS is one of the most effective platforms for building new software applications. Many founders are weighing the tradeoffs between AWS and Azure for startups. Both platforms are growing at staggering rates and are poised to be two of the top-performing cloud providers over time. So which should you choose? Ultimately, we recommend building on AWS.

What is ETL in the cloud?

ETL, which stands for extract, transform and load, is a data integration process that combines data from multiple data sources into a single, consistent data store that is loaded into a data warehouse or other target system.
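To make the three steps concrete, here is a minimal sketch in plain Python. It assumes two made-up sources (a CSV export and a list of signup records) with different column names and country codes, and it uses an in-memory SQLite database as a stand-in for the data warehouse. The point is the shape of the process, not a production pipeline.

```python
import csv
import io
import sqlite3

# Hypothetical source 1: a CSV export with messy whitespace and a non-ISO country code.
CRM_CSV = """customer_id,full_name,country
1,  Ada Lovelace ,uk
2,Grace Hopper,US
"""

# Hypothetical source 2: records from another system with different field names.
WEB_SIGNUPS = [
    {"id": 3, "name": "Alan Turing", "country_code": "GB"},
]


def extract():
    """Extract: read raw rows from each source."""
    crm_rows = list(csv.DictReader(io.StringIO(CRM_CSV)))
    return crm_rows, WEB_SIGNUPS


def transform(crm_rows, web_rows):
    """Transform: map both sources onto one consistent (id, name, country) schema."""
    country_fix = {"UK": "GB"}  # normalize a non-ISO code to its ISO 3166 form
    unified = []
    for row in crm_rows:
        code = row["country"].strip().upper()
        unified.append((int(row["customer_id"]), row["full_name"].strip(), country_fix.get(code, code)))
    for row in web_rows:
        code = row["country_code"].strip().upper()
        unified.append((int(row["id"]), row["name"].strip(), country_fix.get(code, code)))
    return unified


def load(rows, conn):
    """Load: write the unified rows into a single target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(transform(*extract()), conn)
    print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
```

Keeping each step in its own function mirrors how ETL tools separate connectors (extract), transformation logic, and destination writers (load).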

What is the meaning of ETL?

ETL, which stands for “extract, transform, load,” refers to the three processes that, in combination, move data from one database, multiple databases, or other sources to a unified repository, typically a data warehouse.

Is S3 an ETL tool?

Not by itself: Amazon S3 is an object storage service that often serves as a source or destination for ETL. ETL (Extract, Transform, Load) tools help you collect your data from APIs and file transfers (the Extract step), convert it into standardized, analytics-ready tables (the Transform step), and put it all into a single data repository (the Load step) to centralize your analytics efforts.

What is ETL tool used for?

Extract, Transform and Load (ETL) is the process used to turn raw data into information that can be used for actionable business intelligence (BI). An ETL tool automates this process by providing three essential functions: extraction of data from underlying data sources, transformation of that data, and loading of the result into a target system.

Does AWS offer an ETL service?

AWS Data Pipeline is a managed ETL (Extract-Transform-Load) service that lets you define data movement and transformations across various AWS services, as well as on-premises resources.

Is ETL the same as SQL?

SQL is a language for managing and querying data stored in relational databases. ETL is a method for extracting data from different sources, transforming it, and loading it into other systems. ... You can use SQL (just writing SQL queries) for ETL purposes without using an ETL tool.
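As a small illustration of ETL written purely in SQL, the sketch below reads from a raw source table, cleans and casts the values, filters out unwanted rows, and writes the result into a typed target table, all in one INSERT ... SELECT statement. The table names and data are hypothetical, and Python's built-in sqlite3 module is used only to run the SQL.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

conn.executescript("""
-- Hypothetical source table holding raw, inconsistently formatted orders.
CREATE TABLE raw_orders (order_id TEXT, amount TEXT, status TEXT);
INSERT INTO raw_orders VALUES
    ('1', '19.99', ' shipped '),
    ('2', '5', 'CANCELLED'),
    ('3', '42.50', 'Shipped');

-- Target table with proper column types.
CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL, status TEXT);

-- Extract, transform, and load in a single statement:
-- cast text to numbers, normalize status text, and drop cancelled orders.
INSERT INTO orders (order_id, amount, status)
SELECT CAST(order_id AS INTEGER), CAST(amount AS REAL), LOWER(TRIM(status))
FROM raw_orders
WHERE LOWER(TRIM(status)) <> 'cancelled';
""")

print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

The same pattern works in most relational databases; a dedicated ETL tool becomes more useful once sources live outside the database or the pipeline needs scheduling and monitoring.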

Is Lambda an ETL tool?

AWS Lambda is a platform where you can write code to perform ETL, but the Lambda runtime doesn't include many packages and libraries that are used on a daily basis (such as pandas and Requests), and the standard pip install pandas won't work inside AWS Lambda.
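One way to sidestep the missing-library problem for simple transforms is to stick to the Python standard library. The sketch below aggregates revenue per region from CSV text using only csv and collections instead of pandas. It assumes, hypothetically, that the CSV arrives in a "csv_text" field of the event and has region, quantity, and unit_price columns; a real pipeline would more likely read the file from S3.

```python
import csv
import io
import json
from collections import defaultdict


def summarize_sales(csv_text):
    """Aggregate revenue (quantity * unit_price) per region without pandas."""
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["region"]] += float(row["quantity"]) * float(row["unit_price"])
    # Round for presentation and return regions in a stable, sorted order.
    return {region: round(total, 2) for region, total in sorted(totals.items())}


def lambda_handler(event, context):
    """Lambda-style entry point.

    Assumption: the raw CSV is passed in a hypothetical "csv_text" event field.
    """
    summary = summarize_sales(event["csv_text"])
    return {"statusCode": 200, "body": json.dumps(summary)}
```

For example, calling lambda_handler with a three-row CSV (two "eu" rows and one "us" row) returns a JSON body with one total per region. Heavier transformations that genuinely need pandas usually call for packaging the library with the function or choosing a different service.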

Is SQL an ETL tool?

SSIS (SQL Server Integration Services) is part of Microsoft SQL Server and is used for many data migration tasks. It is essentially an ETL tool that belongs to Microsoft's Business Intelligence suite and is used mainly for data integration. The platform is designed to solve problems related to data integration and workflow applications.

How is ETL done in AWS?

ETL is a three-step process: extract data from databases or other data sources, transform the data in various ways, and load that data into a destination. In the AWS environment, data sources include S3, Aurora, Relational Database Service (RDS), DynamoDB, and EC2.

What are examples of ETL tools?

Enterprise software ETL tools include:

- Informatica PowerCenter. This product is arguably the most mature ETL product on the market.
- IBM InfoSphere DataStage
- Oracle Data Integrator (ODI)
- Microsoft SQL Server Integration Services (SSIS)
- Ab Initio
- SAP Data Services
- SAS Data Manager

Which ETL tool is used most?

The 15 best ETL tools in 2022 include:

- Informatica PowerCenter: cloud data management solution
- Microsoft SQL Server Integration Services: enterprise ETL platform
- Talend Data Fabric: enterprise data integration with an open-source ETL tool
- Integrate.io (XPlenty): ETL tool for e-commerce
- Stitch: modern, managed ETL service

What are all the ETL tools?

8 more top ETL tools to consider:

1. Striim. Striim offers a real-time data integration platform for big data workloads.
2. Matillion. Matillion is a cloud ETL platform that can integrate data with Redshift, Snowflake, BigQuery, and Azure Synapse.
3. Pentaho
4. AWS Glue
5. Panoply
6. Alooma
7. Hevo Data
8. FlyData

What does ETL mean in a job context?

Any system dealing with data processing requires moving information from storage and transforming it in the process to be used by people or machines. This process is known as Extract, Transform, Load, or ETL. And usually, it is carried out by a specific type of engineer — an ETL developer.

What is an example of data an ETL tool handles?

As the data is loaded into the data warehouse or data lake, the ETL tool sets the stage for long-term analysis and usage of such data. Examples include banking data, insurance claims, and retail sales history.

What is ETL and SQL?

The SQL Server ETL (Extraction, Transformation, and Loading) process is especially useful when there is no consistency in the data coming from the source systems. When faced with this predicament, you will want to standardize (validate/transform) all the data coming in first before loading it into a data warehouse.
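The "standardize first, then load" idea can be sketched in a few lines. The example below is a hypothetical validation pass: it normalizes dates that arrive in several formats, trims and lowercases emails, casts IDs to integers, and routes rows that fail any check into a reject list instead of the warehouse. The field names and accepted date formats are assumptions chosen for illustration.

```python
from datetime import datetime

# Hypothetical date formats seen across source systems (day/month order assumed).
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def parse_date(value):
    """Return an ISO 8601 date string, trying each known source format in turn."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def standardize(rows):
    """Split incoming rows into clean rows (ready to load) and rejected rows with reasons."""
    clean, rejected = [], []
    for row in rows:
        try:
            clean.append({
                "customer_id": int(row["customer_id"]),
                "signup_date": parse_date(row["signup_date"]),
                "email": row["email"].strip().lower(),
            })
        except (KeyError, ValueError) as exc:
            # Keep the original row and the reason so it can be reviewed later.
            rejected.append((row, str(exc)))
    return clean, rejected
```

Only the clean list would be loaded into the warehouse; keeping rejects with their error messages makes data-quality problems visible rather than silently dropping them.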

What is an ETL job in a data warehouse?

ETL is a process in Data Warehousing and it stands for Extract, Transform and Load. It is a process in which an ETL tool extracts the data from various data source systems, transforms it in the staging area, and then finally, loads it into the Data Warehouse system.

What is the best ETL tool for AWS?

One AWS ETL option is BryteFlow. With the BryteFlow AWS ETL tool, Change Data Capture and data transformation are linked and integration is seamless, so data is transformed as it is ingested.

What is ETL?

ETL stands for Extract, Transform, and Load. Extract gets the data from databases or other sources, Transform modifies the data to make it suitable for consumption, and Load loads the data into the destination (in this case, on AWS). This allows data from disparate sources to be made available in a data lake or data warehouse in the same format, so that it can easily be used for reporting and analytics. AWS Glue is one of the AWS ETL tools.

What is AWS Glue?

Script auto-generation – AWS Glue can auto-generate an ETL script, and you can also write your own scripts in Python (PySpark) or Scala. Glue takes as input the location where your data is stored and, from there, generates ETL scripts in Scala or Python for Apache Spark.
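Glue-generated PySpark scripts commonly express the core transform as an ApplyMapping over (source column, source type, target column, target type) tuples. The sketch below imitates that mapping format in plain Python so it runs without the awsglue library. It illustrates the idea only and is not Glue's implementation; the type-name subset and the choice to set missing fields to None are assumptions of this sketch.

```python
# Casts from Glue-style type names to Python callables (hypothetical subset).
CASTS = {"string": str, "long": int, "int": int, "double": float}


def apply_mapping(records, mappings):
    """Rename and cast fields per (source, source_type, target, target_type) tuples.

    Fields not listed in `mappings` are dropped; in this sketch, a missing or
    None source value becomes None in the output.
    """
    out = []
    for record in records:
        new = {}
        for source, _source_type, target, target_type in mappings:
            value = record.get(source)
            new[target] = None if value is None else CASTS[target_type](value)
        out.append(new)
    return out
```

For example, mapping ("id", "string", "customer_id", "long") and ("amt", "string", "amount", "double") over a record {"id": "1", "amt": "2.5", "extra": "x"} yields {"customer_id": 1, "amount": 2.5}, with the unmapped "extra" field removed.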

How long does it take for AWS Glue to start?

Delay in starting jobs – AWS Glue ETL jobs have had a cold start time of approximately 15 minutes per job, particularly on older Glue versions (AWS Glue 2.0 introduced much faster job start times). Start time generally depends on the amount of resources you request and their availability on the AWS side. This can significantly delay the pipeline and the delivery of data.

What is Glue in AWS?

AWS Glue is an Extract, Transform, Load (ETL) service from AWS that helps customers prepare and load data for analytics. It is a fully managed AWS ETL tool, and you can create and run an AWS ETL job with a few clicks in the AWS Management Console. You point AWS Glue at data stored on AWS, and Glue finds your data and stores the related metadata (table definition and schema) in the AWS Glue Data Catalog. Once cataloged in the Glue Data Catalog, your data is immediately searchable, queryable, and available for ETL in AWS.
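To see what "table definition and schema" means in practice, a catalog entry can be read back with boto3's Glue client. In this sketch, fetch_schema calls get_table, and columns_from_table pulls the column names and types out of the response (regular columns live under StorageDescriptor, partition keys under PartitionKeys). The database and table names are placeholders; running fetch_schema requires boto3 and AWS credentials, while the parsing helper works on any response-shaped dictionary.

```python
def columns_from_table(table_response):
    """Return (name, type) pairs from a Glue GetTable response dictionary."""
    table = table_response["Table"]
    columns = [(c["Name"], c["Type"]) for c in table["StorageDescriptor"].get("Columns", [])]
    # Partition keys are stored separately from the regular columns.
    columns += [(c["Name"], c["Type"]) for c in table.get("PartitionKeys", [])]
    return columns


def fetch_schema(database, table):
    """Look up a table definition in the Glue Data Catalog (needs boto3 and credentials)."""
    import boto3  # imported lazily so columns_from_table works without boto3 installed

    glue = boto3.client("glue")
    return columns_from_table(glue.get_table(DatabaseName=database, Name=table))
```

Usage might look like fetch_schema("my_database", "my_table"), where both names are hypothetical placeholders for entries in your own catalog.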

Why is Kafka stream used in AWS Glue?

This not only introduces another integration and another point of failure, but also slows down processing, because a row-by-row approach needs to be undertaken and then integrated with AWS Glue. For destinations like Snowflake, Redshift, and S3, this might be a severe bottleneck.

Does AWS Glue give control over individual table jobs?

Lack of control for tables – AWS Glue does not give any control over individual table jobs. ETL is applicable to the complete database.

ETL Processing Using AWS Data Pipeline and Amazon Elastic MapReduce

This blog post shows you how to build an ETL workflow that uses AWS Data Pipeline to schedule an Amazon Elastic MapReduce (Amazon EMR) cluster to clean and process web server logs stored in an Amazon Simple Storage Service (Amazon S3) bucket. AWS Data Pipeline is an ETL service that you can use to automate the movement and transformation of data.

Step 1: Determine Whether Log Files are Available in an Amazon S3 Bucket

The first step is to check whether files are available in the s3://emr-worksop/data/input/{YYYY-MM-DD} location. This location is passed as a variable, myInputData. A variable can be referenced elsewhere in the pipeline definition as #{variable_name}.
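Inside AWS Data Pipeline this kind of check is usually expressed as a precondition (for example, S3PrefixNotEmpty). For testing the same logic outside the pipeline, the sketch below builds the dated prefix and asks S3 for at least one object under it using boto3's list_objects_v2. The prefix layout follows the path in the text; the trailing slash and the use of a date object are assumptions, and input_available needs boto3 and AWS credentials.

```python
from datetime import date


def input_prefix(day):
    """Build the dated key prefix, e.g. data/input/2024-01-31/ (layout assumed from the text)."""
    return f"data/input/{day.isoformat()}/"


def has_objects(list_response):
    """True if a ListObjectsV2 response contains at least one key."""
    return list_response.get("KeyCount", 0) > 0


def input_available(bucket, day):
    """Check S3 for at least one object under the day's prefix (needs boto3 and credentials)."""
    import boto3  # imported lazily so the pure helpers work without boto3

    s3 = boto3.client("s3")
    # MaxKeys=1 keeps the request cheap: we only need to know whether anything exists.
    response = s3.list_objects_v2(Bucket=bucket, Prefix=input_prefix(day), MaxKeys=1)
    return has_objects(response)
```

A call such as input_available("emr-worksop", date(2024, 1, 31)) would return True only if that day's log files have landed.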

Step 2: Creating an Amazon EMR Cluster with EMRFS

The AWS Data Pipeline definition launches a three-node Amazon EMR cluster with EMRFS: one master node and two core nodes. The terminateAfter field terminates the cluster after one hour.
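The cluster described above can be sketched as an EmrCluster object in a Data Pipeline definition. The snippet builds it as a Python dictionary and prints the JSON. The object id, instance types, release label, and applications list are hypothetical placeholders, not values from the original pipeline; check the AWS Data Pipeline object reference for the fields your setup needs.

```python
import json

# Sketch of the EmrCluster object in a Data Pipeline definition.
# Instance types, release label, and id are hypothetical placeholders.
emr_cluster = {
    "id": "EmrClusterForLogs",
    "name": "EmrClusterForLogs",
    "type": "EmrCluster",
    "releaseLabel": "emr-5.36.0",
    "applications": ["pig", "hive"],  # both jobs in this workflow run on this cluster
    "masterInstanceType": "m5.xlarge",
    "coreInstanceType": "m5.xlarge",
    "coreInstanceCount": "2",        # 1 master + 2 core = three nodes
    "terminateAfter": "1 Hour",      # shut the cluster down after one hour
}

print(json.dumps({"objects": [emr_cluster]}, indent=2))
```

Values are written as strings because that is how pipeline definition files usually represent scalar fields.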

Step 3: Run emrfs sync to Update Metadata with the Contents of the Amazon S3 Bucket

For this, we use the preStepCommand feature of AWS Data Pipeline, which runs a command before the step starts. Amazon EMR’s documentation provides more information about emrfs sync.

Step 4: Launch a Pig Job on the Amazon EMR Cluster

The Pig job is submitted to the Amazon EMR cluster as a step. Its input is the set of log files in the Amazon S3 location referenced by the variable myInputData. After the log files are processed, the output is written to another Amazon S3 location referenced by the variable myTransformedData.
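Steps 3 and 4 can both live on one EmrActivity: preStepCommand refreshes EMRFS metadata, and step submits the Pig script. The sketch below assumes the cluster object has the hypothetical id EmrClusterForLogs and that the Pig script sits at a hypothetical S3 path. The step argument layout (command-runner.jar with pig-script --run-pig-script) follows EMR's command-runner conventions as an assumption; verify it against your EMR release.

```python
# Hypothetical location of the Pig script that cleans the logs.
PIG_SCRIPT = "s3://emr-worksop/scripts/clean_logs.pig"

pig_activity = {
    "id": "PigCleanLogs",
    "type": "EmrActivity",
    "runsOn": {"ref": "EmrClusterForLogs"},  # hypothetical id of the EmrCluster object
    # Step 3: sync EMRFS metadata with the bucket contents before the step runs.
    "preStepCommand": "emrfs sync #{myInputData}",
    # Step 4: Data Pipeline expects the step as comma-separated arguments.
    # INPUT and OUTPUT are passed to the Pig script as -p parameters.
    "step": ",".join([
        "command-runner.jar",
        "pig-script", "--run-pig-script", "--args",
        "-f", PIG_SCRIPT,
        "-p", "INPUT=#{myInputData}",
        "-p", "OUTPUT=#{myTransformedData}",
    ]),
}
```

Inside the Pig script, the values would then be available as $INPUT and $OUTPUT.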

Step 5: Reuse the Amazon EMR Cluster to Launch a Hive job for Generating Reports

After the Pig job has cleaned the data, we kick off the Hive job on the same Amazon EMR cluster to generate reports.
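Reusing the cluster comes down to pointing a second EmrActivity at the same cluster and making it wait for the Pig activity. This sketch assumes the hypothetical ids EmrClusterForLogs (cluster) and PigCleanLogs (Pig activity) and a hypothetical Hive script path. The hive-script argument layout and Hive's -d variable flag are used as assumptions to verify against your EMR release.

```python
# Hypothetical location of the Hive reporting script.
HIVE_SCRIPT = "s3://emr-worksop/scripts/reports.q"

hive_activity = {
    "id": "HiveReports",
    "type": "EmrActivity",
    "runsOn": {"ref": "EmrClusterForLogs"},  # same cluster as the Pig step, no new cluster
    "dependsOn": {"ref": "PigCleanLogs"},    # start only after the Pig activity finishes
    "step": ",".join([
        "command-runner.jar",
        "hive-script", "--run-hive-script", "--args",
        "-f", HIVE_SCRIPT,
        "-d", "INPUT=#{myTransformedData}",  # cleaned data produced by the Pig job
    ]),
}
```

Because both activities run on one cluster, the pipeline pays for a single cluster launch, and the terminateAfter limit covers both jobs.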

Step 6: Clean EMRFS Metadata Older than Two Days (emrfs delete)

We do this post-processing after both jobs have completed on the cluster. Amazon EMR documentation provides more information about emrfs delete. For this, we use the postStepCommand feature of AWS Data Pipeline, which runs the command after the step completes successfully.
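A small helper can attach the cleanup command to whichever activity runs last. The sketch returns a copy of an EmrActivity dictionary with a postStepCommand that deletes EMRFS metadata older than a given number of days. It assumes emrfs delete accepts --time and --time-unit options for age-based deletion; confirm this against the EMRFS CLI reference for your EMR release. The S3 path is a hypothetical placeholder.

```python
def with_metadata_cleanup(activity, s3_path, days=2):
    """Return a copy of an EmrActivity dict with an EMRFS cleanup postStepCommand.

    Assumption: `emrfs delete --time N --time-unit days <path>` removes metadata
    entries older than N days for that path.
    """
    updated = dict(activity)  # leave the caller's dictionary unchanged
    updated["postStepCommand"] = f"emrfs delete --time {days} --time-unit days {s3_path}"
    return updated
```

For example, with_metadata_cleanup(hive_step, "s3://emr-worksop/data/") would add the cleanup to a hypothetical hive_step dictionary, so it runs only after the final reporting step succeeds.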

A Few Points to Consider about AWS Glue ETL

1. It is a serverless service, so there is no need to provision and manage infrastructure and resources.

Furthermore, it helps you understand your existing data assets. Many AWS services are available for storing data, and the AWS Glue Data Catalog maintains a unified view of that data. It lets you quickly find the datasets you have and manage their metadata in a central repository.

Should you stick with AWS Glue for ETL?

AWS Glue is a managed ETL service that you control from the AWS Management Console. Glue may be a good choice if you’re moving data from an Amazon data source to an Amazon data warehouse.

How to choose an ETL tool?

How do you pick the most suitable ETL tool for your business? Start by asking questions specific to your requirements:

1. Does the ETL tool integrate with every data source you use today?
2. Will it integrate with new data sources, even those that are less popular or custom-built? To be prepared to take advantage of new data sources, consider an extensible solution like Stitch, whose Singer open source framework lets you integrate new data sources.
3. What kind of reliability do you need? It’s tough to beat the fault tolerance of a cloud-native service.
4. What are your requirements for performance and scalability?
5. Does it meet your security requirements? Do you need to meet HIPAA, PCI, or GDPR compliance requirements?
6. Pricing models vary among ETL services. Does the solution charge by the hour, by number of data sources, or by a combination of factors? Make sure the solution is priced in a way that makes sense for your business’s usage.
7. What replication scheduling options are available, and how is replication handled? What happens if a replication job is still running when the next one is supposed to start? Can you specify a start time to establish a predictable schedule?
8. What types of support are available? Is quality documentation available online? Is in-app support available? Is there an online community to share knowledge with?

What is ETL in data warehouse?

ETL is part of the process of replicating data from one system to another — a process with many steps. For instance, you first have to identify all of your data sources. Then, unless you plan to replicate all of your data on a regular basis, you need to identify when source data has changed. You also need to select a data warehouse destination ...

Does Stitch work with Amazon Redshift?

Stitch supports Amazon Redshift and S3 destinations and more than 90 data sources, and provides businesses with the power to replicate data easily and cost-effectively while maintaining SOC 2, HIPAA, and GDPR compliance. As a cloud-first, extensible platform, Stitch lets you reliably scale your ecosystem and integrate with new data sources, all while providing the support and resources to answer any of your questions.

Which is better, AWS Glue or a third-party ETL tool?

If you need to include other sources in your ETL plan, a third-party ETL tool is a better choice.

Where does Stitch stream data?

Stitch streams all of your data directly to your analytics warehouse.

Faster data integration

Different groups across your organization can use AWS Glue to work together on data integration tasks, including extraction, cleaning, normalization, combining, loading, and running scalable ETL workflows. This way, you reduce the time it takes to analyze your data and put it to use from months to minutes.

Automate your data integration at scale

AWS Glue automates much of the effort required for data integration. AWS Glue crawls your data sources, identifies data formats, and suggests schemas to store your data. It automatically generates the code to run your data transformations and loading processes.
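To give a feel for what "suggests schemas" involves, here is a toy schema inference over a CSV sample. It picks the narrowest of bigint, double, or string that fits every non-empty value in each column, using Hive-style type names like those in the Data Catalog. Glue's built-in classifiers are far more capable, and this is not how they are implemented; it only illustrates the idea.

```python
import csv
import io


def infer_type(values):
    """Pick the narrowest type name that fits every non-empty sample value."""
    non_empty = [v for v in values if v != ""]
    if not non_empty:
        return "string"
    for type_name, cast in (("bigint", int), ("double", float)):
        try:
            for v in non_empty:
                cast(v)
            return type_name
        except ValueError:
            continue  # at least one value doesn't fit; try the next, wider type
    return "string"


def infer_schema(csv_text):
    """Return [(column, type)] inferred from a CSV sample with a header row."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, data = rows[0], rows[1:]
    return [(name, infer_type([row[i] for row in data])) for i, name in enumerate(header)]
```

For example, a sample with columns id, price, and city holding 1/2, 2.5/3, and Paris/Oslo would be inferred as bigint, double, and string.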

No servers to manage

AWS Glue runs in a serverless environment. There is no infrastructure to manage, and AWS Glue provisions, configures, and scales the resources required to run your data integration jobs. You pay only for the resources your jobs use while running.
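Paying only for what jobs use means a run's cost is simple arithmetic: DPUs × billed hours × price per DPU-hour. This sketch assumes per-second billing with a one-minute minimum and takes the price as a parameter; actual rates and minimum billing durations vary by Region and Glue version, so check current AWS pricing before relying on the numbers.

```python
def glue_job_cost(dpus, runtime_seconds, price_per_dpu_hour, minimum_seconds=60):
    """Estimate the charge for one Glue job run billed per DPU-hour.

    Assumptions: per-second billing with a minimum billed duration
    (minimum_seconds) and a flat price per DPU-hour.
    """
    billed_seconds = max(runtime_seconds, minimum_seconds)
    return dpus * (billed_seconds / 3600) * price_per_dpu_hour
```

For example, a hypothetical job using 10 DPUs for 30 minutes at $0.44 per DPU-hour works out to 10 × 0.5 × 0.44 = $2.20, while a 30-second run is billed as a full minute under the assumed minimum.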

Build event-driven ETL (extract, transform, and load) pipelines

AWS Glue can run your ETL jobs as new data arrives. For example, you can use an AWS Lambda function to trigger your ETL jobs to run as soon as new data becomes available in Amazon S3. You can also register this new dataset in the AWS Glue Data Catalog as part of your ETL jobs.
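A minimal sketch of the Lambda-to-Glue trigger: the function reads the bucket and key from each record of an S3 event notification and starts a Glue job run for it with boto3's start_job_run. The job name and the --input_path argument are hypothetical; the Glue job would need to read that argument itself (for example with getResolvedOptions). Object keys in S3 event notifications are URL-encoded, hence unquote_plus.

```python
from urllib.parse import unquote_plus

GLUE_JOB_NAME = "process-new-data"  # hypothetical Glue job name


def job_run_requests(event, job_name=GLUE_JOB_NAME):
    """Turn an S3 event notification into start_job_run keyword arguments."""
    requests = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys arrive URL-encoded
        requests.append({
            "JobName": job_name,
            # Glue job arguments are string key/value pairs whose keys start with "--".
            "Arguments": {"--input_path": f"s3://{bucket}/{key}"},
        })
    return requests


def lambda_handler(event, context):
    """Start one Glue job run per new S3 object; boto3 ships with the Lambda Python runtime."""
    import boto3

    glue = boto3.client("glue")
    run_ids = [glue.start_job_run(**req)["JobRunId"] for req in job_run_requests(event)]
    return {"started": run_ids}
```

Separating the event parsing from the API call keeps the parsing testable without AWS access; the handler itself would be wired to the bucket through an S3 event notification.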

Create a unified catalog to find data across multiple data stores

You can use the AWS Glue Data Catalog to quickly discover and search across multiple AWS data sets without moving the data. Once the data is cataloged, it is immediately available for search and query using Amazon Athena, Amazon EMR, and Amazon Redshift Spectrum.
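Querying a cataloged table from Athena needs only the database name, the SQL, and an S3 location for results. The sketch builds the keyword arguments for boto3's Athena start_query_execution and submits them. The database, table, and output location are placeholders, and the table name is inserted into the SQL directly, so it must come from a trusted source. Running run_query requires boto3 and AWS credentials.

```python
def athena_query_request(database, table, output_location, limit=10):
    """Build keyword arguments for Athena's start_query_execution.

    The table name is quoted as an identifier but not otherwise escaped,
    so only pass trusted names.
    """
    return {
        "QueryString": f'SELECT * FROM "{table}" LIMIT {int(limit)}',
        "QueryExecutionContext": {"Database": database},  # a Glue Data Catalog database
        "ResultConfiguration": {"OutputLocation": output_location},  # S3 path for results
    }


def run_query(database, table, output_location):
    """Submit the query (needs boto3 and credentials); returns the query execution id."""
    import boto3

    athena = boto3.client("athena")
    response = athena.start_query_execution(**athena_query_request(database, table, output_location))
    return response["QueryExecutionId"]
```

The query runs asynchronously; the returned execution id is what you would poll for status and use to fetch results.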

Create, run, and monitor ETL jobs without coding

AWS Glue Studio makes it easy to visually create, run, and monitor AWS Glue ETL jobs. You can compose ETL jobs that move and transform data using a drag-and-drop editor, and AWS Glue automatically generates the code. You can then use the AWS Glue Studio job run dashboard to monitor ETL execution and ensure that your jobs are operating as intended.

Explore data with self-service visual data preparation

AWS Glue DataBrew enables you to explore and experiment with data directly from your data lake, data warehouses, and databases, including Amazon S3, Amazon Redshift, AWS Lake Formation, Amazon Aurora, and Amazon RDS.