How to run OpenStack on AWS?

The following steps restore external connectivity for the OpenStack provider network on an AWS instance:

- Install the macchanger tool.

- Note the original MAC address of the provider NIC (eth1).

- Change the MAC address of the provider NIC (eth1).

- Set the MAC address of the router's gateway interface to the original MAC address of eth1.

- Ping 8.8.8.8 from the router namespace to confirm connectivity.
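
As a rough sketch, the steps above can be written out as the commands they correspond to. The Python helper below only builds and prints the commands; it does not run them. The namespace name (qrouter-EXAMPLE), the gateway interface name (qg-EXAMPLE), and both MAC addresses are hypothetical placeholders, and installing macchanger is left out because the package manager depends on your distribution. You may also need to bring the interface down before macchanger can change its address.

```python
import shlex


def mac_swap_commands(nic, original_mac, new_mac, router_ns, gateway_if):
    """Return the commands for the MAC swap, in order, without running them."""
    return [
        # Show the current MAC address of the provider NIC so it can be noted.
        ["macchanger", "-s", nic],
        # Give the provider NIC a different MAC address.
        ["macchanger", "-m", new_mac, nic],
        # Give the router's gateway interface the NIC's original MAC address.
        ["ip", "netns", "exec", router_ns,
         "ip", "link", "set", "dev", gateway_if, "address", original_mac],
        # Test external connectivity from inside the router namespace.
        ["ip", "netns", "exec", router_ns, "ping", "-c", "3", "8.8.8.8"],
    ]


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    commands = mac_swap_commands(
        nic="eth1",
        original_mac="0a:00:00:00:00:01",
        new_mac="0a:00:00:00:00:02",
        router_ns="qrouter-EXAMPLE",
        gateway_if="qg-EXAMPLE",
    )
    for command in commands:
        print(shlex.join(command))
```

Printing the commands first makes it easy to review them before running anything as root on the instance.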

What can you do with HPC?

Where is high-performance computing used?

- Chip design and optimization

- Circuit simulation and verification

- Manufacturing optimization

Which operating system does AWS use?

On Amazon EC2, macOS support is limited to the following AWS Regions:

- US East (N. Virginia) (us-east-1)

- US East (Ohio) (us-east-2)

- US West (Oregon) (us-west-2)

- Europe (Ireland) (eu-west-1)

- Asia Pacific (Singapore) (ap-southeast-1)

Which AWS resources are supported by AWS OpsWorks?

- In-Person Training - Chef offers in-person classes that will meet your needs regardless of your skill level.

- Online Instructor-Led Training - No need to leave your home or office for Chef training.

- Self-Paced Training - Learn new skills whenever you have time.

- Private Training - Chef training delivered when and where you need it.

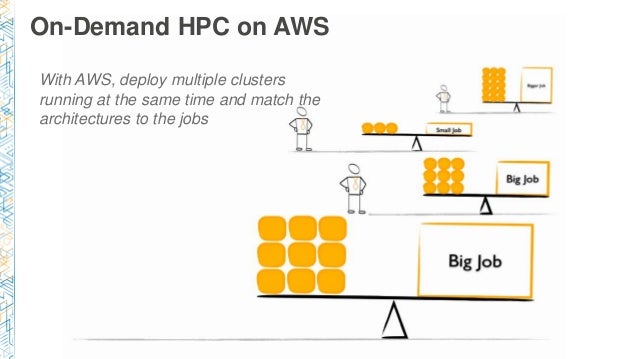

What is HPC in cloud computing?

High Performance Computing (HPC) refers to the practice of aggregating computing power in a way that delivers much higher horsepower than traditional computers and servers. HPC, or supercomputing, is like everyday computing, only more powerful.

What does HPC do?

High performance computing (HPC) is the ability to process data and perform complex calculations at high speeds. To put it into perspective, a laptop or desktop with a 3 GHz processor can perform around 3 billion calculations per second.
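
To make that figure concrete, here is a small back-of-the-envelope calculation. The 3 billion calculations per second comes from the text above; the workload size and the node count are hypothetical, and the cluster estimate assumes perfect scaling across nodes, which real workloads rarely achieve.

```python
def seconds_to_finish(total_calculations, calculations_per_second):
    """Time needed to finish a workload at a fixed calculation rate."""
    return total_calculations / calculations_per_second


DESKTOP_RATE = 3e9    # about 3 billion calculations per second (from the text)
WORKLOAD = 1e15       # hypothetical workload size, in calculations
CLUSTER_NODES = 1000  # hypothetical node count, assuming perfect scaling

if __name__ == "__main__":
    desktop_seconds = seconds_to_finish(WORKLOAD, DESKTOP_RATE)
    cluster_seconds = seconds_to_finish(WORKLOAD, DESKTOP_RATE * CLUSTER_NODES)
    print(f"Single desktop: {desktop_seconds / 86400:.1f} days")
    print(f"{CLUSTER_NODES}-node cluster: {cluster_seconds / 60:.1f} minutes")
```

Under these assumptions the single machine needs a little under four days, while the idealized cluster finishes in a few minutes.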

Why is HPC in the cloud?

Today, HPC is used to solve complex, performance-intensive problems—and organizations are increasingly moving HPC workloads to the cloud. HPC in the cloud is changing the economics of product development and research because it requires fewer prototypes, accelerates testing, and decreases time to market.

What is an HPC framework?

HPC frameworks provide the user with both the capability to properly exploit the computational resources of an ever-evolving HPC hardware ecosystem and the required robustness to harness the complexity of modern distributed systems.

What is the difference between HPC and cloud computing?

High-performance computing refers to networking several computers together in a cluster and aggregating their computational power to perform complex calculations at high speeds. Cloud computing gives organizations the ability to scale their HPC applications.

What are the types of HPC?

We can differentiate two types of HPC systems: homogeneous machines and hybrid ones. Homogeneous machines have only CPUs, while hybrids have both GPUs and CPUs. On hybrid machines, tasks mostly run on GPUs while CPUs oversee the computation. As of June 2020, about two-thirds of supercomputers were hybrid machines.

Does AWS have HPC?

Run your large, complex simulations and deep learning workloads in the cloud with a complete suite of high performance computing (HPC) products and services on AWS.

Is Google cloud an HPC?

Google Cloud's high performance computing (HPC) solutions are easy to use, built on the latest technology, and cost-optimized to provide a flexible and powerful HPC foundation that clears the way for innovation.

What is high performance cloud?

High-performance cloud computing (HPC2) is a type of cloud computing solution that incorporates standards, procedures and elements from cloud computing. HPC2 defines the techniques for achieving computing operations that match the speed of supercomputing from a cloud computing architecture.

Is Azure a HPC?

With purpose-built HPC infrastructure, solutions and optimised application services, Azure offers competitive price/performance compared to on-premises options with additional high-performance computing benefits.

Why do I need HPC?

HPC is specifically needed for these reasons:

- It paves the way for new innovations in science, technology, business and academia.

- It improves processing speeds, which can be critical for many kinds of computing operations, applications and workloads.

What are the three key components of HPC?

There are three key components of high-performance computing solutions: compute, network, and storage. In order to develop a high performance computing architecture, multiple computer servers are networked together to form a cluster.

What is HPC equipment?

High-performance computing (HPC) is a technology that harnesses the power of supercomputers or computer clusters to solve complex problems requiring massive computation.

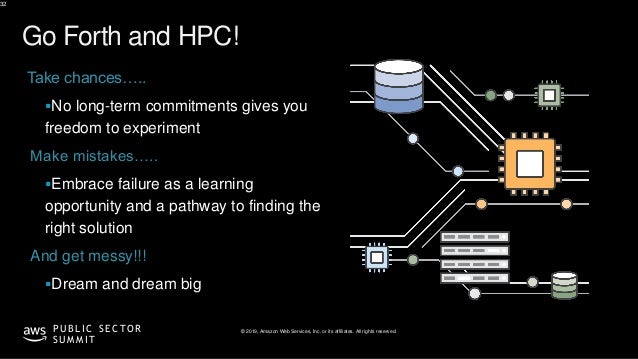

Is high performance computing hard?

An HPC machine is more complex than a simple desktop computer — but don't be intimidated! The basics aren't that much more difficult to grasp, and there are lots of companies (big and small) out there that can provide as much or as little help as you need.

How Does AWS Enable High Performance Computing (HPC)?

High performance computing differs from everyday computing in speed and processing power. An HPC system contains the same elements as a regular desktop computer, but at a scale that enables massive processing power. Today, most HPC workloads use massively distributed clusters of small machines rather than monolithic supercomputers.

6 AWS High Performance Computing Services

Below are the most common AWS services used to support HPC deployments.

8 Best Practices for Running HPC on AWS

Here are some best practices to help you make the most of AWS for HPC:

HPC on AWS with Run:AI

Run:AI automates resource management and orchestration for HPC clusters utilizing GPU hardware—in AWS, other public clouds, and on-premises. With Run:AI, you can automatically run as many compute intensive workloads as needed.

Creation of the default account password

The EnginFrame default administrator account, named ec2-user, requires a password. To improve the security of the solution, the password must be created by the user and saved in AWS Secrets Manager.
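
A minimal sketch of that step, assuming you use Python and the boto3 SDK. The secret name and Region shown in the comment are hypothetical, because the source does not state which name the solution expects, and the generated password may need adjusting to whatever password rules your deployment enforces.

```python
import secrets
import string


def generate_password(length=16):
    """Generate a random alphanumeric password using the standard library."""
    alphabet = string.ascii_letters + string.digits
    return "".join(secrets.choice(alphabet) for _ in range(length))


def store_password(secret_name, password, region_name):
    """Save the password in AWS Secrets Manager and return the secret's ARN."""
    import boto3  # third-party AWS SDK, imported only when this is called

    client = boto3.client("secretsmanager", region_name=region_name)
    response = client.create_secret(Name=secret_name, SecretString=password)
    return response["ARN"]


if __name__ == "__main__":
    password = generate_password()
    print(f"Generated a password of {len(password)} characters")
    # Hypothetical secret name and Region; requires AWS credentials to run:
    # store_password("EXAMPLE-enginframe-password", password, "us-east-1")
```

Generating the password with the secrets module rather than random keeps it suitable for security-sensitive use.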

Install an application

The created environment doesn’t contain any installed applications. To install the applications you need, connect to one of the EnginFrame instances with AWS Systems Manager Session Manager. You can open the connection from the Amazon EC2 console.

Accessing the EnginFrame portal

When you access the URL provided at the end of the previous step, the following webpage is displayed.

Start your first job

The service on the left side of the portal, named Job Submission, can be used to submit your jobs in the cluster.

Clean up and teardown

To avoid additional charges, destroy the created resources by running the cdk destroy VPC AuroraServerless EFS FSX ALB EnginFrame command from the root directory of the enginframe-aurora-serverless repository.
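
If you prefer to script the teardown, the sketch below builds the same cdk destroy command in Python. The stack names come from the command above; the repository path in the comment is a hypothetical placeholder, and the optional --force flag (which skips the confirmation prompt) is an addition that the source does not mention.

```python
import shlex
import subprocess

# Stack names taken from the cdk destroy command in the text.
STACKS = ["VPC", "AuroraServerless", "EFS", "FSX", "ALB", "EnginFrame"]


def destroy_command(stacks, force=False):
    """Build the cdk destroy command for the given stacks."""
    command = ["cdk", "destroy", *stacks]
    if force:
        # Skip the interactive confirmation prompt.
        command.append("--force")
    return command


def run_destroy(repo_root, stacks=STACKS, force=False):
    """Run cdk destroy from the repository's root directory."""
    subprocess.run(destroy_command(stacks, force), cwd=repo_root, check=True)


if __name__ == "__main__":
    print(shlex.join(destroy_command(STACKS)))
    # Hypothetical path to your local clone:
    # run_destroy("/path/to/enginframe-aurora-serverless")
```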

Conclusions

In this post, we show how to deploy a complete, highly available Linux HPC environment spread across two Availability Zones using AWS CDK. The solution uses EnginFrame as the central point of access to the two HPC clusters, one per AZ, built using AWS ParallelCluster.

Background

NICE EnginFrame is an installable service-side application that provides a user-friendly application portal for HPC job submission, control, and monitoring. It includes sophisticated data management for every stage of a job’s lifetime, and integrates with HPC job schedulers and middleware tools to submit, monitor, and manage those jobs.

AWS HPC Connector

AWS HPC Connector begins by letting you register ParallelCluster 3 configuration files in the EnginFrame admin portal. The ParallelCluster configuration file is designed as a simple YAML text file for describing the resources needed for your HPC applications and automating their provisioning in a secure manner.
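
To give a feel for what such a file contains, the sketch below builds a small cluster description in Python and prints it as YAML. The key names follow the ParallelCluster 3 configuration schema as far as I know it; every value (Region, instance types, subnet ID, key pair name, queue name) is a hypothetical placeholder, and the to_yaml helper is an illustrative emitter written for this example, not part of ParallelCluster. Check the result against the official configuration reference before using it.

```python
def to_yaml(node, indent=0):
    """Render nested dicts and lists of simple values as YAML lines."""
    pad = " " * indent
    lines = []
    if isinstance(node, dict):
        for key, value in node.items():
            if isinstance(value, (dict, list)):
                lines.append(f"{pad}{key}:")
                lines.extend(to_yaml(value, indent + 2))
            else:
                lines.append(f"{pad}{key}: {value}")
    elif isinstance(node, list):
        for item in node:
            if isinstance(item, (dict, list)):
                child = to_yaml(item, indent + 2)
                # Put the list marker in front of the item's first line.
                lines.append(f"{pad}- {child[0].lstrip()}")
                lines.extend(child[1:])
            else:
                lines.append(f"{pad}- {item}")
    return lines


# Hypothetical values throughout; replace them with your own resources.
cluster_config = {
    "Region": "us-east-1",
    "Image": {"Os": "alinux2"},
    "HeadNode": {
        "InstanceType": "t3.micro",
        "Networking": {"SubnetId": "subnet-EXAMPLE"},
        "Ssh": {"KeyName": "example-key"},
    },
    "Scheduling": {
        "Scheduler": "slurm",
        "SlurmQueues": [
            {
                "Name": "queue1",
                "ComputeResources": [
                    {
                        "Name": "c5large",
                        "InstanceType": "c5.large",
                        "MinCount": 0,
                        "MaxCount": 10,
                    }
                ],
                "Networking": {"SubnetIds": ["subnet-EXAMPLE"]},
            }
        ],
    },
}

if __name__ == "__main__":
    print("\n".join(to_yaml(cluster_config)))
```

The structure shows the two main parts of a cluster description: a head node and one or more scheduler queues whose compute resources scale between a minimum and maximum instance count.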

Using AWS HPC Connector

Let’s take a quick look at AWS HPC Connector in action. For this demo, we’re using a preconfigured EnginFrame installation.

When to use AWS HPC Connector

Now that you have a sense for how to use EnginFrame AWS HPC Connector, let’s tackle why and when to use it:

There is a lot more to cover

As we mentioned, EnginFrame is a product for managing and making available complex HPC systems. It should come as no surprise, then, that we’ve only scratched the surface of what EnginFrame can do in this short blog post.

Conclusion

In this post, we introduced NICE EnginFrame AWS HPC Connector, a new EnginFrame plugin that allows for creation and management of remote HPC clusters on AWS. We gave some background on EnginFrame and a high-level overview of how AWS HPC Connector works from the perspective of the administrator.

AWS Research Initiative

AWS helps researchers process complex workloads by providing the cost-effective, scalable and secure compute, storage, analytics, and artificial intelligence/machine learning capabilities needed to accelerate time-to-science.

Peering with Global Research Networks

By peering with Global Research Networks, AWS gives researchers robust network connections to the AWS cloud. These network connections allow for reliable movement of data between your home institution, distributed data collection sites, and AWS.

AWS Cloud Credits for Research Program

The AWS Cloud Credits for Research Program supports researchers who seek to build cloud-hosted publicly available science-as-a-service applications and tools, perform proof of concept tests in the cloud, or train communities on the usage of the cloud for research workloads.

AWS Global Data Egress Waiver Program

AWS makes cloud budgeting more predictable by waiving data egress fees in the AWS Cloud for qualified researchers and academic customers. These are fees associated with “data transfer out from AWS to the Internet.” Contact your AWS representative to learn more about this program.

Elastic Fabric Adapter (EFA)

EFA brings the scalability, flexibility, and elasticity of cloud to tightly-coupled HPC applications. With EFA, tightly-coupled HPC applications have access to lower and more consistent latency and higher throughput than traditional TCP channels, enabling them to scale better.

NICE DCV

NICE DCV is a graphics-optimized streaming protocol that is well suited for a wide range of usage scenarios ranging from streaming productivity applications on mobile devices to HPC simulation visualization. On the server side, NICE DCV supports Windows and Linux.

AWS ParallelCluster

You should use AWS ParallelCluster if you want to run High Performance Computing (HPC) workloads on AWS. You can use AWS ParallelCluster to rapidly build test environments for HPC applications as well as use it as the starting point for building HPC infrastructure in the Cloud.

NICE EnginFrame

You should use EnginFrame because it can increase the productivity of domain specialists (such as scientists, engineers, and analysts) by letting them easily extend their workflows to the cloud and reduce their time-to-results.

File System Structure

The solution contains the following shared file systems:

- /efs is the file system shared between the two clusters and can be used to install your applications.

- /fsx_cluster1 and /fsx_cluster2 are the high-performance file systems of the related clusters and can be used to hold your scratch, input, and output files.

Accessing The EnginFrame Portal

When you access the URL provided at the end of the previous step, the following webpage is displayed. The Applications section is used to start your batch jobs. The User required for access is named ec2-user. The Password of this user is the one saved in AWS Secrets Manager.

Start Your First Job

The service on the left side of the portal, named Job Submission, can be used to submit your jobs to the cluster. The new page contains parameters that you can use to customize your job:

- Job Name: The name of the job

- Project Name: The project associated with the job

- Application Executable: The full path …

Conclusions

In this post, we show how to deploy a complete, highly available Linux HPC environment spread across two Availability Zones using AWS CDK. The solution uses EnginFrame as the central point of access to the two HPC clusters, one per AZ, built using AWS ParallelCluster. Two different Availability Zones are used to maintain full operations if a specific AZ fails, …