What is Spark and why is it used?

Spark is an open source framework focused on interactive queries, machine learning, and real-time workloads. It does not have its own storage system. Instead, it runs analytics on data held in other storage systems such as HDFS, Amazon Redshift, Amazon S3, Couchbase, and Cassandra.

What is the use of Spark in Java?

Spark (the Spark Framework, not Apache Spark) is a lightweight, simple Java micro framework that lets you quickly create web applications in Java 8. It is designed for fast development and was inspired by Sinatra, a popular Ruby micro framework.
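The Sinatra-style idea is to map an HTTP method and path directly to a small handler function. The minimal Python sketch below shows that routing idea as a route table. It is not Spark's API: in Spark Java itself, a route is declared with a static call such as `get("/hello", (req, res) -> "Hello World");`. The `Router` class and its methods are hypothetical names used only for this illustration.

```python
from typing import Callable, Dict, Tuple

# Hypothetical handler signature: takes the request path, returns a response body.
Handler = Callable[[str], str]


class Router:
    """A tiny Sinatra-style route table (illustrative, not Spark's API)."""

    def __init__(self) -> None:
        self._routes: Dict[Tuple[str, str], Handler] = {}

    def get(self, path: str, handler: Handler) -> None:
        # Register a handler for GET requests on an exact path.
        self._routes[("GET", path)] = handler

    def dispatch(self, method: str, path: str) -> Tuple[int, str]:
        # Look up the handler; return 404 when no route matches.
        handler = self._routes.get((method, path))
        if handler is None:
            return 404, "Not Found"
        return 200, handler(path)


router = Router()
router.get("/hello", lambda path: "Hello World")

print(router.dispatch("GET", "/hello"))    # (200, 'Hello World')
print(router.dispatch("GET", "/missing"))  # (404, 'Not Found')
```

The whole "application" is one registration line per route. That minimal style is what the micro framework approach aims for.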

What is Apache Spark in Java?

Apache Spark is a unified analytics engine for large-scale data processing. It provides high-level APIs in Java, Scala, Python and R, and an optimized engine that supports general execution graphs.
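"General execution graphs" means a job is described as a directed acyclic graph (DAG) of steps, and each step runs only after the steps it depends on. The following Python sketch orders a hypothetical set of stages with the standard library's `graphlib` (Python 3.9+). The stage names are made up. This is a conceptual illustration, not how Spark's scheduler is implemented.

```python
from graphlib import TopologicalSorter

# Hypothetical job: each key lists the stages it depends on.
stages = {
    "read_orders": set(),
    "read_customers": set(),
    "filter_orders": {"read_orders"},
    "join": {"filter_orders", "read_customers"},
    "aggregate": {"join"},
}

# static_order() yields every stage only after all of its dependencies.
order = list(TopologicalSorter(stages).static_order())
print(order)
```

Independent stages (here the two reads) have no fixed relative order. An engine is therefore free to run them in parallel.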

Are Apache Spark and Spark the same?

No. Apache Spark belongs to the "Big Data Tools" category of the tech stack, while the Spark Framework is primarily classified under "Microframeworks (Backend)". Apache Spark is an open source tool with 22.9K GitHub stars and 19.7K GitHub forks.

How is Spark used today?

Spark has been called a “general purpose distributed data processing engine” and “a lightning fast unified analytics engine for big data and machine learning”. It lets you process big data sets faster by splitting the work into chunks and assigning those chunks to different computational resources.
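Splitting work into chunks can be sketched locally with Python's `concurrent.futures`. The data is divided into partitions, each partition is handed to a worker thread, and the partial results are merged. This is a single-machine analogy, not Spark code; in Spark the partitions would be spread across executors in a cluster. The `partition` helper and the word-count task are assumptions for this example.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import List


def partition(items: List[str], n: int) -> List[List[str]]:
    """Split items into n roughly equal chunks (hypothetical helper)."""
    return [items[i::n] for i in range(n)]


def count_words(lines: List[str]) -> Counter:
    # The "map" step: each worker counts words in its own chunk.
    counts: Counter = Counter()
    for line in lines:
        counts.update(line.split())
    return counts


lines = ["spark splits work", "work is split", "spark combines results"]

with ThreadPoolExecutor(max_workers=3) as pool:
    partials = list(pool.map(count_words, partition(lines, 3)))

# The "reduce" step: merge the per-chunk counts into one result.
total = sum(partials, Counter())
print(total["spark"], total["work"])  # 2 2
```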

Does Spark need the JDK?

Yes. JDK is short for Java Development Kit, the development environment for Java. Spark is written in Scala, a language that is very similar to Java and also runs on the JVM (Java Virtual Machine), so you need to install the JDK first.

Does Spark need Hadoop?

No. However, if you run Spark on a cluster, you will need some form of shared file system (for example, NFS mounted at the same path on each node). If you have this type of file system, you can simply deploy Spark in standalone mode.

What is Apache Spark best used for?

One of Apache Spark's key use cases is processing streaming data. With so much data being processed every day, it has become essential for companies to be able to stream and analyze it all in real time, and Spark Streaming is able to handle this workload.

Is Apache Spark an ETL tool?

Apache Spark is not a dedicated ETL tool, but it provides a powerful framework for building ETL data pipelines. Data pipelines help organizations make faster data-driven decisions through automation. They are an integral part of an effective ETL process because they aggregate data from multiple sources efficiently and accurately.
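The minimal Python sketch below shows the extract, transform, and load steps that such a pipeline automates. Records are pulled from two sources with different formats, normalized and aggregated, then written to a target. The in-memory sources, the field names, and the dictionary standing in for a warehouse are all hypothetical. With Spark, each step would typically be a DataFrame read, transformation, and write instead.

```python
from collections import defaultdict
from typing import Dict, Iterable, List

# Hypothetical raw records from two sources with slightly different schemas.
web_orders = [{"customer": "Ana", "amount": "10.50"}, {"customer": "bo", "amount": "4"}]
store_orders = [{"name": "ANA", "total": 2.0}]


def extract() -> List[Dict[str, object]]:
    # Extract: map both source schemas onto one common shape.
    rows = [{"customer": r["customer"], "amount": r["amount"]} for r in web_orders]
    rows += [{"customer": r["name"], "amount": r["total"]} for r in store_orders]
    return rows


def transform(rows: Iterable[Dict[str, object]]) -> Dict[str, float]:
    # Transform: normalize names and amounts, then aggregate per customer.
    totals: Dict[str, float] = defaultdict(float)
    for row in rows:
        totals[str(row["customer"]).strip().lower()] += float(row["amount"])
    return dict(totals)


def load(totals: Dict[str, float], target: Dict[str, float]) -> None:
    # Load: write the aggregated result into the target store.
    target.update(totals)


warehouse: Dict[str, float] = {}
load(transform(extract()), warehouse)
print(warehouse)  # {'ana': 12.5, 'bo': 4.0}
```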

Which language is best for Spark?

Scala. Spark is written in Scala, and Scala is not only Spark's programming language but also scales well on the JVM. Scala makes it easy for developers to dig into Spark's source code and to access and use all of the framework's newest features.

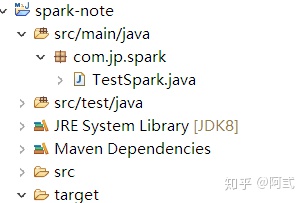

Which IDE is best for Spark?

IntelliJ IDEA. While many Spark developers use SBT or Maven on the command line, the most commonly used IDE is IntelliJ IDEA.

Is Spark a database?

In a sense, yes: Spark can also act as a database. If you create a managed table in Spark, your data becomes available to a wide range of SQL-compliant tools. Spark database tables can be queried with SQL expressions over JDBC/ODBC connectors, so you can use third-party tools such as Tableau, Talend, Power BI, and others.

Is Spark used in real-time?

Spark Streaming supports the processing of real-time data from various input sources and storing the processed data to various output sinks.
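Spark Streaming processes a live stream as a series of small batches (micro-batches). The Python sketch below imitates that model with plain iterators. Events from a source are grouped into batches, each batch is transformed, and the result is appended to a sink. The batch size, the event values, and the list used as a sink are assumptions. Real Spark Streaming groups data by time interval rather than by a fixed count.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def micro_batches(source: Iterable[int], size: int) -> Iterator[List[int]]:
    """Group an event stream into consecutive batches of at most `size` events."""
    it = iter(source)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


sink: List[int] = []  # Hypothetical output sink.

# Source: a stream of sensor readings (made-up values).
for batch in micro_batches([3, 8, 1, 9, 4, 7, 2], size=3):
    # Transform each batch (sum readings above 2), then write to the sink.
    sink.append(sum(x for x in batch if x > 2))

print(sink)  # [11, 20, 0]
```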

How do you make a Spark distribution?

To create a Spark distribution like those offered on the Spark Downloads page, laid out so that it is runnable, use ./dev/make-distribution.sh in the project root directory. Like a direct Maven build, it can be configured with Maven profile settings such as -Phive or -Pyarn.

What is the best build tool for Spark?

Maven is the official build tool recommended for packaging Spark, and it is the build of reference. However, SBT is supported for day-to-day development because it can provide much faster iterative compilation, and more advanced developers may wish to use it.

How do you enable Hive in Spark?

To enable Hive integration for Spark SQL along with its JDBC server and CLI, add the -Phive and -Phive-thriftserver profiles to your existing build options. By default, Spark builds with Hive 2.3.9.
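As a sketch, the Hive profiles are simply appended to the Maven invocation. The Python helper below only assembles the argument list for Spark's bundled build/mvn script and does not run anything. The helper name and its parameters are hypothetical. The trailing `-DskipTests clean package` is a common way to package Spark without running its test suite.

```python
from typing import List, Sequence


def spark_build_args(extra_profiles: Sequence[str] = (), with_hive: bool = True) -> List[str]:
    """Assemble a Maven command line for building Spark (hypothetical helper)."""
    args = ["./build/mvn"]
    profiles = list(extra_profiles)
    if with_hive:
        # Hive integration for Spark SQL plus its JDBC server and CLI.
        profiles += ["hive", "hive-thriftserver"]
    args += [f"-P{p}" for p in profiles]
    args += ["-DskipTests", "clean", "package"]
    return args


print(" ".join(spark_build_args(["yarn"])))
# ./build/mvn -Pyarn -Phive -Phive-thriftserver -DskipTests clean package
```

Returning a list rather than a single string keeps it ready for `subprocess.run(args)` from the Spark source root, without any shell quoting issues.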

Does mvn package include Hadoop?

The assembly directory produced by mvn package will, by default, include all of Spark’s dependencies, including Hadoop and some of its ecosystem projects. On YARN deployments, this causes multiple versions of these to appear on executor classpaths: the version packaged in the Spark assembly and the version on each node, included with yarn.application.classpath. The hadoop-provided profile builds the assembly without Hadoop-ecosystem projects such as ZooKeeper and Hadoop itself.
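A small diagnostic makes the duplicate-version problem concrete. The Python sketch below scans a classpath string and reports artifacts that appear with more than one version. The jar paths and versions are invented. The sketch assumes the common `name-version.jar` naming convention; real classpaths can deviate from it, so treat this as an illustration rather than a reliable checker.

```python
import re
from collections import defaultdict
from typing import Dict, Set

# Assumes jars are named "<artifact>-<version>.jar", e.g. "hadoop-common-3.3.4.jar".
JAR_PATTERN = re.compile(r"([A-Za-z0-9_.-]+?)-(\d[\w.]*)\.jar$")


def conflicting_jars(classpath: str, sep: str = ":") -> Dict[str, Set[str]]:
    """Map each artifact found with several versions to the set of those versions."""
    versions: Dict[str, Set[str]] = defaultdict(set)
    for entry in classpath.split(sep):
        match = JAR_PATTERN.search(entry.rsplit("/", 1)[-1])
        if match:
            versions[match.group(1)].add(match.group(2))
    return {name: v for name, v in versions.items() if len(v) > 1}


# Invented classpath: one Hadoop jar from the assembly, one from the node.
cp = ":".join([
    "/opt/spark/jars/hadoop-common-3.3.4.jar",
    "/opt/spark/jars/zookeeper-3.6.3.jar",
    "/usr/lib/hadoop/hadoop-common-3.2.1.jar",
])
print({k: sorted(v) for k, v in conflicting_jars(cp).items()})
# {'hadoop-common': ['3.2.1', '3.3.4']}
```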

Can you pip install Spark?

If you are building Spark for use in a Python environment and want to install it with pip, you first need to build the Spark JARs as described above. Then you can construct an sdist package that is suitable for setup.py and installable with pip.

Does the Spark build add MAVEN_OPTS?

The test phase of the Spark build automatically adds the recommended Maven memory options to MAVEN_OPTS, even when you are not using build/mvn.

Do you need Hive to run the PySpark tests?

If you are building PySpark and want to run the PySpark tests, you need to build Spark with Hive support.

Can the Maven build produce a Debian package?

The Maven build includes support for building a Debian package containing the assembly ‘fat-jar’, PySpark, and the necessary scripts and configuration files. This package can be created by enabling the Debian packaging profile in the Maven build.

Can Spark read from HDFS?

Because HDFS is not protocol-compatible across versions, if you want to read from HDFS, you’ll need to build Spark against the specific HDFS version in your environment. You can do this through the “hadoop.version” property. If unset, Spark will build against Hadoop 1.0.4 by default.

What is Maven used for?

Maven is a build automation tool used primarily for Java projects. It addresses two aspects of building software: First, it describes how software is built, and second, it describes its dependencies.

Why do I like Spark?

One of the reasons I like Spark is that it allows a minimal style. This is great for creating very fast, very lightweight services. Kinda like the philosophy behind the Unix/Linux tools: small, efficient tools (or, these days, microservices) that do one thing well, and Spark's REST support makes it easy to connect them.

How many libraries does a minimal Spark setup need?

This is not at all complicated. Four libraries (including Spark) and you have a completely reliable set-up.

Can I use Maven with IDEA?

Very nice. While I use IDEA and can use Maven, I prefer not to. I prefer to manage my libraries manually and check them in. Why? Disk is cheap, and in 10 years you can rebuild your client's system *exactly* as it is in production (even if your Maven repository disappears).

Is Spark good for microservices?

Spark is absolutely perfect for building microservices in this way (which is not how everyone works, but after a couple of decades in the game and with some very long-lived software out there, it is how I prefer to roll). Having very fine-grained control of dependencies is a non-issue when there are frameworks like Spark that have minimal dependencies (unlike, say, Spring Boot, which is a bit heavier in comparison).

Is Maven beginner friendly?

I get your concern about Maven, but for most of my projects managing dependencies manually would be a nightmare (especially updating them). Maven is also very beginner friendly, and beginners are the target audience for most of my tutorials. If you have the time, though, would you be willing to write a short tutorial on setting up Spark 'manually', outlining your workflow and your rationale for doing it that way? The format doesn't matter; I can publish it here for you.