What does the Stream Analytics service do?

Stream Analytics can process millions of events every second and deliver results with ultra-low latency. It lets you scale out to match your workload. Stream Analytics achieves higher performance through partitioning, which allows complex queries to be parallelized and executed on multiple streaming nodes.
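To make the partitioning idea concrete, here is a minimal Python sketch, assuming events carry a hypothetical `deviceId` field used as the partition key. It hashes each key to a partition with `zlib.crc32` (chosen only because it is stable across runs), processes the partitions in parallel threads that stand in for streaming nodes, and merges the partial results. This illustrates the general technique, not how Stream Analytics partitions work internally.

```python
import zlib
from collections import Counter, defaultdict
from concurrent.futures import ThreadPoolExecutor


def assign_partition(key: str, partition_count: int) -> int:
    """Map a partition key to a partition using a stable hash."""
    return zlib.crc32(key.encode("utf-8")) % partition_count


def split_by_partition(events, partition_count):
    """Group events so that all events with the same key land in one partition."""
    partitions = defaultdict(list)
    for event in events:
        partitions[assign_partition(event["deviceId"], partition_count)].append(event)
    return partitions


def count_per_device(events):
    """Work done independently on a single partition."""
    return Counter(e["deviceId"] for e in events)


def parallel_count(events, partition_count=4):
    """Process every partition in parallel, then merge the partial results."""
    partitions = split_by_partition(events, partition_count)
    total = Counter()
    with ThreadPoolExecutor(max_workers=partition_count) as pool:
        for partial in pool.map(count_per_device, partitions.values()):
            total.update(partial)
    return total
```

Because a given key always maps to the same partition, each partition can be counted without coordinating with the others, which is what makes the query parallelizable.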

What is Azure Stream Analytics used for?

Azure Stream Analytics is a fully managed, real-time analytics service designed to help you analyze and process fast-moving streams of data. The results can be used to get insights, build reports, or trigger alerts and actions.

How do I create a Stream Analytics job?

Sign in to the Azure portal. Select Create a resource in the upper left-hand corner of the Azure portal. Select Analytics > Stream Analytics job from the results list. Enter a name to identify your Stream Analytics job.

Does Stream Analytics support Python?

No. Azure Stream Analytics doesn't support queries written in a Python script. Queries in Azure Stream Analytics are expressed in a SQL-like query language, whose constructs are documented in the Stream Analytics query language reference guide.

How does Stream Analytics differ from regular analytics?

Streaming analytics complements traditional analytics by adding real-time insight to your decision-making toolbox. In some circumstances, streaming analytics enables better business decisions by focusing on live, streaming data.

Does Microsoft Stream have analytics?

Today in Microsoft Stream, you can see analytics that show you the popularity of a video based on view, comment, and like counts. If you are a global administrator for Office 365, now you can set up a workflow to pull deeper analytics.

What is Oracle Stream Analytics?

Oracle Stream Analytics allows users to process and analyze large scale real-time information by using sophisticated correlation patterns, enrichment, and machine learning. It offers real-time actionable business insight on streaming data and automates action to drive today's agile businesses.

What is an IoT hub?

IoT Hub is a managed Platform-as-a-Service (PaaS) offering, hosted in the cloud, that acts as a central message hub for bidirectional communication between an IoT application and the devices it manages.

What are Azure Data Lake and Stream Analytics tools?

Azure Data Lake is the answer to the complexity of managing and processing large volumes of data. It allows you to run rich analytics with the speed and performance of SQL Server and the flexibility of Azure with all the benefits of a managed database service.

How many streaming analytics patterns does Dr. Perera define?

13 patterns. Dr. Srinath Perera, an expert on complex event processing (CEP) and streaming analytics, has summarised 13 patterns for streaming real-time analytics.

How do I send data to an event hub using Python?

In this section, you create a Python script to send events to the event hub that you created earlier. Open your favorite Python editor, such as Visual Studio Code. Create a script called send.py. This script sends a batch of events to the event hub that you created earlier. Paste the following code into send.py:

What is Azure Event Hubs?

Azure Event Hubs is a big data streaming platform and event ingestion service. It can receive and process millions of events per second. Data sent to an event hub can be transformed and stored by using any real-time analytics provider or batching/storage adapters.

Which of the following are capabilities and benefits of Azure Stream Analytics?

Security: Azure Bastion (private and fully managed RDP and SSH access to your virtual machines); Web Application Firewall (a cloud-native web application firewall (WAF) service that provides powerful protection for web apps); Azure Firewall; ... Azure Firewall Manager.

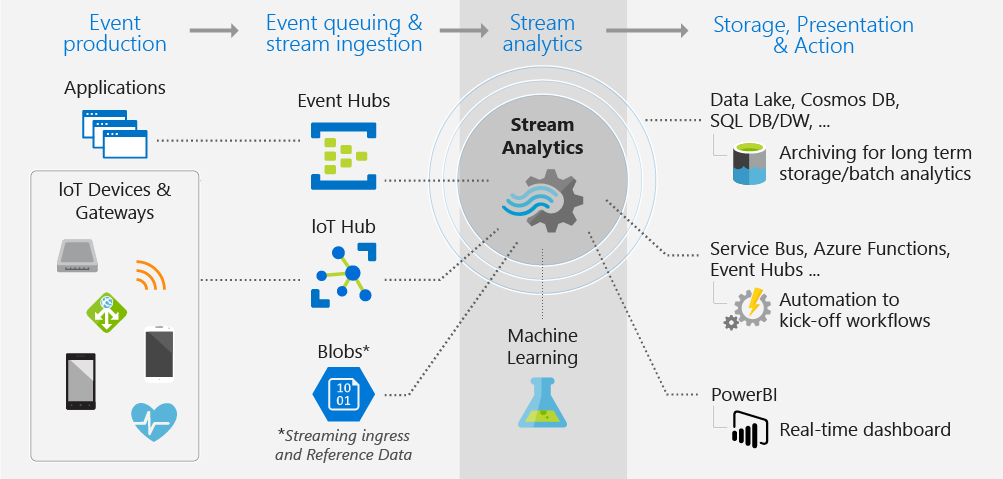

Which inputs can Azure Stream Analytics use to stream data from?

Stream Analytics has first-class integration with Azure data streams as inputs from the following kinds of resources: Azure Event Hubs, Azure IoT Hub, Azure Blob storage, and Azure Data Lake Storage Gen2.

How do I use Azure Data Lake Analytics?

Develop U-SQL programs: use Data Lake Tools for Visual Studio, use a U-SQL database project in Visual Studio, use Job Browser and Job View, debug user-defined C# code, use Azure Data Lake Tools for Visual Studio Code, use custom code with U-SQL in Visual Studio Code, and schedule jobs using SSIS.

What are Azure analysis services?

Azure Analysis Services is a fully managed platform as a service (PaaS) that provides enterprise-grade data models in the cloud. Use advanced mashup and modeling features to combine data from multiple data sources, define metrics, and secure your data in a single, trusted tabular semantic data model.

How do I add IoT Hub as a stream input?

We will set up the inputs and outputs, and then write a query that takes the inputs and sends the values to the configured output. Select Inputs, select Add stream input, and then select IoT Hub.

What is Azure Stream Analytics?

Azure Stream Analytics is an engine that processes the events coming from the devices we have configured. A device can be an Azure IoT Dev Kit (MXChip), a Raspberry Pi, or something else. The Stream Analytics job has two vital parts. The first is the input source.

Can you create a new database?

You can either create a new database or select the one you had already created. I used the existing database and table.

Can you test the functionality in the portal?

You also have the option to test the functionality in the portal itself. All you have to do is prepare sample input data. I have prepared the sample JSON data as follows.

Can you select all fields in a stream?

As you can see, I am selecting only the fields I need and saving them to our stream outputs. You can always select all the fields with a SELECT * query, but then you have to set up the table columns in the same order as the stream data. Otherwise, you may get an error.
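The benefit of an explicit select list (for example `SELECT deviceId, temperature, humidity INTO output FROM input`) is that the output columns come out in a known order no matter how the incoming JSON is ordered. The Python sketch below emulates that projection; the column names in `TABLE_COLUMNS` are hypothetical placeholders for your own table schema, and the function is an illustration, not part of any Azure SDK.

```python
import json

# Hypothetical column order of the destination SQL table; replace with your own schema.
TABLE_COLUMNS = ["deviceId", "temperature", "humidity"]


def project(event: dict, columns=TABLE_COLUMNS) -> tuple:
    """Keep only the named fields, in table-column order, like an explicit SELECT list."""
    missing = [c for c in columns if c not in event]
    if missing:
        raise KeyError(f"event is missing fields: {missing}")
    return tuple(event[c] for c in columns)


# The JSON field order differs from the table order, and it carries an extra field.
raw = '{"humidity": 41.5, "messageId": 7, "temperature": 22.1, "deviceId": "dev0"}'
row = project(json.loads(raw))
```

Here `row` is `("dev0", 22.1, 41.5)`: the extra `messageId` field is dropped and the values line up with the table columns.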

Can you use special characters in an input alias?

You are allowed to use special characters in the Input alias field, but if you do, make sure to enclose the alias in square brackets ([]) in the query, which we will create later.
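If you generate queries from code, a small helper can apply the bracket rule for you. The sketch below is hypothetical: it assumes that an alias made only of letters, digits, and underscores (not starting with a digit) can be used bare and that anything else should be wrapped in `[]`. It does not handle aliases that themselves contain `]`.

```python
import re

# Assumption: identifiers matching this pattern do not need brackets.
_PLAIN_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def quote_alias(alias: str) -> str:
    """Wrap an input/output alias in [] when it contains special characters."""
    if _PLAIN_IDENTIFIER.fullmatch(alias):
        return alias
    return f"[{alias}]"


def build_query(input_alias: str, output_alias: str, columns: list) -> str:
    """Build a simple pass-through query with correctly quoted aliases."""
    return (
        f"SELECT {', '.join(columns)} "
        f"INTO {quote_alias(output_alias)} "
        f"FROM {quote_alias(input_alias)}"
    )
```

For example, `build_query("iot-hub", "sqloutput", ["deviceId", "temperature"])` yields `SELECT deviceId, temperature INTO sqloutput FROM [iot-hub]`.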

Do we always need prerequisites?

To do wonderful things, we always need some prerequisites.

Prepare the input data

Before defining the Stream Analytics job, you should prepare the input data. The real-time sensor data is ingested into IoT Hub, which is later configured as the job input. To prepare the input data required by the job, complete the following steps:

Create blob storage

From the upper left-hand corner of the Azure portal, select Create a resource > Storage > Storage account.

Configure job input

In this section, you will configure an IoT Hub device input to the Stream Analytics job. Use the IoT Hub you created in the previous section of the quickstart.

Clean up resources

When they are no longer needed, delete the resource group, the Stream Analytics job, and all related resources. Deleting the job avoids billing for the streaming units it consumes. If you plan to use the job in the future, you can stop it and restart it later when you need it.

Next steps

In this quickstart, you deployed a simple Stream Analytics job using Azure portal. You can also deploy Stream Analytics jobs using PowerShell, Visual Studio, and Visual Studio Code.

How Does Stream Analytics Work?

Streaming analytics, also known as event stream processing, is the analysis of huge pools of current, “in-motion” data using continuous queries over event streams. These streams are triggered by a specific event that happens as a direct result of an action or set of actions, such as a financial transaction, an equipment failure, a social post, a website click, or some other measurable activity. The data can originate from the Internet of Things (IoT), transactions, cloud applications, web interactions, mobile devices, and machine sensors. By using streaming analytics platforms, organizations can extract business value from data in motion, just as traditional analytics tools let them do with data at rest. Real-time streaming analytics helps a range of industries by spotting opportunities and risks.
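A continuous query differs from a batch query in that it emits results while the stream is still arriving. The Python sketch below shows one common pattern, a tumbling-window count (roughly what `GROUP BY TumblingWindow(second, 10)` expresses in the Stream Analytics query language). It assumes events arrive in timestamp order as hypothetical `(timestamp_seconds, key)` pairs; real engines also deal with late and out-of-order events, which this sketch ignores.

```python
from collections import Counter
from typing import Iterable, Iterator, Tuple


def tumbling_window_counts(
    events: Iterable[Tuple[int, str]], window_seconds: int
) -> Iterator[Tuple[int, dict]]:
    """Continuously count events per key in fixed, non-overlapping windows.

    A window starting at w covers [w, w + window_seconds). Its result is
    yielded as soon as an event from a later window arrives, so output
    flows while the input is still being read. Assumes in-order, non-negative
    integer timestamps.
    """
    current_start = None
    counts = Counter()
    for ts, key in events:
        start = ts - ts % window_seconds
        if current_start is not None and start != current_start:
            yield current_start, dict(counts)  # the previous window is closed
            counts = Counter()
        current_start = start
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the last open window at end of input
```

Feeding it `[(1, "click"), (3, "txn"), (9, "click"), (12, "click"), (25, "txn")]` with a 10-second window produces one result per window: `(0, {"click": 2, "txn": 1})`, then `(10, {"click": 1})`, then `(20, {"txn": 1})`.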

How does streaming data technology help businesses?

Create new opportunities. The existence of streaming data technology brings the type of predictability that cuts costs, solves problems and grows sales. It has led to the invention of new business models, product innovations, and revenue streams.


Why do businesses use streaming data?

Increased competitiveness. Businesses looking to gain a competitive advantage can use streaming data to discern trends and set benchmarks faster. This way they can outpace their competitors who are still using the sluggish process of batch analysis.

What can a company monitor in real time?

Your company can now monitor in real time: manufacturing closed-loop control systems; the health of a network or a system; field assets such as trucks, oil rigs, vending machines; and financial transactions such as authentications and validations.

How does Big Data help companies?

Finding missed opportunities. Streaming and analyzing big data can help companies uncover hidden patterns, correlations, and other insights. Companies can get answers almost immediately, which lets them upsell and cross-sell to clients based on what the information shows.

Why is data visualization important?

Data visualization. Keeping an eye on the most important company information can help organizations manage their key performance indicators (KPIs) on a daily basis. Streaming data can be monitored in real time, allowing companies to know what is occurring at every moment.

Introduction: The monitor page

The Azure portal surfaces key performance metrics that can be used to monitor and troubleshoot your query and job performance. To see these metrics, browse to the Stream Analytics job you are interested in seeing metrics for and view the Monitoring section on the Overview page.

Customizing Monitoring in the Azure portal

You can adjust the type of chart, metrics shown, and time range in the Edit Chart settings. For details, see How to Customize Monitoring.

Latest output

Another useful data point for monitoring your job is the time of the last output, shown on the Overview page. This is the application time (that is, the time taken from the timestamp in the event data) of your job's latest output.

What is Azure Stream Analytics Cluster?

Azure Stream Analytics Cluster offers a single-tenant deployment for complex and demanding streaming scenarios. At full scale, Stream Analytics clusters can process more than 200 MB/second in real time. Stream Analytics jobs running on dedicated clusters can leverage all the features in the Standard offering, and they also support private link connectivity to your inputs and outputs.

Why is stream analytics cluster so reliable?

Because it is dedicated, a Stream Analytics cluster offers more reliable performance guarantees: all the jobs running on your cluster belong only to you.

What is VNet support?

VNet support allows your Stream Analytics jobs to connect to other resources securely using private endpoints.

How many streaming units (SUs) can a Stream Analytics cluster have?

A Streaming Unit is the same across Standard and Dedicated offerings. You can purchase 36, 72, 108, 144, 180 or 216 SUs for each cluster. A Stream Analytics cluster can serve as the streaming platform for your organization and can be shared by different teams working on various use cases.
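Since the cluster sizes listed above are multiples of 36 SUs, sizing a shared cluster comes down to simple arithmetic. The sketch below assumes (as a simplification) that the SU allocations of all jobs placed on the cluster just add up against its capacity, with no extra overhead; the per-job allocations in the example are hypothetical.

```python
# Cluster sizes listed in the text: multiples of 36 SUs, from 36 up to 216.
CLUSTER_SIZES = (36, 72, 108, 144, 180, 216)


def smallest_cluster_for(job_sus):
    """Return the smallest listed cluster size that covers the summed SUs of all jobs.

    Assumes SU allocations are simply additive; real placement rules may differ.
    """
    needed = sum(job_sus)
    for size in CLUSTER_SIZES:
        if size >= needed:
            return size
    raise ValueError(f"{needed} SUs exceeds the largest listed cluster ({CLUSTER_SIZES[-1]} SUs)")
```

For instance, three teams whose jobs need 30, 24, and 6 SUs (60 in total) would fit in a 72-SU cluster, while jobs totaling 220 SUs would not fit in a single cluster of the listed sizes.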

Does Stream Analytics support VNets?

Stream Analytics jobs alone don't support VNets. If your inputs or outputs are secured behind a firewall or an Azure Virtual Network, you have the following two options:

Can you scale a cluster?

Yes. You can easily configure the capacity of your cluster allowing you to scale up or down as needed to meet your changing demand.

Prerequisites

Before you start to configure autoscaling for your job, complete the following steps.

Set up Azure Automation

Add the following variables inside the Azure Automation account. These variables will be used in the runbooks that are described in the next steps.

Autoscale based on a schedule

Azure Automation allows you to configure a schedule to trigger your runbooks.

Autoscale based on load

There might be cases where you cannot predict the input load. In such cases, it is better to scale up or down in steps within a minimum and maximum bound. You can configure alert rules in your Stream Analytics jobs to trigger runbooks when job metrics go above or below a threshold.
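The decision a load-based runbook makes can be reduced to a small, testable function. The Python sketch below is hypothetical: the 80% and 30% thresholds, the utilization metric, and the step of 6 SUs are illustrative assumptions (check which SU values your job actually accepts), and it only computes the target SU count rather than calling any Azure API.

```python
def next_su_count(
    current: int,
    utilization_pct: float,
    *,
    min_su: int,
    max_su: int,
    step: int,
    scale_up_at: float = 80.0,
    scale_down_at: float = 30.0,
) -> int:
    """Return the SU count a step-scaling rule would request next.

    Scales up by `step` when utilization is above `scale_up_at`, down by `step`
    when it is below `scale_down_at`, and always clamps to [min_su, max_su].
    """
    if utilization_pct > scale_up_at:
        target = current + step
    elif utilization_pct < scale_down_at:
        target = current - step
    else:
        target = current
    return max(min_su, min(max_su, target))
```

With `min_su=6, max_su=24, step=6`, a job at 6 SUs and 90% utilization moves to 12 SUs, a job already at 24 SUs stays at 24 however high the load, and a job at 18 SUs and 20% utilization drops to 12. Moving in fixed steps and clamping to bounds keeps a single noisy metric reading from causing an extreme jump in capacity.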

How many cores does Azure Stream Analytics on IoT Edge use?

A job on Azure Stream Analytics on an IoT Edge device can use one CPU core. We don’t limit the volume of data that can be processed by this job.

What is Azure Stream Analytics on IoT Edge?

Azure Stream Analytics on IoT Edge enables you to run your stream processing jobs on devices with Azure IoT Edge. Create your stream processing jobs in Azure Stream Analytics and deploy them to devices running Azure IoT Edge through Azure IoT Hub.

Why are streaming units needed?

The primary factors that impact streaming units needed are query complexity, query latency, and the volume of data processed. Streaming units can be used to scale out a job in order to achieve higher throughput. Depending on the complexity of the query and the throughput required, more streaming units may be necessary to achieve your application's performance requirements.

How long is Azure free?

Get free cloud services and a $200 credit to explore Azure for 30 days.

What is a streaming unit?

A standard streaming unit is a blend of compute, memory, and throughput.

When did streaming jobs migrate?

On February 1, 2017, existing streaming jobs were migrated without any interruption to the standard streaming model. Contact us if you have any questions.

When does billing start in ASA?

Note: Billing starts when an ASA job is deployed to devices, no matter what the job status is (running/failed/stopped).