Why do we need Activation Functions?

- We use an activation function to introduce non-linearity into the network, which lets it handle data that a purely linear model cannot fit.

- A nonlinear function is used to achieve the advantages of a multilayer network. ...

- If we do not apply an activation function, the output is just a linear function of the input, so the network is no more expressive than a simple linear regression model.

What are the different types of activation functions?

- A. Identity Function: Identity function is used as an activation function for the input layer. ...

- B. Threshold/step Function: It is a commonly used activation function. ...

- C. ReLU (Rectified Linear Unit) Function: It is the most popularly used activation function in the areas of convolutional neural networks and deep learning.

- D. ...

- E. ...
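A minimal sketch of the three functions named above (identity, threshold/step, and ReLU). The threshold value of 0 and the convention that the step function returns 1 exactly at the threshold are assumptions made for illustration; the source does not specify them.

```python
def identity(x: float) -> float:
    """Identity activation: passes the input through unchanged."""
    return x


def step(x: float, threshold: float = 0.0) -> float:
    """Threshold/step activation: 1 at or above the threshold, else 0.

    The default threshold of 0 is an assumption for this sketch.
    """
    return 1.0 if x >= threshold else 0.0


def relu(x: float) -> float:
    """ReLU: zero for negative inputs, the input itself otherwise."""
    return max(0.0, x)


# Compare the three functions on a negative, a zero, and a positive input.
for x in (-2.0, 0.0, 3.0):
    print(x, identity(x), step(x), relu(x))
```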

How do we 'train' neural networks?

Perceptron Learning Rule (Rosenblatt’s Rule)

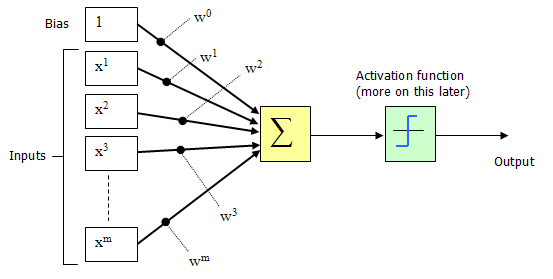

- Steps To Follow. The basic idea is to mimic how a single neuron in the brain works: it either fires or it doesn't. ...

- Prediction Of The Class Label. Case 1: Perceptron predicts the class label correctly. ...

- Convergence In Neural Network. Training is repeated until it converges, so that the cost function is minimized and, ideally, reaches the global minimum.
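The steps above are truncated in the source, so the following is only a sketch of the perceptron learning rule as it is commonly stated: predict with a step function, and when the prediction is wrong, nudge the weights by `lr * (label - prediction) * input`. The function names, the learning rate, the epoch limit, and the AND-gate data are all assumptions chosen for illustration.

```python
def predict(weights, bias, x):
    """Step-activated neuron: 1 if the weighted sum is non-negative, else 0."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total >= 0 else 0


def train_perceptron(samples, labels, lr=1.0, epochs=20):
    """Train with the perceptron rule; stops early once an epoch has no errors."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # 0 when the label is correct
            if error != 0:
                # Misclassified: move the decision boundary toward the sample.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                errors += 1
        if errors == 0:  # every sample classified correctly: converged
            break
    return weights, bias


# Hypothetical, linearly separable toy data: the logical AND gate.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]

weights, bias = train_perceptron(samples, labels)
predictions = [predict(weights, bias, x) for x in samples]
print(predictions)
```

Because the AND gate is linearly separable, the rule settles on a separating line after a handful of passes. On data that is not linearly separable (such as XOR), the same loop would simply run until the epoch limit.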

What is the function of neural networks?

Neural networks are often discussed as black-box type algorithms ... All networks were trained with a mean squared error loss function coupled with (L2) regularization to prevent overfitting (Eq. 2). The Adam optimization algorithm was used for all ...

Why do neural networks use activation function?

Simply put, an activation function is a function added to an artificial neural network to help the network learn complex patterns in the data. By analogy with the biological neurons in our brains, the activation function ultimately decides what is fired to the next neuron.

What is the benefit of activation function?

They allow back-propagation because they have a derivative function which is related to the inputs. They allow “stacking” of multiple layers of neurons to create a deep neural network. Multiple hidden layers of neurons are needed to learn complex data sets with high levels of accuracy.
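To make the role of the derivative concrete, here is a small sketch of the chain rule for a single sigmoid neuron with a squared-error loss. The choice of sigmoid, the loss, and all names are assumptions for illustration; the source does not tie this claim to a specific function.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    """Derivative of the sigmoid with respect to its input."""
    s = sigmoid(z)
    return s * (1.0 - s)


def loss_gradients(w: float, b: float, x: float, y: float):
    """Gradients of (sigmoid(w*x + b) - y)**2 with respect to w and b."""
    z = w * x + b
    a = sigmoid(z)
    dloss_da = 2.0 * (a - y)      # derivative of the squared error
    da_dz = sigmoid_grad(z)       # derivative of the activation
    dloss_dz = dloss_da * da_dz   # chain rule through the activation
    return dloss_dz * x, dloss_dz


grad_w, grad_b = loss_gradients(w=0.5, b=-0.2, x=1.5, y=1.0)
print(grad_w, grad_b)
```

The `da_dz` factor is where the activation's derivative enters: if it were zero everywhere, no gradient would reach `w` or `b`.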

Why activation functions are used in neural networks what will happen if a neural network is built without activation functions?

A neural network without an activation function is essentially just a linear regression model. We therefore apply a non-linear transformation to the inputs of each neuron, and it is the activation function that introduces this non-linearity into the network.

What is the best activation function in neural networks?

ReLU (Rectified Linear Unit) Activation Function: ReLU is currently one of the most widely used activation functions, since it appears in almost all convolutional neural networks and deep learning models.

Why do we need activation functions or why do we need to introduce non linearity in our networks?

The significance of the activation function lies in making a given model learn and execute difficult tasks. Further, a non-linear activation function allows the stacking of multiple layers of neurons to create a deep neural network, which is required to learn complex data sets with high accuracy.

What is activation function in neural network and its types?

An activation function is a very important feature of an artificial neural network: it decides whether a neuron should be activated or not. In artificial neural networks, the activation function defines the output of a node given an input or set of inputs.

What is the purpose of activation function?

The purpose of the activation function is to introduce non-linearity into the output of a neuron. Explanation: We know that a neural network has neurons that work in correspondence ...

Why do we need non linear activation functions?

The activation function applies a non-linear transformation to the input, making the network capable of learning and performing more complex tasks.

What is the final activation function of the last layer?

No matter how many layers we have, if all of them are linear, the output of the last layer is nothing but a linear function of the input to the first layer. Uses: a linear activation function is typically used in just one place, the output layer.

Why Neural Networks Have Activation Functions

Once in an interview, I was asked “Why do neural networks have activation functions?” At that moment I had a strong conviction that neural networks without activation functions were just linear models because I had read it in a book somewhere, but I wasn’t sure why that was true.

Before We Get to Neural Networks

I think it’s a little overwhelming at first to conceptualize how a gigantic neural network with millions of parameters could possibly be a linear model if we don’t add any nonlinear activation functions to it, so before we begin contextualizing our discussion with neural networks, let’s talk about univariate models.

A Toy Neural Network

Suppose we had a simple neural network that had two inputs, a single hidden layer with two nodes (with linear activation functions), and a single output.
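A minimal sketch of such a toy network, assuming arbitrary example weights (`W1`, `b1`, `W2`, `b2` are hypothetical values, not taken from the original article). It shows that the two-layer network with linear activations produces the same output as a single linear function whose weights are computed from the two layers.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


# Hypothetical weights: 2 inputs -> 2 hidden nodes -> 1 output.
W1 = [[2.0, -1.0], [0.5, 3.0]]
b1 = [1.0, -2.0]
W2 = [[1.5, -0.5]]
b2 = [0.25]


def two_layer_linear(x):
    """Forward pass with linear (identity) activations in the hidden layer."""
    hidden = [h + b for h, b in zip(matvec(W1, x), b1)]
    return matvec(W2, hidden)[0] + b2[0]


# Collapse both layers into one: W = W2 @ W1 and b = W2 @ b1 + b2.
W = [sum(W2[0][k] * W1[k][j] for k in range(2)) for j in range(2)]
b = sum(W2[0][k] * b1[k] for k in range(2)) + b2[0]


def single_linear(x):
    """One linear function that reproduces the whole network."""
    return sum(w * xi for w, xi in zip(W, x)) + b


for x in ([1.0, 2.0], [-3.0, 0.5], [0.0, 0.0]):
    print(x, two_layer_linear(x), single_linear(x))
```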

XOR Linear vs XOR Nonlinear

As a final example, one that I feel is the quickest to illustrate the differences between neural networks with all linear activation functions and neural networks with nonlinear activation functions, let’s examine how different neural networks perform on XOR.
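The article's own networks and results are not reproduced here. As a rough sketch of the contrast, the hand-picked weights below (an assumption for illustration, not trained values) give a two-node ReLU network that computes XOR exactly, while any linear model is bound by an identity that XOR violates.

```python
def relu(z: float) -> float:
    return max(0.0, z)


def xor_relu_net(x1: float, x2: float) -> float:
    """Two hidden ReLU nodes with hand-picked (hypothetical) weights."""
    h1 = relu(x1 + x2)
    h2 = relu(x1 + x2 - 1.0)
    return h1 - 2.0 * h2


def linear_model(x1: float, x2: float, w1: float, w2: float, b: float) -> float:
    """A network with only linear activations reduces to this form."""
    return w1 * x1 + w2 * x2 + b


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
targets = [0, 1, 1, 0]

outputs = [xor_relu_net(a, b) for a, b in inputs]
print(outputs)

# For every linear model, f(0,0) + f(1,1) equals f(0,1) + f(1,0).
# XOR would need 0 + 0 on the left and 1 + 1 on the right, so no choice
# of w1, w2, b can match all four targets.
w1, w2, b = 2.0, -3.0, 0.5  # arbitrary example weights
left = linear_model(0, 0, w1, w2, b) + linear_model(1, 1, w1, w2, b)
right = linear_model(0, 1, w1, w2, b) + linear_model(1, 0, w1, w2, b)
print(left, right)
```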

Conclusion

There is no such thing as a “most important” component of a neural network; however, activation functions make a strong argument for being first in line for that title.

Why do neural networks have activation functions?

Activation functions are used to introduce non-linearity in the network. A neural network will almost always have the same activation function in all hidden layers. This activation function should be differentiable so that the parameters of the network can be learned through backpropagation.

What is activation function?

An Activation Function decides whether a neuron should be activated or not. This means that it will decide whether the neuron’s input to the network is important or not in the process of prediction using simpler mathematical operations.

What are the advantages of using Relu as an activation function?

Mathematically it can be represented as f(x) = max(0, x). The advantages of using ReLU as an activation function are as follows: since only a certain number of neurons are activated, the ReLU function is far more computationally efficient than the sigmoid and tanh functions.

What is the function of the activation function in deep learning?

In deep learning, this is also the role of the activation function, which is why it is often referred to as a transfer function in artificial neural networks.

What is the role of segregation in neural networks?

This segregation plays a key role in helping a neural network function properly, ensuring that it learns from the useful information rather than getting stuck analyzing the not-useful part. This is also where activation functions come into the picture.

What are the limitations of binary step function?

Here are some of the limitations of the binary step function: It cannot provide multi-value outputs; for example, it cannot be used for multi-class classification problems. The gradient of the step function is zero everywhere except at the threshold, where it is undefined, which hinders the backpropagation process.
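The gradient limitation can be seen with a quick finite-difference check. This is only a sketch: the sample points, the step size `eps`, and the comparison against a sigmoid are assumptions chosen for illustration.

```python
import math


def step(x: float) -> float:
    """Binary step with an assumed threshold of 0."""
    return 1.0 if x >= 0 else 0.0


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def numerical_grad(f, x: float, eps: float = 1e-4) -> float:
    """Central finite-difference estimate of the derivative of f at x."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


# Points on either side of the threshold, away from the jump itself.
points = [-2.0, -0.5, 0.5, 2.0]

step_grads = [numerical_grad(step, x) for x in points]
sigmoid_grads = [numerical_grad(sigmoid, x) for x in points]

print(step_grads)     # flat on both sides of the threshold
print(sigmoid_grads)  # small but non-zero, so weights can still be updated
```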

Why do neural networks need activation functions?

Neural networks have to implement complex mapping functions, so they need non-linear activation functions. These bring in the non-linearity that enables a network to approximate a wide range of functions.

Why is activation important?

This is important to avoid large values accumulating high up the processing hierarchy. Lastly, activation functions are decision functions, and the ideal decision function is the Heaviside step function.

Why can neural networks learn complex representations?

A neural network is one of the methods of finding this function. Neural networks can learn complex representations because of the non-linearity of their activation functions. Without the non-linearity, the network is restricted to learning just linear functions.

What is activation term?

In a neural network, the activation function generally rescales the output range to 0 to 1 or -1 to 1. The term "activation" is used by analogy with a biological neuron: the unit gives an output (becomes active) only when the input to the activation function is in a specific range.
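As a small illustration of this range squashing, the sketch below pushes a few arbitrary inputs through a sigmoid (outputs between 0 and 1) and a tanh (outputs between -1 and 1). The choice of these two functions and of the sample inputs is an assumption; the source names only the ranges.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: maps any real input into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


# Arbitrary sample inputs spanning several orders of magnitude.
inputs = [-10.0, -1.0, 0.0, 1.0, 10.0]

sigmoid_out = [sigmoid(x) for x in inputs]
tanh_out = [math.tanh(x) for x in inputs]  # maps into (-1, 1)

for x, s, t in zip(inputs, sigmoid_out, tanh_out):
    print(f"{x:6.1f}  sigmoid={s:.4f}  tanh={t:+.4f}")
```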

Why do we need a neural network model?

We need a neural network model that can learn and represent almost any arbitrarily complex function mapping inputs to outputs. Neural networks are considered universal function approximators: with non-linear activations and enough hidden units, they can approximate a very broad class of functions to any desired accuracy.

What is the difference between a neuron and a linear network?

A neuron without an activation function is equivalent to a neuron with a linear activation function given by f(x) = x. Such an activation function adds no non-linearity, so the whole network would be equivalent to a single linear neuron. That is to say, a multi-layer linear network is equivalent to one linear node.
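The equivalence can be written out for two layers. The symbols here ($W_1$, $b_1$ for the first layer's weights and bias, $W_2$, $b_2$ for the second, $x$ for the input) are notation assumed for this sketch, not taken from the source:

$$
\begin{aligned}
h &= W_1 x + b_1 \\
y &= W_2 h + b_2 = (W_2 W_1)\,x + (W_2 b_1 + b_2)
\end{aligned}
$$

The result has the form $y = W x + b$ with $W = W_2 W_1$ and $b = W_2 b_1 + b_2$, which is a single linear layer. Applying the same substitution repeatedly collapses any number of linear layers in the same way.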

What is activation function?

In summary, activation functions provide building blocks that can be repeated along two dimensions of the network structure. Combined with an attenuation matrix that varies the weight of signaling from layer to layer, such a structure is known to be able to approximate an arbitrary and complex function.

What would happen if you only had linear layers in a neural network?

If you only had linear layers in a neural network, all the layers would essentially collapse to one linear layer, and, therefore, a "deep" neural network architecture effectively wouldn't be deep anymore but just a linear classifier.

Why is post-millennial excitement about deeper networks important?

The post-millennial excitement about deeper networks is because the patterns in two distinct classes of complex inputs have been successfully identified and put into use within larger business, consumer, and scientific markets.

What is a multilayer perceptron?

Artificial networks such as the multi-layer perceptron and its variants are matrices of functions with non-zero curvature that, when taken collectively as a circuit, can be tuned with attenuation grids to approximate more complex functions of non-zero curvature. These more complex functions generally have multiple inputs (independent variables).