Ten Ways to Easily Improve Oracle Solaris ZFS Filesystem Performance

- Add Enough RAM

- Add More RAM

- Boost Deduplication Performance With Even More RAM

- Use SSDs to Improve Read Performance

- Use SSDs to Improve Write Performance

- Use Mirroring

- Add More Disks

- Leave Enough Free Space

- Hire An Expert

- Be An Evil Tuner - But Know What You Do

Full Answer

How can I increase the speed of ZFS?

The more vdevs ZFS has to play with, the more shoulders it can place its load on and the faster your storage performance will become. This works both for increasing IOPS and for increasing bandwidth, and it'll also add to your storage space, so there's nothing to lose by adding more disks to your pool.

Why is ZFS so good for performance?

ZFS's copy-on-write design is great for performance because it gives ZFS the opportunity to turn random writes into sequential writes: it chooses blocks from the list of free blocks so that they are nicely in order and can be written quickly. That is, when there are enough free blocks.

How does ZFS ensure proper alignment between drives?

ZFS will attempt to ensure proper alignment by extracting the physical sector sizes from the disks. ZFS makes the implicit assumption that the sector size reported by drives is correct and calculates ashift based on that. The largest sector size is used per top-level vdev, so that partial sector modification overhead is eliminated.

Why does ZFS slow down when you don't have enough blocks?

If you don't have enough free blocks in your pool, ZFS is limited in its choice. It can't find enough blocks that are in order, so it can't create an optimal set of sequential writes, and write performance suffers.
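One way to keep an eye on free space is to parse the capacity column that `zpool list` reports. The following is a minimal sketch, assuming the tab-separated output of `zpool list -H -o name,capacity`; the function name, the sample pool names and the 80% threshold are illustrative assumptions, not values taken from the text above.

```python
from typing import List


def pools_over_threshold(zpool_output: str, threshold_pct: int = 80) -> List[str]:
    """Return names of pools whose used capacity is at or above threshold_pct.

    Expects text in the shape of `zpool list -H -o name,capacity`,
    i.e. one tab-separated "name<TAB>NN%" line per pool.
    """
    full = []
    for line in zpool_output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        name, capacity = line.split("\t")
        used_pct = int(capacity.strip().rstrip("%"))
        if used_pct >= threshold_pct:
            full.append(name)
    return full


# Sample text standing in for real command output (hypothetical pools).
sample = "tank\t45%\nbackup\t91%\n"
print(pools_over_threshold(sample))
```

The threshold is a parameter so it can be tuned to whatever fill level turns out to hurt write performance on a given pool.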


Does ZFS use a lot of RAM?

So yes, it can run on a watchOS-level amount of RAM. ZFS data deduplication does not require much more RAM than the non-deduplicated case. Performance will depend heavily on IOPS when the DDT entries are not in cache, but the system will run, slowly, even with minuscule RAM.

How much RAM do I need for ZFS cache?

On Linux, ZFS uses 50% of the installed memory for ARC caching by default. So, if you have 8 GB of memory installed on your computer, ZFS will use at most 4 GB of memory for ARC caching.
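The arithmetic is simple enough to sketch. The example below computes the default ceiling described above and formats a module option line that caps the ARC at a chosen size. It assumes OpenZFS on Linux, where the `zfs_arc_max` module parameter takes a value in bytes; the function names are hypothetical.

```python
GIB = 1024 ** 3


def default_arc_max_bytes(installed_bytes: int) -> int:
    """Default ARC ceiling as described above: half of installed memory."""
    return installed_bytes // 2


def modprobe_line(arc_max_bytes: int) -> str:
    """Format a module option line that caps the ARC at arc_max_bytes."""
    return f"options zfs zfs_arc_max={arc_max_bytes}"


installed = 8 * GIB
print(default_arc_max_bytes(installed) // GIB)  # 4
print(modprobe_line(2 * GIB))                   # options zfs zfs_arc_max=2147483648
```

A line like this typically goes in a file under `/etc/modprobe.d/`, and the value currently in effect can usually be read from `/sys/module/zfs/parameters/zfs_arc_max`.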

Is ZFS better than Ext4?

Ext4 is convenient because it's the default on Linux, but it lacks the user-friendly, up-to-date approach of ZFS. On the other hand, ZFS has higher hardware requirements, and many devices will not have sufficient CPU and memory available to run ZFS with its compression and deduplication advantages.

What is so good about ZFS?

The advantages of using ZFS include: ZFS is built into the Oracle OS and offers an ample feature set and data services free of cost. ZFS is also a free, open source filesystem that can be expanded by adding hard drives to the data storage pool.

How much RAM do I need for ZFS deduplication?

For every TB of pool data, you should expect 5 GB of dedup table data, assuming an average block size of 64K. This means you should plan for at least 20 GB of system RAM per TB of pool data if you want to keep the dedup table in RAM, plus any extra memory for other metadata, plus an extra GB for the OS.
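The figures above can be reproduced with a short calculation. This sketch rests on two assumptions that the text does not state: each dedup table entry takes about 320 bytes, and only a quarter of the cache is available for metadata such as the dedup table. Both are chosen because they yield the 5 GB and 20 GB figures quoted; treat them as illustrative, not authoritative.

```python
TIB = 1024 ** 4
GIB = 1024 ** 3


def ddt_bytes(pool_bytes: int, avg_block_bytes: int = 64 * 1024,
              entry_bytes: int = 320) -> int:
    """Estimate dedup table size: one entry per block in the pool.

    entry_bytes=320 is an assumption, not a value from the text.
    """
    blocks = pool_bytes // avg_block_bytes
    return blocks * entry_bytes


def ram_for_ddt(pool_bytes: int, metadata_fraction: float = 0.25) -> float:
    """RAM needed so the table fits in the metadata share of the cache.

    metadata_fraction=0.25 is an assumption, not a value from the text.
    """
    return ddt_bytes(pool_bytes) / metadata_fraction


print(ddt_bytes(TIB) / GIB)    # 5.0
print(ram_for_ddt(TIB) / GIB)  # 20.0
```

Smaller average block sizes raise the estimate proportionally, since the table holds one entry per block.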

How much memory is ZFS using?

ZFS Adaptive Replacement Cache (ARC) tends to use up to 75% of the installed physical memory on servers with 4GB or less and up to everything except 1GB of memory on servers with more than 4GB of memory to cache data in a bid to improve performance.

Is ZFS the best file system?

Sad as it makes me, as of 2017, ZFS is the best filesystem for long-term, large-scale data storage. Although it can be a pain to use (except in FreeBSD, Solaris, and purpose-built appliances), the robust and proven ZFS filesystem is the only trustworthy place for data outside enterprise storage systems.

Is ZFS better than RAID?

ZFS over HW RAID is fine, but only if you understand what this means. Specifically: without redundancy at the vdev level, ZFS can detect but not fix data corruption, and a faulty controller can totally trash your pool (a point only partially invalidated by the fact that a dying CPU/RAM/MB can have a similar effect).

Should I use ZFS on laptop?

If you've got loads of RAM, loads of CPU resources, and a need for ZFS, it's a good production file system. It's resource heavy though, so I'd skip using it unless you benefit from what it provides. As a side note, if it's not soldered on, I recommend replacing the SSD.

Can Windows read ZFS?

There is no OS level support for ZFS in Windows. As other posters have said, your best bet is to use a ZFS aware OS in a VM.

How do I configure ZFS?

How to Set Up ZFS on a System

- Create the basic file system. ...
- Set the properties to be shared by children file systems. ...
- Create the individual file systems that are grouped under the basic file system. ...
- (Optional) Set properties specific to the individual file system.
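The steps above can be sketched as a small helper that builds the corresponding `zfs create` and `zfs set` command lines without running them. The pool and dataset names (`tank/home`, `alice`, `bob`) and the property values are hypothetical placeholders, and the helper name is an assumption for illustration.

```python
from typing import Dict, List


def zfs_setup_commands(base: str, shared_props: Dict[str, str],
                       children: Dict[str, Dict[str, str]]) -> List[List[str]]:
    """Build argv lists for the four setup steps, in order."""
    # Step 1: create the basic file system.
    cmds = [["zfs", "create", base]]
    # Step 2: set properties that child file systems will inherit.
    for key, value in shared_props.items():
        cmds.append(["zfs", "set", f"{key}={value}", base])
    for child, props in children.items():
        dataset = f"{base}/{child}"
        # Step 3: create each individual file system under the base.
        cmds.append(["zfs", "create", dataset])
        # Step 4 (optional): set properties specific to this file system.
        for key, value in props.items():
            cmds.append(["zfs", "set", f"{key}={value}", dataset])
    return cmds


for cmd in zfs_setup_commands("tank/home", {"compression": "on"},
                              {"alice": {"quota": "10G"}, "bob": {}}):
    print(" ".join(cmd))
```

Returning argv lists instead of executing them keeps the sketch safe to run anywhere; each list could be passed to `subprocess.run` on a system with ZFS installed.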

Is ZFS a JBOD?

There is no JBOD in ZFS (most of the time)

Determining Cache and Pool Size

After the ARC, ZIL and L2ARC come the hard disks that make up the ZFS pool. This tier is where your data lives and is usually composed of high capacity hard disks. Performance at this tier is the lowest of all, as it depends on spinning disks rather than flash drives.

IOPS by Type of Storage

In order to configure your FreeNAS or TrueNAS system for ideal performance between cache and pool, it is important to determine the Working Set Size of your system. Knowing the active data and performance requirements of your storage environment will allow you to put together a system that maximizes performance.

Conclusion

ZFS Caching can be an excellent way to maximize your system performance and give you flash speed with spinning disk capacity. TrueNAS capitalizes on this technology and the staff at iXsystems have the expertise to help you design a system that fits your needs and leverages the caching capabilities of ZFS to their full extent.

How to understand ZFS?

To really understand ZFS, you need to pay real attention to its actual structure. ZFS merges the traditional volume management and filesystem layers, and it uses a copy-on-write transactional mechanism—both of these mean the system is very structurally different than conventional filesystems and RAID arrays.

What is a zpool Vdev?

Each zpool consists of one or more vdevs (short for virtual device). Each vdev, in turn, consists of one or more real devices. Most vdevs are used for plain storage, but several special support classes of vdev exist as well, including CACHE, LOG, and SPECIAL. Each of these vdev types can offer one of five topologies: single-device, RAIDz1, ...

What is a Zpool?

The zpool is the uppermost ZFS structure. A zpool contains one or more vdevs, each of which in turn contains one or more devices. Zpools are self-contained units: one physical computer may have two or more separate zpools on it, but each is entirely independent of any others.

Is there redundancy in a Zpool?

There is absolutely no redundancy at the zpool level: if any storage vdev or SPECIAL vdev is lost, the entire zpool is lost with it. Modern zpools can survive the loss of a CACHE or LOG vdev, though they may lose a small amount of dirty data if they lose a LOG vdev during a power outage or system crash.

Can a mirror Vdev survive a failure?

A mirror vdev can survive any failure, so long as at least one device in the vdev remains healthy.
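The relationship between devices, vdevs and the zpool can be modelled in a few lines. This is a simplified sketch, not how ZFS represents pools internally: the class names are hypothetical, only storage vdevs are modelled, and the RAIDz failure tolerances (one device for RAIDz1, two for RAIDz2) come from general knowledge of those layouts, not from the text above.

```python
from dataclasses import dataclass
from typing import List

# Device failures each non-mirror topology can absorb (assumed values).
PARITY = {"single": 0, "raidz1": 1, "raidz2": 2}


@dataclass
class Vdev:
    topology: str   # "single", "mirror", "raidz1" or "raidz2"
    devices: int    # number of real devices in the vdev
    failed: int = 0 # number of devices that have failed

    def healthy(self) -> bool:
        if self.topology == "mirror":
            # A mirror survives while at least one device remains.
            return self.devices - self.failed >= 1
        return self.failed <= PARITY[self.topology]


@dataclass
class Zpool:
    storage_vdevs: List[Vdev]

    def survives(self) -> bool:
        # No redundancy at the pool level: losing any storage vdev
        # loses the whole pool.
        return all(vdev.healthy() for vdev in self.storage_vdevs)


pool = Zpool([Vdev("mirror", 2, failed=1), Vdev("mirror", 2)])
print(pool.survives())  # True

pool.storage_vdevs[0].failed = 2
print(pool.survives())  # False
```

The second case shows why the pool is only as safe as its weakest vdev: one fully failed mirror takes the healthy one down with it.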

Is ZFS a RAID0?

It is a common misconception that ZFS "stripes" writes across the pool, but this is inaccurate. A zpool is not a funny-looking RAID0; it's a funny-looking JBOD, with a complex distribution mechanism subject to change.

What is the default size of a zvol?

Zvols have a volblocksize property that is analogous to recordsize. The default size is 8KB, which is the size of a page on the SPARC architecture. Workloads that use smaller IOs (such as swap on x86, which uses 4096-byte pages) will benefit from a smaller volblocksize.

What is ashift in vdev?

Top-level vdevs contain an internal property called ashift, which stands for alignment shift. It is set at vdev creation and is otherwise immutable. It can be read using the zdb command. It is calculated as the maximum base 2 logarithm of the physical sector size of any child vdev and it alters the disk format such that writes are always done according to it. This makes 2^ashift the smallest possible IO on a vdev. Configuring ashift correctly is important because partial sector writes incur a penalty where the sector must be read into a buffer before it can be written.
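The calculation described above is easy to illustrate. This is a minimal sketch of the rule as stated (the maximum base 2 logarithm of the child devices' physical sector sizes), not the actual ZFS code; the function name is hypothetical and sector sizes are assumed to be powers of two.

```python
from typing import Iterable


def ashift_for(sector_sizes: Iterable[int]) -> int:
    """Return the largest base-2 logarithm among the given sector sizes (bytes)."""
    shifts = []
    for size in sector_sizes:
        if size <= 0 or size & (size - 1):
            raise ValueError(f"sector size {size} is not a power of two")
        shifts.append(size.bit_length() - 1)  # exact log2 for powers of two
    return max(shifts)


print(ashift_for([512, 512]))        # 9
print(ashift_for([512, 4096]))       # 12
print(2 ** ashift_for([512, 4096]))  # 4096, the smallest possible IO on the vdev
```

Mixing a 512-byte disk with a 4096-byte disk gives ashift 12, so no write on that vdev touches only part of a 4096-byte sector.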

Does ZFS support Illumos?

ZFS will also attempt minor tweaks on various platforms when whole disks are provided. On Illumos, ZFS will enable the disk cache for performance. It will not do this when given partitions to protect other filesystems sharing the disks that might not be tolerant of the disk cache, such as UFS.

Does ZFS have an IO elevator?

On Linux, the IO elevator will be set to noop to reduce CPU overhead. ZFS has its own internal IO elevator, which renders the Linux elevator redundant. The Performance Tuning page explains this behavior in more detail. On illumos, ZFS attempts to enable the write cache on a whole disk.
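To check which elevator a disk is using on Linux, you can read `/sys/block/<device>/queue/scheduler`, which lists the available schedulers with the active one in square brackets. The sketch below only parses such a line; the function name is hypothetical, and newer kernels may show `none` where older ones showed `noop`.

```python
def active_scheduler(scheduler_file_text: str) -> str:
    """Return the scheduler marked with [brackets] in a queue/scheduler line."""
    for token in scheduler_file_text.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    raise ValueError("no active scheduler marked")


# Sample lines standing in for the contents of the sysfs file.
print(active_scheduler("[noop] deadline cfq"))  # noop
print(active_scheduler("noop deadline [cfq]"))  # cfq
```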

Comparing speed, space and safety per raidz type

ZFS includes data integrity verification, protection against data corruption, support for high storage capacities, great performance, replication, snapshots and copy-on-write clones, and self healing which make it a natural choice for data storage.

What are the advantages and disadvantages of each raid type?

A raid0 or striping array has no redundancy, but provides the best performance and additional storage. Any drive failure destroys the entire array, so raid0 is not safe at all. If you need really fast scratch space for video editing, then raid0 does well.

Specification of the testing raid chassis and environment

All tests are run on the same day on the same machine. Our goal was to rule out variability in the hardware so that any differences in performance were the result of the ZFS raid configuration itself.

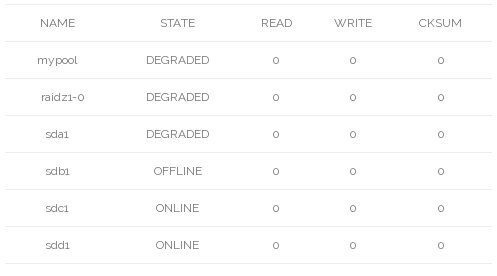

Quick Summary: ZFS Speeds and Capacity

The following table shows a summary of all the tests and lists the number of disks, raid types, capacity and performance metrics for easy comparison. The speeds display "w" for write, "rw" for rewrite and "r" for read, and throughput is in megabytes per second.

Spinning platter hard drive raids

The server is set up using an Avago LSI Host Bus Adapter (HBA) and not a raid card. The HBA is connected to the SAS expander using a single multilane cable to control all 24 drives. LZ4 compression is disabled and all writes are synced in real time by the testing suite, Bonnie++.

Solid State (Pure SSD) raids

The 24 slot raid chassis is filled with Samsung 840 256GB SSD (MZ-7PD256BW) drives. Drives are connected through an Avago LSI 9207-8i HBA controller installed in a PCIe x16 slot. TRIM is not used and not needed due to ZFS's copy-on-write drive leveling.

Some thoughts on the RAID results

Raid 6 or raidz2 is a good mix of all three properties: good speeds, integrity and capacity. The ability to lose two drives is fine for a home or office raid where you can get to the machine in a short amount of time to put in another disk. For data center use, raidz2 (raid6) is good if you have a hot spare in place.
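The capacity side of that trade-off can be estimated with simple arithmetic. The sketch below computes nominal capacity for a single array built from the 24 x 256GB drives described earlier. These are not measured results: the numbers ignore metadata, padding and GB versus GiB differences, assume two-way mirrors and one vdev per array, and use hypothetical function names.

```python
# Devices' worth of space given up to parity in each layout.
PARITY_DISKS = {"raid0": 0, "raidz1": 1, "raidz2": 2, "raidz3": 3}


def usable_disks(layout: str, disks: int) -> int:
    """Number of disks' worth of space left for data."""
    if layout == "mirror":
        return disks // 2  # two-way mirrors assumed
    return disks - PARITY_DISKS[layout]


def nominal_capacity_gb(layout: str, disks: int, disk_gb: int) -> int:
    """Nominal capacity in GB, ignoring filesystem overhead."""
    return usable_disks(layout, disks) * disk_gb


for layout in ("raid0", "mirror", "raidz1", "raidz2", "raidz3"):
    print(layout, nominal_capacity_gb(layout, 24, 256))
```

With 24 drives, raidz2 gives up only two drives' worth of space while tolerating two failures, which is the balance the paragraph above describes.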