What is Apache Hadoop?

The Apache™ Hadoop® project develops open-source software for reliable, scalable, distributed computing. The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models.

Is there a commercial version of Hadoop?

A number of companies offer commercial implementations or support for Hadoop. The Apache Software Foundation has stated that only software officially released by the Apache Hadoop Project can be called Apache Hadoop or Distributions of Apache Hadoop.

What is a Hadoop cluster?

Hadoop is an open-source software framework for storing data and running applications on clusters of commodity hardware. It provides massive storage for any kind of data, enormous processing power and the ability to handle virtually limitless concurrent tasks or jobs.

What is Hadoop and why is it important?

In 2008, Yahoo released Hadoop as an open-source project. Today, Hadoop’s framework and ecosystem of technologies are managed and maintained by the non-profit Apache Software Foundation (ASF), a global community of software developers and contributors. Hadoop is important because it can store and process huge amounts of any kind of data quickly.

What is Hive used for?

Hive allows users to read, write, and manage petabytes of data using SQL. Hive is built on top of Apache Hadoop, which is an open-source framework used to efficiently store and process large datasets. As a result, Hive is closely integrated with Hadoop, and is designed to work quickly on petabytes of data.

What is Hadoop and why is it used?

Apache Hadoop is an open source framework that is used to efficiently store and process large datasets ranging in size from gigabytes to petabytes of data. Instead of using one large computer to store and process the data, Hadoop allows clustering multiple computers to analyze massive datasets in parallel more quickly.

Is HDFS a software?

HDFS is a distributed file system that handles large data sets running on commodity hardware. It is used to scale a single Apache Hadoop cluster to hundreds (and even thousands) of nodes. HDFS is one of the major components of Apache Hadoop, the others being MapReduce and YARN.

Is Hadoop software or an operating system?

Hadoop is an open-source software framework for storing data and running applications on clusters of commodity hardware.

What is an example of Hadoop?

Financial services companies use analytics to assess risk, build investment models, and create trading algorithms; Hadoop has been used to help build and run those applications. Retailers use it to help analyze structured and unstructured data to better understand and serve their customers.

Is Hadoop similar to SQL?

Hadoop and SQL both manage data, but in different ways. Hadoop is a framework of software components, while SQL is a query language. For big data, both tools have pros and cons. Hadoop handles larger data sets but follows a write-once model: data is written once and then read many times.

Where is HDFS used?

HDFS (Hadoop Distributed File System) is the primary storage system used by Hadoop applications. This open source framework works by rapidly transferring data between nodes. It's often used by companies who need to handle and store big data.

Are Hadoop and HDFS the same?

Hadoop itself is an open source distributed processing framework that manages data processing and storage for big data applications. HDFS is a key part of the many Hadoop ecosystem technologies. It provides a reliable means for managing pools of big data and supporting related big data analytics applications.

What language is HDFS?

Java. The Hadoop Distributed File System (HDFS) is a distributed, scalable, and portable file system written in Java for the Hadoop framework.

What are the 4 components of Hadoop?

Hadoop has four major elements: HDFS, MapReduce, YARN, and Hadoop Common. Most other tools and solutions in the ecosystem supplement or support these elements.

Who uses Hadoop?

363 companies reportedly use Hadoop in their tech stacks, including Uber, Airbnb, and Pinterest.

What are the 2 parts of Hadoop?

HDFS (storage) and YARN (processing) are the two core components of Apache Hadoop.

Where is Hadoop being used?

Hadoop is used in big data applications that have to merge and join data from different sources: clickstream data, social media data, transaction data, or data in any other format.

What is Hadoop and its types?

There are three components of Hadoop:

• Hadoop HDFS: the Hadoop Distributed File System (HDFS) is the storage unit.

• Hadoop MapReduce: Hadoop MapReduce is the processing unit.

• Hadoop YARN: Yet Another Resource Negotiator (YARN) is the resource management unit.

What are the basics of Hadoop?

Hadoop is a framework for working with big data. It is part of the big data ecosystem, which consists of much more than Hadoop itself. Hadoop is a distributed framework that makes it easier to process large data sets that reside in clusters of computers.

Why is it called Hadoop?

Doug Cutting, who was working at Yahoo! at the time and is now Chief Architect of Cloudera, named the project after his son's toy elephant. Cutting's son was 2 years old at the time and just beginning to talk. He called his beloved stuffed yellow elephant "Hadoop" (with the stress on the first syllable).

What language is Hadoop?

The Hadoop framework itself is mostly written in the Java programming language, with some native code in C and command line utilities written as shell scripts.

What is Hadoop MapReduce?

Hadoop MapReduce is an implementation of the MapReduce programming model for large-scale data processing.
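To make the programming model concrete, here is a minimal single-process sketch of the map, shuffle, and reduce steps applied to word counting. It is plain Python and does not use Hadoop or any Hadoop API; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical and chosen only for illustration. A real Hadoop job would run the map and reduce steps in parallel across cluster nodes.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map step: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle step: group all emitted values by their key."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce step: combine all values for one key into a single count."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence inside one process."""
    pairs = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data big clusters", "data nodes"]))
```

The point of the split is that each map call sees only one line and each reduce call sees only one key, which is what lets a framework spread the work over many machines.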

How does a Hadoop cluster work?

Each datanode serves up blocks of data over the network using a block protocol specific to HDFS. The file system uses TCP/IP sockets for communication. Clients use remote procedure calls (RPC) to communicate with each other.

What is the core of Apache Hadoop?

The core of Apache Hadoop consists of a storage part, known as the Hadoop Distributed File System (HDFS), and a processing part, which is based on the MapReduce programming model. Hadoop splits files into large blocks and distributes them across nodes in a cluster.
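The splitting and distribution step can be sketched in a few lines of plain Python. This is an illustration only, not how HDFS is implemented: the tiny block size, the node names, and the round-robin placement are all assumptions made for the example, and the helper names `split_into_blocks` and `distribute` are hypothetical.

```python
from typing import Dict, List


def split_into_blocks(data: bytes, block_size: int) -> List[bytes]:
    """Cut the input into fixed-size blocks; the last block may be shorter."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def distribute(blocks: List[bytes], nodes: List[str]) -> Dict[str, List[int]]:
    """Assign block indexes to nodes round-robin (an assumed placement rule)."""
    if not nodes:
        raise ValueError("at least one node is required")
    placement: Dict[str, List[int]] = {node: [] for node in nodes}
    for index in range(len(blocks)):
        placement[nodes[index % len(nodes)]].append(index)
    return placement


if __name__ == "__main__":
    blocks = split_into_blocks(b"abcdefghij", block_size=4)
    print(blocks)
    print(distribute(blocks, ["node-a", "node-b"]))
```

Because each node holds only some of the blocks, processing can be scheduled on the node that already stores the data it needs.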

What is Hadoop used for?

Hadoop provides a software framework for distributed storage and processing of big data using the MapReduce programming model. It was originally designed for computer clusters built from commodity hardware, which is still the common use. It has since also found use on clusters of higher-end hardware.

What is the difference between Hadoop 1 and Hadoop 2?

The biggest difference between Hadoop 1 and Hadoop 2 is the addition of YARN (Yet Another Resource Negotiator), which replaced the MapReduce engine in the first version of Hadoop. YARN strives to allocate resources to various applications effectively.

How many PB does Facebook have?

In 2010, Facebook claimed that they had the largest Hadoop cluster in the world with 21 PB of storage. In June 2012, they announced the data had grown to 100 PB and later that year they announced that the data was growing by roughly half a PB per day.

Overview

Apache Hadoop is a collection of open-source software utilities that facilitates using a network of many computers to solve problems involving massive amounts of data and computation. It provides a software framework for distributed storage and processing of big data using the MapReduce programming model. Hadoop was originally designed for computer clusters built from commodity hardware, which is still the common use. It has since also found use on clusters of h…

History

According to its co-founders, Doug Cutting and Mike Cafarella, the genesis of Hadoop was the Google File System paper that was published in October 2003. This paper spawned another one from Google – "MapReduce: Simplified Data Processing on Large Clusters". Development started on the Apache Nutch project, but was moved to the new Hadoop subproject in January 2006. Doug Cutting, who was working at Yahoo! at the time, named it after his son's toy elephant. The initial c…

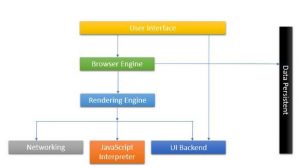

Architecture

Hadoop consists of the Hadoop Common package, which provides file system and operating system level abstractions, a MapReduce engine (either MapReduce/MR1 or YARN/MR2) and the Hadoop Distributed File System (HDFS). The Hadoop Common package contains the Java Archive (JAR) files and scripts needed to start Hadoop.

Prominent use cases

On 19 February 2008, Yahoo! Inc. launched what they claimed was the world's largest Hadoop production application. The Yahoo! Search Webmap is a Hadoop application that runs on a Linux cluster with more than 10,000 cores and produced data that was used in every Yahoo! web search query. There are multiple Hadoop clusters at Yahoo! and no HDFS file systems or MapReduce jobs are split across multiple data centers. Every Hadoop cluster node bootstraps the Linux ima…

Hadoop hosting in the cloud

Hadoop can be deployed in a traditional onsite datacenter as well as in the cloud. The cloud allows organizations to deploy Hadoop without the need to acquire hardware or specific setup expertise.

Commercial support

A number of companies offer commercial implementations or support for Hadoop.

The Apache Software Foundation has stated that only software officially released by the Apache Hadoop Project can be called Apache Hadoop or Distributions of Apache Hadoop. The naming of products and derivative works from other vendors and the term "compatible" are somewhat controversial within the Hadoop developer community.

Papers

Several papers influenced the birth and growth of Hadoop and big data processing, including:

• Jeffrey Dean, Sanjay Ghemawat (2004) MapReduce: Simplified Data Processing on Large Clusters, Google. This paper inspired Doug Cutting to develop an open-source implementation of the MapReduce framework. He named it Hadoop, after his son's toy elephant.

See also

• Apache Accumulo – Secure Bigtable

• Apache Cassandra, a column-oriented database that supports access from Hadoop

• Apache CouchDB, a database that uses JSON for documents, JavaScript for MapReduce queries, and regular HTTP for an API